It’s not often that you see infographics with soul. Even though visuals are significantly more fun to look at than actual data tables, the oversimplification of infographics tends to suck out the interest in favor of making things quickly comprehensible (often to the detriment of the true data points, like the 2000 election map). This Röyksopp video, on the other hand, a delightful crossover between games, illustration, and infographic, is all about the storyline and subverts data to be a secondary player. This is not pure data visualization on the lines of the front page feature in USA Today. It is, instead, a touching story encased in the traditional visual language and iconography of infographics. The video’s currency belies its age: it won the 2002 MTV Europe music video award for best video.

Our information environment is growing both more dispersed and more saturated. Infographics serve as a filter, distilling hundreds of data points down into comprehensible form. They help us peer into the impenetrable data pools in our day to day life, and, in the best case, provide an alternative way to reevaluate our surroundings and make better decisions. (Tufte has also famously argued that infographics can be used to make incredibly poor decisions–caveat lector.)

But infographics do something else; more than visual representations of data, they are beautiful renderings of the invisible and obscured. They stylishly separate signal from noise, bringing a sense of comprehensive simplicity to an overstimulating environment. That’s what makes the video so wonderful. In the non-physical space of the animation, the datasphere is made visible. The ambient informatics reflect the information saturation that we navigate every day (some with more serenity than others), but the woman in the video is unperturbed by the massive complexity of the systems that surround her. Her bathroom is part of a maze of municipal water pipes; she navigates the public transport grid with thousands of others; she works at a computer terminal dealing with massive amounts of data (which are rendered for her in dancing, and therefore somewhat useless, infographics: a clever wink to the audience); she eats food from a worldwide system of agricultural production that delivers it to her (as far as she can tell) in mere moments. This is the complexity that we see and we know and we ignore, just like her. This recursiveness and reference to the real is deftly handled. The video is designed to emphasize the larger picture and allows us to make connections without being visually bogged down in the particulars and textures of reality. The girl’s journey from morning to pint is utterly familiar, yet rendered at this larger scale and with the pointed clarity of an information graphic, the narrative is beautiful and touching.

via information aesthetics

Author Archives: jesse wilbur

smarter links for a better wikipedia

As Wikipedia continues its evolution, smaller and smaller pieces of its infrastructure come up for improvement. The latest piece to step forward to undergo enhancement: the link. “Computer scientists at the University of Karlsruhe in Germany have developed modifications to Wikipedia’s underlying software that would let editors add extra meaning to the links between pages of the encyclopaedia.” (full article) While this particular idea isn’t totally new (at least one previous attempt has been made: platypuswiki), SemanticWiki is using a high profile digital celebrity, which brings media attention and momentum.

What’s happening here is that under the Wikipedia skin, the SemanticWiki uses an extra bit of syntax in the link markup to inject machine readable information. A normal link in wikipedia is coded like this [link to a wiki page] or [http://www.someothersite.com link to an outside page]. What more do you need? Well, if by “you” I mean humans, the answer is: not much. We can gather context from the surrounding text. But our computers get left out in the cold. They aren’t smart enough to understand the context of a link well enough to make semantic decisions with the form “this link is related to this page this way”. Even among search engine algorithms, where PageRank rules them all, PageRank counts all links as votes, which increase the linked page’s value. Even PageRank isn’t bright enough to understand that you might link to something to refute or denigrate its value. When we write, we rely on judgement by human readers to make sense of a link’s context and purpose. The researchers at Karlsruhe, on the other hand, are enabling machine comprehension by inserting that contextual meaning directly into the links.
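To make the "every link is a vote" point concrete, here is a minimal sketch of a PageRank-style calculation. It is a simplified textbook power iteration, not Google's actual implementation, and the page names (`fan_page`, `rebuttal`, `essay`, `unlinked`) are invented for illustration. The thing to notice is that the link graph has no place to record why a link was made.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank. `links` maps each page to the pages it links to."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Every page starts each round with the same small baseline score.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A link is an untyped vote: the target gets a share of the
                # linking page's rank no matter why the link was made.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Pages with no outgoing links spread their rank evenly.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


# Hypothetical mini-web: one page praises the essay, one links to refute it.
with_rebuttal = pagerank({
    "fan_page": ["essay"],
    "rebuttal": ["essay"],  # linked in order to disagree
    "essay": [],
    "unlinked": [],
})
without_rebuttal = pagerank({
    "fan_page": ["essay"],
    "rebuttal": [],
    "essay": [],
    "unlinked": [],
})
```

In this toy graph the essay scores higher when the rebuttal links to it than when it does not, which is exactly the blindness to context described above.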

SemanticWiki links look just like Wikipedia links, only slightly longer. They include info like

- categories: An article on Karlsruhe, a city in Germany, could be placed in the City Category by adding [[Category: City]] to the page.

- More significantly, you can add typed relationships. Karlsruhe [[:is located in::Germany]] would show up as Karlsruhe is located in Germany (the : before is located in saves typing). Other examples: in the Washington D.C. article, you can add [[is capital of:: United States of America]]. The types of relationships (“is capital of”) can proliferate endlessly.

- attributes, which specify simple properties related to the content of an article without creating a link to a new article. For example, [[population:=3,396,990]]
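To show what "machine readable" buys you, here is a rough sketch of how a program might pull the three kinds of annotation out of a page's text. It is based only on the syntax quoted above, not on the actual SemanticWiki code; the function name `parse_annotations` and the regular expression are my own assumptions.

```python
import re

# Matches anything of the form [[ ... ]] that contains no nested brackets.
ANNOTATION = re.compile(r"\[\[\s*([^\[\]]+?)\s*\]\]")


def parse_annotations(wikitext):
    """Sort [[...]] annotations into categories, relations, and attributes."""
    categories, relations, attributes = [], [], []
    for body in ANNOTATION.findall(wikitext):
        if "::" in body:
            # Typed relationship, e.g. [[is capital of:: United States of America]]
            name, value = body.split("::", 1)
            # A leading ":" only affects display, so it is dropped here.
            relations.append((name.lstrip(":").strip(), value.strip()))
        elif ":=" in body:
            # Attribute, e.g. [[population:=3,396,990]]
            name, value = body.split(":=", 1)
            attributes.append((name.strip(), value.strip()))
        elif body.lower().startswith("category:"):
            # Category, e.g. [[Category: City]]
            categories.append(body.split(":", 1)[1].strip())
    return {
        "categories": categories,
        "relations": relations,
        "attributes": attributes,
    }


sample = (
    "Karlsruhe [[:is located in::Germany]] "
    "[[Category: City]] [[population:=3,396,990]]"
)
parsed = parse_annotations(sample)
```

Once the annotations are extracted like this, each one is effectively a small statement (page, relationship, value) that software can sort and query without reading the surrounding prose.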

Adding semantic information to links is a good idea, and hewing closely to the current Wikipedia syntax is a smart tactic. But here’s why I’m not more optimistic: this solution combines the messiness of tagging with the bother of writing machine readable syntax. This combo reminds me of a great Simpsons quote, where Homer says, “Nuts and gum, together at last!” Tagging and semantic markup are not complementary functions – tagging was invented to put humans first, to relieve our fuzzy brains from the mechanical strictures of machine readable categorization; writing relationships in a machine readable format puts the machine squarely in front. It requires the proliferation of Wikipedia-type articles to explain each of the typed relationships and property names, which can quickly become unmaintainable by humans, exacerbating the very problem it’s trying to solve.

But perhaps I am underestimating the power of the network. Maybe the dedication of the Wikipedia community can overcome those intractable systemic problems. Through the quiet work of the gardeners who sleeplessly tend their alphanumeric plots, the fact-checkers and passers-by, maybe the SemanticWiki will sprout links with both human and computer sensible meanings. It’s feasible that the size of the network will self-generate consensus on the typology and terminology for links. And it’s likely that if Wikipedia does it, it won’t be long before semantic linking makes its way into the rest of the web in some fashion. If this is a success, I can foresee the semantic web becoming a reality, finally bursting forth from the SemanticWiki seed.

UPDATE:

I left off the part about how humans benefit from SemanticWiki type links. Obviously this better be good for something other than bringing our computers up to a second grade reading level. It should enable computers to do what they do best: sort through massive piles of information in milliseconds.

How can I search, using semantic annotations? – It is possible to search for the entered information in two different ways. On the one hand, one can enter inline queries in articles. The results of these queries are then inserted into the article instead of the query. On the other hand, one can use a basic search form, which also allows you to do some nice things, such as picture search and basic wildcard search.

For example, if I wanted to write an article on Acting in Boston, I might want a list of all the actors who were born in Boston. How would I do this now? I would count on the network to maintain a list of Bostonian thespians. But with SemanticWiki I can just add this: <ask>[[Category:Actor]] [[born in::Boston]]</ask>, which will replace the inline query with the desired list of actors.

To do a more straightforward search I would go to the basic search page. If I had any questions about Berlin, I would enter it into the Subject field. SemanticWiki would return a list of short sentences where Berlin is the subject.
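The two search styles described above can be sketched in a few lines. This is a toy model of the idea, not SemanticWiki's query engine: the `pages` structure, the function names `ask` and `statements_about`, and the placeholder page titles are all invented for illustration.

```python
def ask(pages, category, relation, value):
    """Inline-query style: pages in `category` whose `relation` points at `value`."""
    return sorted(
        title
        for title, page in pages.items()
        if category in page["categories"] and (relation, value) in page["relations"]
    )


def statements_about(pages, subject):
    """Subject-search style: short sentences in which `subject` is the subject."""
    page = pages.get(subject, {"relations": []})
    return [f"{subject} {relation} {value}" for relation, value in page["relations"]]


# Hypothetical annotated pages (placeholder titles, not real data).
pages = {
    "Actor A": {"categories": ["Actor"], "relations": [("born in", "Boston")]},
    "Actor B": {"categories": ["Actor"], "relations": [("born in", "Chicago")]},
    "Painter C": {"categories": ["Painter"], "relations": [("born in", "Boston")]},
    "Berlin": {"categories": ["City"], "relations": [("is located in", "Germany")]},
}

boston_actors = ask(pages, "Actor", "born in", "Boston")
berlin_facts = statements_about(pages, "Berlin")
```

Here `boston_actors` contains only "Actor A", and `berlin_facts` contains the single sentence "Berlin is located in Germany". The list stays current as long as the annotations do, with nobody maintaining it by hand.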

But this semantic functionality is limited to simple constructions and nouns—it is not well suited for concepts like 'politics,' or 'vacation'. One other point: SemanticWiki relationships are bounded by the size of the wiki. Yes, digital encyclopedias will eventually cover a wide range of human knowledge, but never all. In the end, SemanticWiki promises a digital network populated by better links, but it will take the cooperation of the vast human network to build it up.

digital comics

If you want to learn how to draw comics you can go to the art section of any bookstore and pick up books that will tell you how to draw the marvel way, how to draw manga, how to draw cutting edge comics, how to draw villains, women, horror, military, etc. But drawing characters is different than making comics. Will Eisner was the generator of the term ‘sequential art’ and the first popular theory of comics. Scott McCloud is his recent successor. Eisner created the vocabulary of sequential art in his long-running course at the School of Visual Arts in NYC. McCloud helped a generation of comic book readers grasp that vocabulary in Understanding Comics, by creating a graphic novel that employed comic art to explain comic theory. But both Eisner and McCloud wrote about a time when comic delivery was bound to newspapers and twenty-two page glossy, stapled pages.

Whither the network? McCloud treats the possibilities of the Internet in his second book, Reinventing Comics, but mostly as a distribution mechanism. We shouldn’t overlook the powerful effect the ‘net has had on individual producers who, in the past, would have created small runs of photocopied books to distribute locally. Now, of course, they can put their panels on the web and have a potential audience of millions. Some even make a jump from the web into print. Most web comics are sufficiently happy to ride the network to a wider audience without exploring the ‘net as a vehicle to transform comics into uniquely non-print artifacts with motion, interactivity, sound.

But how might comics mutate on the web? At the recent ITP Spring show I saw a digital comics project from Tracy Ann White’s class. The class asks the question: “What happens when comics evolve from print to screen? How does presentation change to suit this shift?” Sounds like familiar territory. White, a teacher at ITP, has been a long time web comic artist (one of the first on the web, and certainly one of the first to incorporate comments and forums as part of the product).

When I did a little research on her, I found an amazing article on Webcomics Review discussing the history of web comics. (There’s also more from White there.) There has been some brilliant work done, making use of scrolling as part of the “infinite canvas,” but more importantly, work that could have no print analog due to the incorporation of sound and motion. The discussion in Webcomics Review covers all of the transformative effects of online publishing that we talk about here at the Institute: interlinking, motion, sound, and more profoundly, the immediacy and participative aspects of the network. As an example, James Kochalka, well known for his Monkey vs. Robot comics and a simplistic cartoon style, publishes An American Elf. The four panel personal vignette is published daily: blogging with comics.

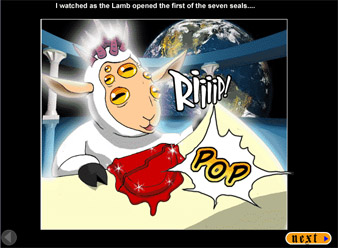

Other ground breaking work: Nowhere Girl by Justine Shaw, a long form graphic novel that proved that people will read lengthy comics online. Apocamon, by Patrick Farley, is a mash up of Pokemon and the Book of Revelation. There is a well known series of bible stories in comic strip format – this raises that tradition to the level of heavenly farce (with anime). Apocamon judiciously uses sound and minor animation effects to create a rich reading experience, but relies on pages, a mode immediately familiar to comic book readers. The comics on Magic Inkwell (Cayetano Garza) use music and motion graphics in a more experimental way. And in Broken Saints we find an example where comic conventions (words in a comic style font, speech bubbles, and sequential images) fade into cinema.

As new technology enables stylistic enhancements to web comics, the boundaries between comics and other media will become more blurred. White says, “In terms of pushing interactive storytelling online games are at the forefront.” This is true, but online games dispense with important conventions that make comics comics. The next step for online comics is to enhance their networked and collaborative aspect while preserving the essential nature of comics as sequential art.

a2k wrap-up

—Jack Balkin, from opening plenary

I’m back from the A2K conference. The conference focused on intellectual property regimes and international development issues associated with access to medical, health, science, and technology information. Many of the plenary panels dealt specifically with the international IP regime, currently enshrined in several treaties: WIPO, TRIPS, the Berne Convention, and a few more (more from Ray on those). But many others, instead of relying on the language in the treaties, focused on developing new language for advocacy, based on human rights: access to knowledge as an issue of justice and human dignity, not just an issue of intellectual property or infrastructure. The Institute is an advocate of open access, transparency, and sharing, so we have the same mentality as most of the participants, even if we choose to assail the status quo from a grassroots level, rather than the high halls of policy. Most of the discussions and presentations about international IP law were generally outside of the scope of our work, but many of the smaller panels dealt with issues that, for me, illuminated our work in a new light.

In the Peer Production and Education panel, two organizations caught my attention: Taking IT Global and the International Institute for Communication and Development (IICD). Taking IT Global is an international youth community site, notable for its success with cross-cultural projects, and for the fact that it has been translated into seven languages—by volunteers. The IICD trains trainers in Africa. These trainers then go on to help others learn the technological skills necessary to obtain basic information and to empower them to participate in creating information to share.

—Ronaldo Lemos

The ideology of empowerment ran thick in the plenary panels. Ronaldo Lemos, in the Political Economy of A2K, showed just how powerful communities outside the scope and target of traditional development can be. He talked about communities at the edge, the peripheries, that are using technology to transform cultural production. He dropped a few figures that staggered the crowd: last year Hollywood produced 611 films. But Nigeria, a country with only ONE movie theater (in the whole nation!) released 1200 films. How? No copyright law, inexpensive technology, and low budgets (to say the least). He also mentioned the music industry in Brazil, where cultural production through mainstream corporations is about 52 CDs of Brazilian artists in all genres. In the favelas they are releasing about 400 albums a year. It’s cheaper, and it’s what they want to hear (mostly baile funk).

We also heard the empowerment theme and A2K as “a demand of justice” from Jack Balkin, Yochai Benkler, Nagla Rizk, from Egypt, and from John Howkins, who framed the A2K movement as primarily an issue of freedom to be creative.

The panel on Wireless ICTs (and the accompanying wiki page) made it abundantly obvious that access isn’t only about IP law and treaties: it’s also about physical access, computing capacity, and training. This was a continuation of the Network Neutrality panel, and carried through later with a rousing presentation by Onno W. Purbo, on how he has been teaching people to “steal” the last mile infrastructure from the frequencies in the air.

Finally, I went to the Role of Libraries in A2K panel. The panelists spoke on several different topics which were familiar territory for us at the Institute: the role of commercialized information intermediaries (Google, Amazon), fair use exemptions for digital media (including video and audio), the need for Open Access (we only have 15% of peer-reviewed journals available openly), ways to advocate for increased access, better archiving, and enabling A2K in developing countries through libraries.

—The Adelphi Charter

The name of the movement, Access to Knowledge, was chosen because, at the highest levels of international politics, it was the one phrase that everyone supported and no one opposed. It is an undeniable umbrella movement, under which different channels of activism, across multiple disciplines, can marshal their strength. The panelists raised important issues about development and capacity, but with a focus on human rights, justice, and dignity through participation. It was challenging, but reinvigorating, to hear some of our own rhetoric at the Institute repeated in the context of this much larger movement. We at the Institute are concerned with the uses of technology whether that is in the US or internationally, and we’ll continue, in our own way, to embrace development with the goal of creating a future where technology serves to enable human dignity, creativity, and participation.

google scholar

Google announced a new change to Google Scholar to improve the results of a search. The results can now be ordered by a confluence of citations, date of publication, and keyword relevance, instead of just the latter. From the Official Google Blog:

It’s not just a plain sort by date, but rather we try to rank recent papers the way researchers do, by looking at the prominence of the author’s and journal’s previous papers, how many citations it already has, when it was written, and so on. Look for the new link on the upper right for “Recent articles” — or switch to “All articles” for the full list.

Another feature, which I wasn’t aware of, is the “group of X”, located just at the end of the line. It points to papers that are very similar in topic. Researchers can use this feature to delve deeper into a topic, as opposed to skipping across the surface of a topic. This reflects the deep user-centered thinking that went into the design of the results, which is broken down in more detail here.

Though many professors lament the use of Google as students’ first and last research resource, the continual improvements of Google Scholar and the Google Book project (when combined with access rights afforded by a university library) provide an increasingly potent research environment. Google Scholar, by displaying the citation count, provides a significant piece of secondary data that improves decision making dramatically compared to unguided topic searches in the library. By selecting uncredited quotations and searching for them in the Google Book project, students can get information on the primary text, read a little of the additional context, and decide whether or not to procure the book from the library. I feel like I’m overselling Google, but my real point has nothing to do with any specific corporation. The real point is: in the future, all the value is in the network.

wealth of networks

I was lucky enough to have a chance to be at The Wealth of Networks: How Social Production Transforms Markets and Freedom book launch at Eyebeam in NYC last week. After a short introduction by Jonah Peretti, Yochai Benkler got up and gave us his presentation. The talk was really interesting, covering the basic ideas in his book and delivered with the energy and clarity of a true believer. We are, he says, in a transitional period, during which we have the opportunity to shape our information culture and policies, and thereby the future of our society. From the introduction:

This book is offered, then, as a challenge to contemporary legal democracies. We are in the midst of a technological, economic and organizational transformation that allows us to renegotiate the terms of freedom, justice, and productivity in the information society. How we shall live in this new environment will in some significant measure depend on policy choices that we make over the next decade or so. To be able to understand these choices, to be able to make them well, we must recognize that they are part of what is fundamentally a social and political choice—a choice about how to be free, equal, productive human beings under a new set of technological and economic conditions.

During the talk Benkler claimed an optimism for the future, with full faith in the strength of individuals and loose networks to increasingly contribute to our culture and, in certain areas, replace the moneyed interests that exist now. This is the long-held promise of the Internet, open-source technology, and the information commons. But what I’m looking forward to, treated at length in his book, is the analysis of the struggle between the contemporary economic and political structure and the unstructured groups enabled by technology. In one corner there is the system of markets in which individuals, government, mass media, and corporations currently try to control various parts of our cultural galaxy. In the other corner there are individuals, non-profits, and social networks sharing with each other through non-market transactions, motivated by uniquely human emotions (community, self-gratification, etc.) rather than profit. Benkler’s claim is that current and future technologies enable richer non-market, public good oriented development of intellectual and cultural products. He also claims that this does not preclude the development of marketable products from these public ideas. In fact, he sees an economic incentive for corporations to support and contribute to the open-source/non-profit sphere. He points to IBM’s Global Services division: the largest part of IBM’s income is based off of consulting fees collected from services related to open-source software implementations. [I have not verified whether this is an accurate portrayal of IBM’s Global Services, but this article suggests that it is. Anecdotally, as a former IBM co-op, I can say that Benkler’s idea has been widely adopted within the organization.]

Further discussion of the book will have to wait until I’ve read more of it. As an interesting addition, Benkler put up a wiki to accompany his book. Kathleen Fitzpatrick has just posted about this. She brings up a valid criticism of the wiki: why isn’t the text of the book included on the page? Yes, you can download the pdf, but the texts are in essentially the same environment, yet they are not together. This is one of the things we were trying to overcome with the Gamer Theory design. This separation highlights a larger issue, and one that we are preoccupied with at the institute: how can we shape technology to allow us to handle text collaboratively and socially, yet still maintain an author’s unique voice?

G4M3R 7H30RY: part 4

We’ve moved past the design stage with the GAM3R 7H30RY blog and forum. We’re releasing the book in two formats: all at once (date to be decided soon), in the page card format, and through RSS syndication. We’re collecting user input and feedback in two ways: from comments submitted through the page-card interface, and in user posts in the forum.

The idea is to nest Ken’s work in the social network that surrounds it, made visible in the number of comments and topics posted. This accomplishes something fairly radical, shifting the focus from an author’s work towards the contributions of a work’s readers. The integration between the blog and forums, and the position of the comments in relation to the author’s work emphasizes this shift. We’re hoping that the use of color as an integrating device will further collapse the usual distance between the author and his reading (and writing) public.

To review all the stages that this project has been through before it arrived at this, check out Part I, Part II, and Part III. The design changes show the evolution of our thought and the recognition of the different problems we were facing: screen real estate, reading environment, maintaining the author’s voice but introducing the public, and making it fun. The basic interaction design emerged from those constraints. The page card concept arose from both the form of Ken’s book—a regimented number of paragraphs with limited length—and the constraints of screen real estate (1024×768). The overlapping arose from the physical handling of the ‘Oblique Strategies’ cards, and helps to present all the information on a single screen. The count of pages (five per section, five sections per chapter) is a further expression of the structure that Ken wrote into the book. Comments were lifted from their usual inglorious spot under the writer’s post to be right beside the work. It lends them some additional weight.

We’ve also reimagined the entry point for the forums with the topic pool. It provides a dynamic view of the forums, raising the traditional list into the realm of something energetic, more accurately reflecting the feeling of live conversation. It also helps clarify the direction of the topic discussion with a first post/last post view (visible in the mouseover state below). This simple preview will let users know whether or not a discussion has kept tightly to the subject or spun out of control into trivialities.

We’ve been careful with the niceties: the forum indicator bars turned on their sides to resemble video game power ups; the top of the comments sitting at the same height as the top of their associated page card; the icons representing comments and replies (thanks to famfamfam).

Each of the designed pages changed several times. The page cards have been the most drastically and frequently changed, but the home page went through a significant series of edits in a matter of a few days. As with many things related to design, I took several missteps before alighting on something which seems, in retrospect, perfectly obvious. Although the ‘table of contents’ is traditionally an integrated part of a bound volume, I tried (and failed) to create a different alignment and layout with it. I’m not sure why; it seemed like a good idea at the time. I also wanted to include a hint of the pages to come; unfortunately it just made it difficult for your eye to move smoothly across the page. Finally I settled on a simpler concept, one that harmonized with the other layouts, and it all snapped into place.

With that we began the production stage, and we’re making it all real. Next update will be a pre-launch announcement.

corporate creep

A short article in the New York Times (Friday March 31, 2006, pg. A11) reported that the Smithsonian Institution has made a deal with Showtime in the interest of gaining an “active partner in developing and distributing [documentaries and short films].” The deal creates Smithsonian Networks, which will produce documentaries and short films to be released on an on-demand cable channel. Smithsonian Networks retains the right of first refusal to “commercial documentaries that rely heavily on Smithsonian collection or staff.” Ostensibly, this means that interviews with top personnel on broad topics are OK, but it may be difficult to get access to the paleobotanist to discuss the Mesozoic era. The most troubling part of this deal is that it extends to the Smithsonian’s collections as well. Tom Hayden, general manager of Smithsonian Networks, said the “collections will continue to be open to researchers and makers of educational documentaries.” So at least they are not trying to shut down educational uses of these public cultural and scientific artifacts.

Except they are. The right of first refusal essentially takes the public institution and artifacts off the shelf, to be doled out only on approval. “A filmmaker who does not agree to grant Smithsonian Networks the rights to the film could be denied access to the Smithsonian’s public collections and experts.” Additionally, the qualifications for access are ill-defined: if you are making a commercial film, which may also be a rich educational resource, well, who knows if they’ll let you in. This is a blatant example of the corporatization of our public culture, and one that frankly seems hard to comprehend. From the Smithsonian’s mission statement:

The Smithsonian is committed to enlarging our shared understanding of the mosaic that is our national identity by providing authoritative experiences that connect us to our history and our heritage as Americans and to promoting innovation, research and discovery in science.

Hayden stated the reason for forming Smithsonian Networks is to “provide filmmakers with an attractive platform on which to display their work.” Yet, it was clearly stated by Linda St. Thomas, a spokeswoman for the Smithsonian, “if you are doing a one-hour program on forensic anthropology and the history of human bones, that would be competing with ourselves, because that is the kind of program we will be doing with Showtime On Demand.” Filmmakers are not happy, and this seems like the opposite of “enlarging our shared understanding.” It must have been quite a coup for Showtime to end up with stewardship of one of America’s treasured archives.

The application of corporate control over public resources follows the long-running trend towards privatization that began in the 80’s. Privatization assumes that the market, measured by profit and share price, provides an accurate barometer of success. But the corporate mentality towards profit doesn’t necessarily serve the best interest of the public. In “Censoring Culture: Contemporary Threats to Free Expression” (New Press, 2006), an essay by André Schiffrin outlines the effects that market orientation has had on the publishing industry:

As one publishing house after another has been taken over by conglomerates, the owners insist that their new book arm bring in the kind of revenue their newspapers, cable television networks, and films do….

To meet these new expectations, publishers drastically change the nature of what they publish. In a recent article, the New York Times focused on the degree to which large film companies are now putting out books through their publishing subsidiaries, so as to cash in on movie tie-ins.

The big publishing houses have edged away from variety and moved towards best-sellers. Books, traditionally the movers of big ideas (not necessarily profitable ones), have been homogenized. It’s likely that what comes out of the Smithsonian Networks will have high production values. This is definitely a good thing. But it also seems likely that the burden of the bottom line will inevitably drag the films down from a public education role to that of entertainment. The agreement may keep some independent documentaries from being created; at the very least it will have a chilling effect on the production of new films. But in a way it’s understandable. This deal comes at a time of financial hardship for the Smithsonian. I’m not sure why the Smithsonian didn’t try to work out some other method of revenue sharing with filmmakers, but I am sure that Showtime is underwriting a good part of this venture with the Smithsonian. The rest, of course, is coming from taxpayers. By some twist of profiteering logic, we are paying twice: once to have our resources taken away, and then again to have them delivered, on demand. Ironic. Painfully, heartbreakingly so.

if not rdf, then what?: part II

I had an exchange about my previous post with an RDF expert who explained to me that API’s are not like RDF and it would be incorrect to try to equate them. She’s right – API’s do not replace the need for RDF, nor do they replicate the functionality of RDF. API’s do provide access to data, but that data can be in many forms, including XML-bound RDF. This is one of the pleasures and privileges of writing on this blog: the audience contributes at a very high level of discourse, and is endowed with extremely deep knowledge about the topics under discussion.

I want to reiterate my point with a new inflection. By suggesting that API’s were an alternative to RDF, I was trying to get at a point that had more to do with adoption than functionality. I admit, I did not make the point well. So let me make a second attempt: API’s are about data access, and that, currently (and from my anecdotal experience) is where the value proposition lies for the new breed of web services. You have your data in someone’s database. That data is accessible to developers to manipulate and represent back to you in new, innovative, and useful ways. Most of the attention in the webdev community is turning towards the development of new interfaces—not towards the development of new tools to manage and enrich the data (again, anecdotal evidence only). Yes, there are people still interested in semantic data; we are indebted to them for continuing to improve the way our systems interact at a data level. But the focus of development has shifted to the interface. API’s make the gathering of data as simple as setting parameters, leaving only the work of designing the front-end experience.
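To make "as simple as setting parameters" concrete, here is a minimal sketch in Python. The endpoint (api.example.com) and the parameter names (tag, per_page, format) are made up for illustration and do not belong to any real service; the point is only that the whole data request is described by a handful of key/value pairs.

```python
from urllib.parse import urlencode

# Hypothetical endpoint and parameter names, for illustration only.
BASE_URL = "https://api.example.com/photos"


def build_request_url(base_url, **params):
    """Return the URL for a data request described entirely by its parameters."""
    # Sort the parameters so the same request always yields the same URL.
    query = urlencode(sorted(params.items()))
    return f"{base_url}?{query}"


# "Gathering the data" amounts to choosing values for a few parameters.
url = build_request_url(BASE_URL, tag="infographics", per_page=10, format="xml")
print(url)
```

Everything after this step (fetching the URL, parsing the response, presenting it) is front-end work, which is where I see the attention going.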

Another note on RDF from my exchange: it was pointed out that practitioners of RDF prefer not to read it in XML, but instead use Notation 3 (N3), which is undeniably easier to read than XML. I don’t know enough about N3 to make a proper example, but I think you can get the idea if you look at the examples here and here.
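For a rough sense of the difference, the sketch below writes one and the same statement in both notations. It is an illustration under assumptions, not a proper RDF toolchain: the subject URL is invented, the two helper functions are hypothetical string formatters of my own, and the only real vocabulary used is the Dublin Core "title" property.

```python
from xml.sax.saxutils import escape, quoteattr

# Hypothetical statement: a made-up post URL and its title.
SUBJECT = "http://example.org/posts/42"
DC_NS = "http://purl.org/dc/elements/1.1/"  # Dublin Core element set namespace
TITLE = "if not rdf, then what?"


def as_rdf_xml(subject, title):
    """Format the statement 'subject has title' as an RDF/XML document."""
    return (
        '<rdf:RDF xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#"\n'
        f'         xmlns:dc={quoteattr(DC_NS)}>\n'
        f'  <rdf:Description rdf:about={quoteattr(subject)}>\n'
        f'    <dc:title>{escape(title)}</dc:title>\n'
        '  </rdf:Description>\n'
        '</rdf:RDF>'
    )


def as_n3(subject, title):
    """Format the same statement in N3: a prefix line plus one triple."""
    # Escape backslashes and double quotes inside the string literal.
    literal = title.replace("\\", "\\\\").replace('"', '\\"')
    return (
        f'@prefix dc: <{DC_NS}> .\n'
        f'<{subject}> dc:title "{literal}" .'
    )


rdf_xml = as_rdf_xml(SUBJECT, TITLE)
n3 = as_n3(SUBJECT, TITLE)
print(rdf_xml)
print()
print(n3)
```

The N3 version reads almost like a sentence (subject, property, value, full stop), while the XML version wraps the same three pieces in nested elements and namespace declarations.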

sharing goes mainstream

Ben’s post on the Google book project mentioned a fundamental tenet of the Institute: the network is the environment for the future of reading and writing, and that’s why we care about network-related issues. While the goal of the network isn’t reducible to a single purpose, if you could say it was any one thing it would be: sharing. It’s why Tim Berners-Lee created it in the first place: to share scientific research. It’s why people put their lives on blogs, their photos on flickr, their movies on YouTube. And it is why the people who want to sell things are so anxious about putting their goods online. The bottom line is this: the ‘net is about sharing, that’s what it’s for.

Time magazine had an article in the March 20th issue on open-source and innovation-at-the-edges (by Lev Grossman). Those aren’t new ideas around the office, but when I saw the phrase the “authorship of innovation is shifting from the Few to the Many” I realized that, for the larger public, they are still slightly foreign, that the distant intellectual altruism of the Enlightenment is being recast as the open-source movement, and that the notion of an intellectual commons is being rejuvenated in the public consciousness. True, Grossman puts out the idea of shared innovation as a curiosity (it’s a testament to the momentum of our contemporary notions of copyright that the cultural environment is antagonistic to giving away ideas), but I applaud any injection of the open-source ideal into the mainstream. Especially ideas like this:

Admittedly, it’s counterintuitive: until now the value of a piece of intellectual property has been defined by how few people possess it. In the future the value will be defined by how many people possess it.

I hope the article will seed the public mind with intimations of a world where the benefits of intellectual openness and sharing are assumed, rather than challenged.

Raising the public consciousness around issues of openness and sharing is one of the goals of the Institute. We’re happy to have help from a magazine with Time’s circulation, but most of all, I’m happy that the article is turning public attention in the direction of an open network, shared content, and a rich digital commons.