In Ben’s recent post, he noted that Larry Lessig worries about the trend toward a read-only internet, the harbinger of which is iTunes. Apple’s latest (academic) venture is iTunes U, a project begun at Duke and piloted by seven universities — Stanford, it appears, has been most active.

Since they are looking for a large-scale rollout of iTunes U for 2006-07, and since we have many podcasting faculty here at USC, a group of us met with Apple reps yesterday.

Initially I was very skeptical about Apple’s further insinuation into the academy, and yet what iTunes U offers is a repository for instructors to store podcasts, with several components similar to courseware such as Blackboard. Apple stores the content on its servers, but the university retains ownership. The service is fairly customizable: you can store audio, video with audio, slides with audio (a.k.a. enhanced podcasts) and text (but only as PDF). Then you populate the class via university course rosters, which are password-protected.

There are also open-access levels on which the university (or, say, the alumni association) can add podcasts or vodcasts of events. And it is free. At least for now: the rep got a little cagey when asked how long this would be the case.

The point is to allow students to capture lectures and such on their iPods (or other MP3 players) for the purposes of study and review. The rationale is that students are already extremely familiar with the technology, so there is less of a learning curve (well, at least privileged students such as those at my institution are familiar with it).

What seems particularly interesting is that students can then either speed up a recorded lecture without changing its pitch (and lord knows there are some whose speaking I would love to accelerate) or, say, in the case of an ESL student, slow it down for better comprehension. Finally, there is space for students to upload their own work; podcasting has already been assigned to some of our students.

Part of me is concerned about further academic incorporation, but a lot more parts of me think this is not only a chance to help less tech-savvy profs employ the technology (the ease of collecting and distributing assets is germane here) but also a way of really pushing the envelope in terms of copyright, educational use, fair use, etc. Initially, Apple wants to use only materials that are in the public domain or under Creative Commons licenses, but some of the muddier digital-use issues will undoubtedly arise, and it would be nice to have academics involved in the process.

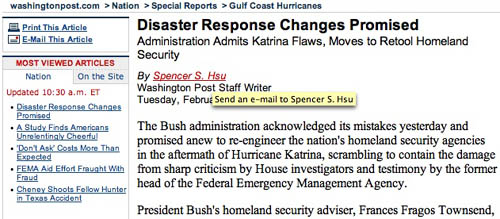

washington post and new york times hyperlink bylines

In an effort to more directly engage readers, two of America’s most august daily newspapers are adding a subtle but potentially significant feature to their websites: author bylines directly linked to email forms. The Post’s links are already active, but as of this writing the Times, which is supposedly kicking off the experiment today, only links to other articles by the same reporter. They may end up implementing this in a different way.

screen grab from today’s Post

The email trial comes on the heels of two notoriously failed experiments by elite papers to pull readers into conversation: the LA Times’ precipitous closure, after an initial 24-hour flood of obscenities and vandalism, of its “wikitorials” page, which invited readers to rewrite editorials alongside the official versions; and more recently, the Washington Post’s shutting down of comments on its “post.blog” after experiencing a barrage of reader hate mail. The common thread? An aversion to floods, barrages, or any high-volume influx of unpredictable reader response. The email features, which presumably are moderated, seem to be the realistic compromise, favoring the trickle over the deluge.

In a way, though, hyperlinking bylines is a more profound development than the higher profile experiments that came before, which were more transparently about jumping aboard the wiki/blog bandwagon without bothering to think through the implications, or taking the time — as successful blogs and wikis must always do — to gradually build up an invested community of readers who will share the burden of moderating the discussion and keeping things reasonably clean. They wanted instant blog, instant wiki. But online social spaces are bottom-up enterprises: invite people into your home without any preexisting social bonds and shared values — and add to that the easy target of being a mass media goliath — and your home will inevitably get trashed as soon as word gets out.

Being able to email reporters, however, gets more at the root of the widely perceived credibility problem of newspapers, which have long strived to keep the human element safely insulated behind an objective tone of voice. It’s certainly not the first time reporters’ or columnists’ email addresses have been made available, but usually they get tucked away toward the bottom. Having the name highlighted directly beneath the headline — making the reporter an interactive feature of the article — is more genuinely innovative than any tacked-on blog because it places an expectation on the writers as well as the readers. Some reporters will likely treat it as an annoying new constraint, relying on polite auto-reply messages to maintain a buffer between themselves and the public. Others may choose to engage, and that could be interesting.

can there be a compromise on copyright?

The following is a response to a comment made by Karen Schneider on my Monday post on libraries and DRM. I originally wrote this as just another comment, but as you can see, it’s kind of taken on a life of its own. At any rate, it seemed to make sense to give it its own space, if for no other reason than that it temporarily sidelined something else I was writing for today. It also has a few good quotes that might be of interest. So, Karen said:

I would turn back to you and ask how authors and publishers can continue to be compensated for their work if a library that would buy ten copies of a book could now buy one. I’m not being reactive, just asking the question–as a librarian, and as a writer.

This is a big question, perhaps the biggest, since economics will define the parameters of much that is being discussed here. How do we move from an old economy of knowledge based on the trafficking of intellectual commodities to a new economy where value is placed not on individual copies of things that, as a result of new technologies, are effortlessly copyable, but rather on access to networks of content and the quality of those networks? The question is brought into particularly stark relief when we talk about libraries, which (correct me if I’m wrong) have always been more concerned with the pure pursuit and dissemination of knowledge than with the economics of publishing.

Consider, as an example, the photocopier — in many ways a predecessor of the world wide web in that it is designed to deconstruct and multiply documents. Photocopiers have been unbundling books in libraries long before there was any such thing as Google Book Search, helping users break through the commodified shell to get at the fruit within.

I know there are some countries in Europe that funnel a share of proceeds from library photocopiers back to the publishers, and this seems to be a reasonably fair compromise. But the role of the photocopier in most libraries of the world is more subversive, gently repudiating, with its low hum, sweeping light, and clackety trays, the idea that there can really be such a thing as intellectual property.

That being said, few would dispute the right of an author to benefit economically from his or her intellectual labor; we just have to ask whether the current system is really serving the authors’ interest, let alone the public interest. New technologies have released intellectual works from the restraints of tangible property, making them easily accessible, eminently exchangeable and never out of print. This should, in principle, elicit a hallelujah from authors, or at least from the many who have written works that, while possessed of intrinsic value, have not succeeded in their role as commodities.

But utopian visions of an intellectual gift economy will ultimately fail to nourish writers who must survive in the here and now of a commercial market. Though peer-to-peer gift economies might turn out in the long run to be financially lucrative, and in unexpected ways, we can’t realistically expect everyone to hold their breath and wait for that to happen. So we find ourselves at a crossroads where we must soon choose as a society either to clamp down (to preserve existing business models), liberalize (to clear the field for new ones), or compromise.

In her essay “Books in Time,” Berkeley historian Carla Hesse gives a wonderful overview of a similar debate over intellectual property that took place in 18th-century France, when liberal-minded philosophes, most notably Condorcet, railed against the state-sanctioned Paris printing monopolies, demanding universal access to knowledge for all humanity. To Condorcet, freedom of the press meant not only freedom from censorship but freedom from commerce, since ideas arise not from men but through men from nature (how can you sell something that is universally owned?). Things finally settled down in France after the revolution, and the country (and the West) embarked on a historic compromise that laid the foundations for what Hesse calls “the modern literary system”:

The modern “civilization of the book” that emerged from the democratic revolutions of the eighteenth century was in effect a regulatory compromise among competing social ideals: the notion of the right-bearing and accountable individual author, the value of democratic access to useful knowledge, and faith in free market competition as the most effective mechanism of public exchange.

Barriers to knowledge were lowered. A system of limited intellectual property rights was put in place that incentivized production and elevated the status of writers. And by and large, the world of ideas flourished within a commercial market. But the question remains: can we reach an equivalent compromise today? And if so, what would it look like?

Creative Commons has begun to nibble around the edges of the problem, but love it as we may, it does not fundamentally alter the status quo, focusing as it does primarily on giving creators more options within the existing copyright system.

Which is why free software guru Richard Stallman announced in an interview the other day his unqualified opposition to the Creative Commons movement, explaining that while some of its licenses meet the standards of open source, others are overly conservative, rendering the project bunk as a whole. For Stallman, ever the iconoclast, it’s all or nothing.

But returning to our theme of compromise, I’m struck again by this idea of a tax on photocopiers, which suggests a kind of micro-economy where payments are made automatically and seamlessly in proportion to a work’s use. Someone who has done a great deal of thinking about such a solution (though on a much more ambitious scale than library photocopiers) is Terry Fisher, an intellectual property scholar at Harvard who has written extensively on practicable alternative copyright models for the music and film industries (Ray and I first encountered Fisher’s work when we heard him speak at the Economics of Open Content Symposium at MIT last month).

The following is an excerpt from Fisher’s 2004 book, “Promises to Keep: Technology, Law, and the Future of Entertainment”, that paints a relatively detailed picture of what one alternative copyright scheme might look like. It’s a bit long, and as I mentioned, deals specifically with the recording and movie industries, but it’s worth reading in light of this discussion since it seems it could just as easily apply to electronic books:

….we should consider a fundamental change in approach…. replace major portions of the copyright and encryption-reinforcement models with a variant of….a governmentally administered reward system. In brief, here’s how such a system would work. A creator who wished to collect revenue when his or her song or film was heard or watched would register it with the Copyright Office. With registration would come a unique file name, which would be used to track transmissions of digital copies of the work. The government would raise, through taxes, sufficient money to compensate registrants for making their works available to the public. Using techniques pioneered by American and European performing rights organizations and television rating services, a government agency would estimate the frequency with which each song and film was heard or watched by consumers. Each registrant would then periodically be paid by the agency a share of the tax revenues proportional to the relative popularity of his or her creation. Once this system were in place, we would modify copyright law to eliminate most of the current prohibitions on unauthorized reproduction, distribution, adaptation, and performance of audio and video recordings. Music and films would thus be readily available, legally, for free.

Painting with a very broad brush…., here would be the advantages of such a system. Consumers would pay less for more entertainment. Artists would be fairly compensated. The set of artists who made their creations available to the world at large–and consequently the range of entertainment products available to consumers–would increase. Musicians would be less dependent on record companies, and filmmakers would be less dependent on studios, for the distribution of their creations. Both consumers and artists would enjoy greater freedom to modify and redistribute audio and video recordings. Although the prices of consumer electronic equipment and broadband access would increase somewhat, demand for them would rise, thus benefiting the suppliers of those goods and services. Finally, society at large would benefit from a sharp reduction in litigation and other transaction costs.

While I’m uncomfortable with the idea of any top-down, governmental solution, this certainly provides food for thought.
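Fisher’s excerpt states its payout rule only in words: each registrant is paid a share of the pooled tax revenue proportional to the relative popularity of his or her work. The Python sketch below illustrates that arithmetic and nothing more. It assumes popularity has already been estimated as a count of plays per registered work. The function name `distribute_pool`, the work identifiers, the use of whole cents, and the largest-remainder rounding (which makes payouts sum exactly to the pool) are illustrative assumptions, not details of Fisher’s proposal.

```python
from fractions import Fraction


def distribute_pool(pool_cents: int, plays: dict[str, int]) -> dict[str, int]:
    """Split a revenue pool among registered works in proportion to estimated plays.

    Hypothetical helper: shares are computed exactly with Fraction, floored to
    whole cents, and any leftover cents go to the works with the largest
    fractional remainders, so the payouts always sum to the pool.
    """
    total = sum(plays.values())
    if total == 0:
        # With no recorded plays there is no basis for a split, so every work gets zero.
        return {work: 0 for work in plays}

    # Exact proportional share of the pool for each work.
    exact = {work: Fraction(pool_cents * n, total) for work, n in plays.items()}
    # Round every share down to whole cents (int() floors non-negative Fractions).
    payouts = {work: int(share) for work, share in exact.items()}

    # Hand out the cents lost to rounding, largest fractional remainder first.
    leftover = pool_cents - sum(payouts.values())
    by_remainder = sorted(plays, key=lambda w: exact[w] - payouts[w], reverse=True)
    for work in by_remainder[:leftover]:
        payouts[work] += 1
    return payouts


if __name__ == "__main__":
    estimated_plays = {"song_a": 600, "song_b": 300, "film_c": 100}
    print(distribute_pool(10_000, estimated_plays))
```

For example, a 10,000-cent pool split among works with 600, 300 and 100 estimated plays pays out 6,000, 3,000 and 1,000 cents. The hard part of Fisher’s scheme is estimating those play counts accurately. Once the counts exist, dividing the money is simple.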

harpercollins half-heartedly puts a book online

As noted in The New York Times, HarperCollins has put the text of Bruce Judson’s Go It Alone: The Secret to Building a Successful Business on Your Own online; ostensibly this is a pilot for more books to come.

HarperCollins isn’t doing this out of the goodness of its heart: it’s an ad-supported project. Every page of the book (it’s paginated in exactly the same way as the print edition) bears five Google ads, a banner ad, and a prominent link to buy the book at Amazon. Visiting Amazon suggests other motives for HarperCollins’s experiment: new copies are selling for $5.95 and there are no reader reviews of the book, suggesting that, despite what the press would have you believe, Judson’s book hasn’t attracted much attention in print format. Putting it online might be less a brave pilot program than an attempt to staunch the losses on a failed book.

Certainly HarperCollins hasn’t gone to a great deal of trouble to make the project look nice. As mentioned, the pagination is exactly the same as the print version; that means that you get pages like this, which start mid-sentence and end mid-sentence. While this is exactly what print books do, it’s more of a problem on the web: with so much extraneous material around it, it’s more difficult for the reader to remember where they were. It wouldn’t have been that hard to rebreak the book: on page 8, they could have left the first line on the previous page with the paragraph it belongs to while moving the last line to the next page.

It is useful to have a book that can be searched by Google. One suspects, however, that Google would have done a better job with this.

the commissar vanishes

The Lowell Sun reports that staff members of Representative Marty Meehan (Democrat, Massachusetts) have been found editing the representative’s Wikipedia entry. As has been noted in a number of places (see, for example, this Slashdot discussion), Meehan’s staff edited out references to his campaign promise to leave the House after eight years, among other things, and considerably brightened the picture of Meehan painted by his biography there.

Meehan’s staff editing the Wikipedia doesn’t appear to be illegal, as far as I can tell, even if they’re trying to distort his record. It does thrust some issues about how Wikipedia works into the spotlight, much as Beppe Grillo did in Italy last week. Sunlight disinfects; this has brought up the problem of political vandalism stemming from Washington, and Wikipedia has taken the step of banning edits from all IP addresses belonging to Congress while it tries to figure out what to do about it: see the discussion here.

This is the sort of problem that was bound to come up with Wikipedia: it will be interesting to see how they attempt to surmount it. In a broad sense, trying to forcibly stop political vandalism is as much of a political statement as anything anyone in the Capitol could write. Something in me recoils from the idea of the Wikipedia banning people from editing it, even if they are politicians. The most useful contribution of the Wikipedia isn’t its networked search for a neutral portrait of truth, for this will always be flawed; it’s the idea that the truth is inherently in flux. Just as we should approach the mass media with an incredulous eye, we should approach Wikipedia with an incredulous eye. With Wikipedia, however, we know that we need to, and this is an advance.

what I heard at MIT

Over the next few days I’ll be sifting through notes, links, and assorted epiphanies crumpled up in my pocket from two packed, and at times profound, days at the Economics of Open Content symposium, hosted in Cambridge, MA by Intelligent Television and MIT OpenCourseWare. For now, here are some initial impressions: things I heard, both spoken in the room and ricocheting inside my head during and since. An oral history of the conference? Not exactly. More an attempt to jog the memory. Hopefully, though, something coherent will come across. I’ll pick up some of these threads in greater detail over the next few days. I should add that this post owes a substantial debt in form to Eliot Weinberger’s “What I Heard in Iraq” series (here and here).

Naturally, I heard a lot about “open content.”

I heard that there are two kinds of “open.” Open as in open access: to knowledge, archives, medical information, etc. (like Public Library of Science or Project Gutenberg). And open as in open process: work that is out in the open, open to input, even open-ended (like Linux, Wikipedia or our experiment with Mitch Stephens, Without Gods).

I heard that “content” is actually a demeaning term, treating works of authorship as filler for slots — a commodity as opposed to a public good.

I heard that open content is not necessarily the same as free content. Both can be part of a business model, but the defining difference is control — open content is often still controlled content.

I heard that for “open” to win real user investment that will feed back into innovation and even result in profit, it has to be really open, not sort of open. Otherwise “open” will always be a burden.

I heard that if you build the open-access resources and demonstrate their value, the money will come later.

I heard that content should be given away for free and that the money is to be made talking about the content.

I heard that reputation and an audience are the most valuable currency anyway.

I heard that the academy’s core mission — education, research and public service — makes it a moral imperative to have all scholarly knowledge fully accessible to the public.

I heard that if knowledge is not made widely available and usable then its status as knowledge is in question.

I heard that libraries may become the digital publishing centers of tomorrow through simple, open-access platforms, overhauling the print journal system and redefining how scholarship is disseminated throughout the world.

And I heard a lot about copyright…

I heard that probably about 50% of the production budget of an average documentary film goes toward rights clearances.

I heard that many of those clearances are for “underlying” rights to third-party materials appearing in the background or reproduced within reproduced footage. I heard that these are often things like incidental images, video or sound; or corporate logos or facades of buildings that happen to be caught on film.

I heard that there is basically no “fair use” space carved out for visual and aural media.

I heard that this all but paralyzes our ability as a culture to fully examine ourselves in terms of the media that surround us.

I heard that the various alternative copyright movements are not necessarily all pulling in the same direction.

I heard that there is an “interoperability” problem between alternative licensing schemes; for instance, Wikipedia’s GNU Free Documentation License is not interoperable with any Creative Commons license.

I heard that since the mass-market content industries have such tremendous influence on policy, a significant extension of existing copyright laws (in the United States, at least) is likely in the near future.

I heard one person go so far as to call this a “totalitarian” intellectual property regime — a police state for content.

I heard that one possible benefit of this extension would be a general improvement of internet content distribution, and possibly greater freedom for creators to independently sell their work since they would have greater control over the flow of digital copies and be less reliant on infrastructure that today only big companies can provide.

I heard that another possible benefit of such control would be price discrimination, i.e. a graduated pricing scale for content varying according to the means of individual consumers, which could result in fairer prices. Basically, a graduated cultural consumption tax imposed by media conglomerates.

I heard, however, that such a system would be possible only through a substantial invasion of users’ privacy: tracking users’ consumption patterns in other markets (right down to their local grocery store), pinpointing users’ geographical locations and analyzing their socioeconomic status.

I heard that this degree of control could be achieved only through persistent surveillance of the flow of content through codes and controls embedded in files, software and hardware.

I heard that such a wholesale compromise on privacy is all but inevitable — is in fact already happening.

I heard that in an “information economy,” user data is a major asset of companies — an asset that, like financial or physical property assets, can be liquidated, traded or sold to other companies in the event of bankruptcy, merger or acquisition.

I heard that within such an over-extended (and personally intrusive) copyright system, there would still exist the possibility of less restrictive alternatives — e.g. a peer-to-peer content cooperative where, for a single low fee, one can exchange and consume content without restriction; money is then distributed to content creators in proportion to the demand for and use of their content.

I heard that such an alternative could theoretically be implemented on the state level, with every citizen paying a single low tax (less than $10 per year) giving them unfettered access to all published media, and easily maintaining the profit margins of media industries.

I heard that, while such a scheme is highly unlikely to be implemented in the United States, a similar proposal is in early stages of debate in the French parliament.

And I heard a lot about peer-to-peer…

I heard that p2p is not just a way to exchange files or information; it is a paradigm shift that is totally changing the way societies communicate, trade, and build.

I heard that between 1840 and 1850 the first newspapers appeared in America that could be said to have mass circulation. I heard that as a result, in the space of that single decade, the cost of starting a print daily rose approximately 250%.

I heard that modern democracies have basically always existed within a mass media system, a system that goes hand in hand with a centralized, mass-market capital structure.

I heard that we are now moving into a radically decentralized capital structure based on social modes of production in a peer-to-peer information commons, in what is essentially a new chapter for democratic societies.

I heard that the public sphere will never be the same again.

I heard that emerging practices of “remix culture” are in an apprentice stage focused on popular entertainment, but will soon begin manifesting in higher stakes arenas (as suggested by politically charged works like “The French Democracy” or this latest Black Lantern video about the Stanley Williams execution in California).

I heard that in a networked information commons the potential for political critique, free inquiry, and citizen action will be greatly increased.

I heard that whether we will live up to our potential is far from clear.

I heard that there is a battle over pipes, the outcome of which could have huge consequences for the health and wealth of p2p.

I heard that since the telecom monopolies have such tremendous influence on policy, a radical deregulation of physical network infrastructure is likely in the near future.

I heard that this will entrench those monopolies, shifting the balance of the internet to consumption rather than production.

I heard this is because pre-p2p business models see one-way distribution with maximum control over individual copies, downloads and streams as the most profitable way to move content.

I heard also that policing works most effectively through top-down control over broadband.

I heard that the Chinese can attest to this.

I heard that what we need is an open spectrum commons, where connections to the network are as distributed, decentralized, and collaboratively load-sharing as the network itself.

I heard that there is nothing sacred about a business model — that it is totally dependent on capital structures, which are constantly changing throughout history.

I heard that history is shifting in a big way.

I heard it is shifting to p2p.

I heard this is the most powerful mechanism for distributing material and intellectual wealth the world has ever seen.

I heard, however, that old business models will be radically clung to, as though they are sacred.

I heard that this will be painful.

wikipedia as civic duty

Number 14 on Technorati’s list of the most popular blogs is Beppe Grillo. Who’s Beppe Grillo? He’s an immensely popular Italian political satirist, roughly the Italian Jon Stewart. Grillo has been hell-bent on exposing corruption in Italy’s political system and has emerged as a major force in the ongoing elections there. While he happily and effectively skewers just about everybody involved in the Italian political process, Dario Fo, currently running for mayor of Milan under the refreshing slogan “I am not a moderate,” manages to receive Grillo’s endorsement.

Grillo’s use of new media makes sense: he has effectively been banned from Italian television. While he performs around the country, his blog, which is also offered in an English version just as deadpan and full of bold-faced phrases as the Italian, has become one of his major vehicles. It’s proven astonishingly popular, as his Technorati ranking reveals.

His latest post (in English or Italian) is particularly interesting. (It’s also drawn a great deal of debate: note the 1044 comments – at this writing – on the Italian version.) Grillo’s been pushing the Wikipedia for a while; here, he suggests to his public that they should, in the name of transparency, have a go at revising the Wikipedia entry on Silvio Berlusconi.

Berlusconi is an apt target. He is, of course, the right-wing prime minister of Italy as well as its richest citizen, and at one point or another, he’s had his fingers in a lot of pies of dubious legality. In the five years that he’s been in power, he’s been systematically rewriting Italian laws standing in his way – laws against, for example, media monopolies. Berlusconi effectively controls most Italian television: it’s a fair guess that he has something to do with Grillo’s ban from Italian television. Indirectly, he’s probably responsible for Grillo turning to new media: Berlusconi doesn’t yet own the internet.

Or the Wikipedia. Grillo brilliantly posits the editing of the Wikipedia as a civic duty. This is consonant with Grillo’s general attitude: he’s also been advocating environmental responsibility, for example. The public editing Berlusconi’s biography seems apt: famously, during the 2001 election, Berlusconi sent out a 200-page biography to every mailbox in Italy which breathlessly chronicled his rise from a singer on cruise ships to the owner of most of Italy. This vanity press biography presented itself as being neutral and objective. Grillo doesn’t buy it: history, he argues, should be written and rewritten by the masses. While Wikipedia claims to strive for a neutral point of view, its real value lies in its capacity to be rewritten by anyone.

How has Grillo’s suggestion played out? Wikipedia has evidently been swamped by “BeppeGrillati” attempting to modify Berlusconi’s biography. The Italian Wikipedia has declared “una edit war” and put a temporary lock on editing the page. From an administrative point of view, this seems understandable; for what it’s worth, there’s a similar, if less stringent, stricture on the English page for Mr. Bush. But this can’t help but feel like a betrayal of Wikipedia’s principles. Editing the Wikipedia should be a civic duty.

ESBNs and more thoughts on the end of cyberspace

Anyone who’s ever seen a book has seen ISBNs, or International Standard Book Numbers — that string of ten digits, right above the bar code, that uniquely identifies a given title. Now come ESBNs, or Electronic Standard Book Numbers, which you’d expect would be just like ISBNs, only for electronic books. And you’d be right, but only partly.  ESBNs, which just came into existence this year, uniquely identify not only an electronic title, but each individual copy, stream, or download of that title — little tracking devices that publishers can embed in their content. And not just books, but music, video or any other discrete media form — ESBNs are media-agnostic.

“It’s all part of the attempt to impose the restrictions of the physical on the digital, enforcing scarcity where there is none,” David Weinberger rightly observes. On the net, it’s not so much a matter of who has the book, but who is reading the book — who is at the book. It’s not a copy, it’s more like a place. But cyberspace blurs that distinction. As Alex Pang explains, cyberspace is still a place to which we must travel. Going there has become much easier and much faster, but we are still visitors, not natives. We begin and end in the physical world, at a concrete terminal.

When I snap shut my laptop, I disconnect. I am back in the world. And it is that instantaneous moment of travel, that light-speed jump, that has unleashed the reams and decibels of anguished debate over intellectual property in the digital era. A sort of conceptual jetlag. Culture shock. The travel metaphors begin to falter, but the point is that we are talking about things confused during travel from one world to another. Discombobulation.

This jetlag creates a schism in how we treat and consume media. When we’re connected to the net, we’re not concerned with copies we may or may not own. What matters is access to the material. The copy is immaterial. It’s here, there, and everywhere, as the poet said. But when you’re offline, physical possession of copies, digital or otherwise, becomes important again. If you don’t have it in your hand, or a local copy on your desktop, then you cannot experience it. It’s as simple as that. ESBNs are a byproduct of this jetlag. They seek to carry the guarantees of the physical world like luggage into the virtual world of cyberspace.

But when that distinction is erased, when connection to the network becomes ubiquitous and constant (as is generally predicted), a pervasive layer over all private and public space, keeping pace with all our movements, then the idea of digital “copies” will be effectively dead. As will the idea of cyberspace. The virtual world and the actual world will be one.

For publishers and IP lawyers, this will simplify matters greatly. Take, for example, webmail. For the past few years, I have relied exclusively on webmail with no local client on my machine. This means that when I’m offline, I have no mail (unless I go to the trouble of making copies of individual messages or printouts). As a consequence, I’ve stopped thinking of my correspondence in terms of copies. I think of it in terms of being there, of being “on my email” — or not. Soon that will be the way I think of most, if not all, digital media — in terms of access and services, not copies.

But in terms of perception, the end of cyberspace is not so simple. When the last actual-to-virtual transport service officially shuts down — when the line between worlds is completely erased — we will still be left, as human beings, with a desire to travel to places beyond our immediate perception. As Sol Gaitan describes it in a brilliant comment to yesterday’s “end of cyberspace” post:

In the West, the desire to blur the line, the need to access the “other side,” took artists to try opium, absinth, kef, and peyote. The symbolists crossed the line and brought back dada, surrealism, and other manifestations of worlds that until then had been held at bay but that were all there. The virtual is part of the actual, “we, or objects acting on our behalf are online all the time.” Never thought of that in such terms, but it’s true, and very exciting. It potentially enriches my reality. As with a book, contents become alive through the reader/user, otherwise the book is a dead, or dormant, object. So, my e-mail, the blogs I read, the Web, are online all the time, but it’s through me that they become concrete, a perceived reality. Yes, we read differently because texts grow, move, and evolve, while we are away and “the object” is closed. But, we still need to read them. Esse rerum est percipi.

Just the other night I saw a fantastic performance of Allen Ginsberg’s Howl that took the poem (which I’d always found alluring but ultimately remote on the page) and, through the conjury of five actors, made it concrete, a perceived reality. I dug Ginsberg’s words. I downloaded them, as if across time. I was in cyberspace, but with sweat and pheromones. The Beats, too, sought sublimity: transport to a virtual world. So, too, did the cyberpunks in the net’s early days. So, too, did early Christian monastics, an analogy that Pang draws:

…cyberspace expresses a desire to transcend the world; Web 2.0 is about engaging with it. The early inhabitants of cyberspace were like the early Church monastics, who sought to serve God by going into the desert and escaping the temptations and distractions of the world and the flesh. The vision of Web 2.0, in contrast, is more Franciscan: one of engagement with and improvement of the world, not escape from it.

The end of cyberspace may mean the fusion of real and virtual worlds, another layer of a massively mediated existence. And this raises many questions about what is real and how, or if, that matters. But the end of cyberspace, despite all the sweeping gospel of Web 2.0, continuous computing, urban computing etc., also signals the beginning of something terribly mundane. Networks of fiber and digits are still human networks, prone to corruption and virtue alike. A virtual environment is still a natural environment. The extraordinary, in time, becomes ordinary. And undoubtedly we will still search for lines to cross.

.tv

People have been talking about internet television for a while now. But Google and Yahoo’s unveiling of their new video search and subscription services last week at the Consumer Electronics Show in Las Vegas seemed to make it real.

Sifting through the predictions and prophecies that subsequently poured forth, I stumbled on something sort of interesting — a small concrete discovery that helped put some of this in perspective. Over the weekend, Slate Magazine quietly announced its partnership with “meaningoflife.tv,” a web-based interview series hosted by Robert Wright, author of Nonzero and The Moral Animal, dealing with big questions at the perilous intersection of science and religion.

Launched last fall (presumably in response to the intelligent design fracas), meaningoflife.tv is a web page featuring a playlist of video interviews with an intriguing roster of “cosmic thinkers” — philosophers, scientists and religious types — on such topics as “Direction in evolution,” “Limits in science,” and “The Godhead.”

This is just one of several experiments in which Slate is fiddling with its text-to-media ratio. Today’s Pictures, a collaboration with Magnum Photos, presents a daily gallery of images and audio-photo essays, recalling both the heyday of long-form photojournalism and a possible future of hybrid documentary forms. One problem is that it’s not terribly easy to find these projects on Slate’s site. The Magnum page has an ad tucked discreetly on the sidebar, but meaningoflife.tv seems to have disappeared from the front page after a brief splash this weekend. For a born-digital publication that has always thought of itself in terms of the web, Slate still suffers from a pretty appalling design, with a small headline area capping a more or less undifferentiated stream of headlines and teasers.

Still, I’m intrigued by these collaborations, especially in light of the forecast TV-net convergence. While internet TV seems to promise fragmentation, these projects provide a comforting dose of coherence: a strong editorial hand and a conscious effort to grapple with big ideas and issues, like the reassuringly nutritious programming of PBS or the BBC. It’s interesting to see text-based publications moving now into the realm of television. As TiVo, on-demand services, and now the internet atomize TV beyond recognition, perhaps magazines and newspapers will fill part of the void left by channels.

Limited as it may now seem, traditional broadcast TV can provide us with valuable cultural touchstones, common frames of reference that help us speak a common language about our culture. That’s one thing I worry we’ll lose as the net blows broadcast media apart. Then again, even in the age of five gazillion cable channels, we still have our water-cooler shows, our mega-hits, our television “events.” And we’ll probably have them on the internet too, even when “by appointment” television is long gone. We’ll just have more choice regarding where, when and how we get at them. Perhaps the difference is that in an age of fragmentation, we view these touchstone programs with a mildly ironic awareness of their mainstream status, through the multiple lenses of our more idiosyncratic and infinitely gratified niche affiliations. They are islands of commonality in seas of specialization. And maybe that makes them all the more refreshing. Shows like “24,” “American Idol,” or a Ken Burns documentary, and major sporting events like the World Cup or the Olympics, draw us like prairie dogs out of our niches, coming up for air from deep submersion in our self-tailored, optional worlds.

exploring the book-blog nexus

It appears that Amazon is going to start hosting blogs for authors. Sort of. Amazon Connect, a new free service designed to boost sales and readership, will host what are essentially stripped-down blogs where registered authors can post announcements, news and general musings. Eventually, customers will be able to keep track of individual writers by subscribing to bulletins that collect in an aggregated “plog” stream on their Amazon home page. But comments and RSS feeds, two of the most popular features of blogs, will not be supported. Engagement with readers will be strictly one-way, and connection to the larger blogosphere basically nil. A missed opportunity if you ask me.

Then again, Amazon probably figured it would be a misapplication of resources to establish a whole new province of blogland. This is more like the special events department of a book store: arranging readings, book signings and the like. There has on occasion, however, been some entertaining author-public interaction in Amazon’s reader reviews, most famously Anne Rice’s lashing out at readers for their chilly reception of her novel Blood Canticle. But evidently Connect blogs are not aimed at sparking this sort of exchange. Genuine literary commotion will have to occur in the nooks and crannies of Amazon’s architecture.

It’s interesting, though, to see this happening just as our own book-blog experiment, Without Gods, is getting underway. Over the past few weeks, Mitchell Stephens has been writing a blog (hosted by the institute) as a way of publicly stoking the fire of his latest book project, a narrative history of atheism to be published next year by Carroll and Graf. While Amazon’s blogs are mainly for PR purposes, our project seeks to foster a more substantive relationship between Mitch and his readers (though, naturally, Mitch and his publisher hope it will have a favorable effect on sales as well). We announced Without Gods a little over two weeks ago and already it has collected well over 100 comments, a high percentage of which are thoughtful and useful.

We are curious to learn how blogging will impact the process of writing the book. By working partially in the open, Mitch in effect raises the stakes of his research — assumptions will be challenged and theses tested. Our hunch isn’t so much that this procedure would be ideal for all books or authors, but that for certain ones it might yield some tangible benefit, whether due to the nature or breadth of their subject, the stage they’re at in their thinking, or simply a desire to try something new.

An example. This past week, Mitch posted a very thinking-out-loud sort of entry on “a positive idea of atheism” in which he wrestles with Nietzsche and the concepts of void and nothingness. This led to a brief exchange in the comment stream where a reader recommended that Mitch investigate the writings of Gora, a self-avowed atheist and figure in the Indian independence movement in the 1930s. Apparently, Gora wrote what sounds like a very intriguing memoir of his meeting with Gandhi (whom he greatly admired) and his various struggles with the religious component of the great leader’s philosophy. Mitch had not previously been acquainted with Gora or his writings, but thanks to the blog and the community that has begun to form around it, he now knows to take a look.

What’s more, Mitch is currently traveling in India, so this could not have come at a more appropriate time. It’s possible that the commenter had noted this from a previous post, which may have helped trigger the Gora association in his mind. Regardless, these are the sorts of serendipitous discoveries one craves while writing a book. I’m thrilled to see the blog making connections where none previously existed.