A number of influential folks around the blogosphere are reluctantly endorsing Google’s decision to play by China’s censorship rules on its new Google.cn service — what one local commentator calls a “eunuch version” of Google.com. Here’s a sampler of opinions:

Ethan Zuckerman (“Google in China: Cause For Any Hope?”):

It’s a compromise that doesn’t make me happy, that probably doesn’t make most of the people who work for Google very happy, but which has been carefully thought through…

In launching Google.cn, Google made an interesting decision – they did not launch versions of Gmail or Blogger, both services where users create content. This helps Google escape situations like the one Yahoo faced when the Chinese government asked for information on Shi Tao, or when MSN pulled Michael Anti’s blog. This suggests to me that Google’s willing to sacrifice revenue and market share in exchange for minimizing situations where they’re asked to put Chinese users at risk of arrest or detention… This, in turn, gives me some cause for hope.

Rebecca MacKinnon (“Google in China: Degrees of Evil”):

At the end of the day, this compromise puts Google a little lower on the evil scale than many other internet companies in China. But is this compromise something Google should be proud of? No. They have put a foot further into the mud. Now let’s see whether they get sucked in deeper or whether they end up holding their ground.

David Weinberger (“Google in China”):

If forced to choose — as Google has been — I’d probably do what Google is doing. It sucks, it stinks, but how would an information embargo help? It wouldn’t apply pressure on the Chinese government. Chinese citizens would not be any more likely to rise up against the government because they don’t have access to Google. Staying out of China would not lead to a more free China.

Doc Searls (“Doing Less Evil, Possibly”):

I believe constant engagement — conversation, if you will — with the Chinese government, beats picking up one’s very large marbles and going home. Which seems to be the alternative.

Much as I hate to say it, this does seem to be the sensible position — not unlike opposing America’s embargo of Cuba. The logic goes that isolating Castro only serves to further isolate the Cuban people, whereas exposure to the rest of the world — even restricted and filtered — might, over time, loosen the state’s monopoly on civic life. Of course, you might say that trading Castro for globalization is merely an exchange of one tyranny for another. But what is perhaps more interesting to ponder right now, in the wake of Google’s decision, is the palpable melancholy felt in the comments above. What does it reveal about what we assume — or used to assume — about the internet and its relationship to politics and geography?

A favorite “what if” of recent history is what might have happened in the Soviet Union had it lasted into the internet age. Would the Kremlin have managed to secure its virtual borders? Or censor and filter the net into a state-controlled intranet — a Union of Soviet Socialist Networks? Or would the decentralized nature of the technology, mixed with the cultural stirrings of glasnost, have toppled the totalitarian state from beneath?

Ten years ago, in the heady early days of the internet, most would probably have placed their bets against the Soviets. The Cold War was over. Some even speculated that history itself had ended, that free-market capitalism and democracy, on the wings of the information revolution, would usher in a long era of prosperity and peace. No borders. No limits.

“Jingjing” and “Chacha.” Internet police officers from the city of Shenzhen who float over web pages and monitor the cyber-traffic of local users.

It’s interesting now to see how exactly the opposite has occurred. Bubbles burst. Towers fell. History, as we now realize, did not end, it was merely on vacation; while the utopian vision of the internet — as a placeless place removed from the inequities of the physical world — has all but evaporated. We realize now that geography matters. Concrete features have begun to crystallize on this massive information plain: ports, gateways and customs houses erected, borders drawn. With each passing year, the internet comes more and more to resemble a map of the world.

Those of us tickled by the “what if” of the Soviet net now have ourselves a plausible answer in China, which, through a stunning feat of pipe control (a combination of censoring filters, on-the-ground enforcement, and general peering over the shoulders of its citizens), has managed to create a heavily restricted local net in its own image. Barely a decade after the fall of the Iron Curtain, we have the Great Firewall of China.

And as we’ve seen this week, and in several highly publicized instances over the past year, the virtual hand of the Chinese government has been substantially strengthened by Western technology companies willing to play by local rules so as not to be shut out of the explosive Chinese market. Tech giants like Google, Yahoo!, and Cisco Systems have proved only too willing to abide by China’s censorship policies, blocking certain search returns and politically sensitive terms like “Taiwanese democracy,” “multi-party elections” or “Falun Gong.” They also specialize in precision bombing, sometimes removing the pages of specific users at the government’s bidding. The most recent incident came just after New Year’s, when Microsoft acquiesced to government requests to shut down the MSN Spaces site of popular muckraking blogger Zhao Jing, aka Michael Anti.

One of many angry responses that circulated the non-Chinese net in the days that followed.

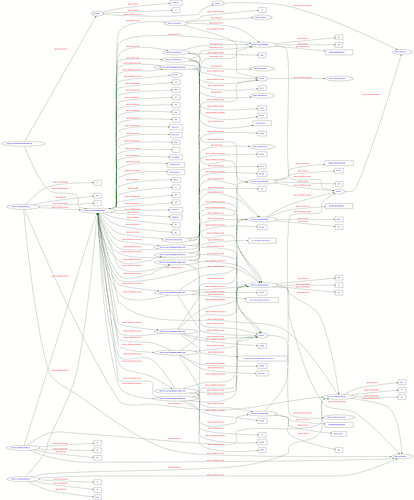

We tend to forget that the virtual is built of physical stuff: wires, cable, fiber. In short, the pipes. Whoever controls those pipes, be it governments or telecoms, has the potential to control what passes through them. The result is that the internet comes in many flavors, depending in large part on where you are logging in. As Jack Goldsmith and Timothy Wu explain in an excellent article in Legal Affairs (adapted from their forthcoming book Who Controls the Internet?: Illusions of a Borderless World), China, far from being the boxed-in exception to an otherwise borderless net, is actually just the uglier side of a global reality. The net has been mapped out geographically into “a collection of nation-state networks,” each with its own politics, social mores, and consumer appetites. The very same technology that enables Chinese authorities to write the rules of their local net enables companies around the world to target advertising and gear services toward local markets. Goldsmith and Wu:

…information does not want to be free. It wants to be labeled, organized, and filtered so that it can be searched, cross-referenced, and consumed….Geography turns out to be one of the most important ways to organize information on this medium that was supposed to destroy geography.

Who knows? When networked devices truly are ubiquitous and can pinpoint our location wherever we roam, the internet could be censored or tailored right down to the individual level (like the empire in Borges’ fable that commissions a one-to-one map of its territory that upon completion perfectly covers every corresponding inch of land like a quilt).

The case of Google, while by no means unique, serves well to illustrate how threadbare the illusion of the borderless world has become. The company’s famous credo, “don’t be evil,” just doesn’t hold up in the messy, complicated real world. “Choose the lesser evil” might be more appropriate. Also crumbling upon contact with air is Google’s famous mission, “to make the world’s information universally accessible and useful,” since, as we’ve learned, Google will actually vary the world’s information depending on where in the world it operates.

Google may be behaving responsibly for a corporation, but it’s still a corporation, and corporations, in spite of well-intentioned employees, some of whom may go to great lengths to steer their company onto the righteous path, are still ultimately built to do one thing: get ahead. Last week in the States, the get-ahead impulse happened to be consonant with our values. Not wanting to spook American users, Google chose to refuse a Dept. of Justice request for search records to aid its anti-pornography crackdown. But this week, not wanting to ruffle the Chinese government, Google compromised and became an agent of political repression. “Degrees of evil,” as Rebecca MacKinnon put it.

The great irony is that technologies we romanticized as inherently anti-tyrannical have turned out to be powerful instruments of control, highly adaptable to local political realities, be they state or market-driven. Not only does the Chinese government use these technologies to suppress democracy, it does so with the help of its former Cold War adversary, America — or rather, the corporations that in a globalized world are the de facto co-authors of American foreign policy. The internet is coming of age and with that comes the inevitable fall from innocence. Part of us desperately wanted to believe Google’s silly slogans because they said something about the utopian promise of the net. But the net is part of the world, and the world is not so simple.

There are several reasons that Yahoo! released some of their
