A November 27 Chicago Tribune article by Julia Keller bundles together hypertext fiction, blogging, texting, and new electronic distribution methods for books under a discussion of “e-literature.” Interviewing Scott Rettberg (of Grand Text Auto) and MIT’s William J. Mitchell, the reporter argues that the hallmark of e-literature is increased consumer control over the shape and content of a book:

Literature, like all genres, is being reimagined and remade by the constantly unfolding extravagance of technological advances. The question of who’s in charge — the producer or the consumer — is increasingly relevant to the literary world. The idea of the book as an inert entity is gradually giving way to the idea of the book as a fluid, formless repository for an ever-changing variety of words and ideas by a constantly modified cast of writers.

A fluid, formless repository? Ever-changing words? This is the iPod version of the future of literature, and I’m having a hard time articulating why I find it disturbing. It might be the idea that the digitized literature will bring about a sort of consumer revolution. I can’t help but think of this idea as a strange rearticulation of the Marxist rhetoric of the Language Poets, a group of experimental writers who claimed to give the reader a greater role in the production process of a literary work as part of a critique of capitalism (more on this here). In the iPod model of e-literature, readers don’t challenge the capitalist system: they are consumers, empowered by their purchasing power.

There’s also a contradiction in the article itself: Keller’s evolutionary narrative, in which the “inert book” slowly becomes an obsolete concept, is undermined by her last paragraphs. She ends the article by quoting Mitchell, who insists that there will always be a place for “traditional paper-based literature” because a book “feels good, looks good — it really works.” This gets us back to Malcolm Gladwell territory: is it true that paper books will always seem to work better than digital ones? Or is it just too difficult to think beyond what “feels good” right now?

Monthly Archives: November 2005

sober thoughts on google: privatization and privacy

Siva Vaidhyanathan has written an excellent essay for the Chronicle of Higher Education on the “risky gamble” of Google’s book-scanning project — some of the most measured, carefully considered comments I’ve yet seen on the issue. His concerns are not so much for the authors and publishers that have filed suit (on the contrary, he believes they are likely to benefit from Google’s service), but for the general public and the future of libraries. Outsourcing to a private company the vital task of digitizing collections may prove to have been a grave mistake on the part of Google’s partner libraries. Siva:

The long-term risk of privatization is simple: Companies change and fail. Libraries and universities last…..Libraries should not be relinquishing their core duties to private corporations for the sake of expediency. Whichever side wins in court, we as a culture have lost sight of the ways that human beings, archives, indexes, and institutions interact to generate, preserve, revise, and distribute knowledge. We have become obsessed with seeing everything in the universe as “information” to be linked and ranked. We have focused on quantity and convenience at the expense of the richness and serendipity of the full library experience. We are making a tremendous mistake.

This essay contains in abundance what has largely been missing from the Google books debate: intellectual courage. Vaidhyanathan, an intellectual property scholar and “avowed open-source, open-access advocate,” easily could have gone the predictable route of scolding the copyright conservatives and spreading the Google gospel. But he manages to see the big picture beyond the intellectual property concerns. This is not just about economics; it’s about knowledge and the public interest.

What irks me about the usual debate is that it forces you into a position of either resisting Google or being its apologist. But this fails to get at the real bind we all are in: the fact that Google provides invaluable services and yet is amassing too much power; that a private company is creating a monopoly on public information services. Sooner or later, there is bound to be a conflict of interest. That is where we, the Google-addicted public, are caught. It’s more complicated than hip versus square, or good versus evil.

Here’s another good piece on Google. On Monday, The New York Times ran an editorial by Adam Cohen that nicely lays out the privacy concerns:

Google says it needs the data it keeps to improve its technology, but it is doubtful it needs so much personally identifiable information. Of course, this sort of data is enormously valuable for marketing. The whole idea of “Don’t be evil,” though, is resisting lucrative business opportunities when they are wrong. Google should develop an overarching privacy theory that is as bold as its mission to make the world’s information accessible – one that can become a model for the online world. Google is not necessarily worse than other Internet companies when it comes to privacy. But it should be doing better.

Two graduate students at Stanford in the mid-90s recognized that search engines would be the most important tools for dealing with the incredible flood of information that was then beginning to swell, so they started indexing web pages and working on algorithms. But as the company has grown, Google’s admirable-sounding mission statement — “to organize the world’s information and make it universally accessible and useful” — has become its manifest destiny, and “information” can now encompass the most private of territories.

At one point it simply meant search results — the answers to our questions. But now it’s the questions as well. Google is keeping a meticulous record of our clickstreams, piecing together an enormous database of queries, refining its search algorithms and, some say, even building a massive artificial brain (more on that later). What else might they do with all this personal information? To date, all of Google’s services are free, but there may be a hidden cost.

“Don’t be evil” may be the company motto, but with its IPO last year, Google adopted a new ideology: they are now a public corporation. If web advertising (their sole source of revenue) levels off, then investors currently high on $400+ shares will start clamoring for Google to maintain profits. “Don’t be evil to us!” they will cry. And what will Google do then?

images: New York Public Library reading room by Kalloosh via Flickr; archive of the original Google page

flushing the net down the tubes

Grand theories about upheavals on the internet horizon are in ready supply. Singularities are near. Explosions can be expected in the next six to eight months. Or the whole thing might just get “flushed” down the tubes. This last scenario is described at length in a recent essay in Linux Journal by Doc Searls, which predicts the imminent hijacking of the net by phone and cable companies who will turn it into a top-down, one-way broadcast medium. In other words, the net’s utopian moment, the “read/write” web, may be about to end. Reading Searls’ piece, I couldn’t help thinking about the story of radio and a wonderful essay Brecht wrote on the subject in 1932:

Here is a positive suggestion: change this apparatus over from distribution to communication. The radio would be the finest possible communication apparatus in public life, a vast network of pipes. That is to say, it would be if it knew how to receive as well as to transmit, how to let the listener speak as well as hear, how to bring him into a relationship instead of isolating him. On this principle the radio should step out of the supply business and organize its listeners as suppliers….turning the audience not only into pupils but into teachers.

Unless you’re the military, law enforcement, or a short-wave hobbyist, two-way radio never happened. On the mainstream commercial front, radio has always been about broadcast: a one-way funnel. The big FM tower to the many receivers, “prettifying public life,” as Brecht puts it. Radio as an agitation? As an invitation to a debate, rousing families from the dinner table into a critical encounter with their world? Well, that would have been neat.

Now there’s the internet, a two-way, every-which-way medium — a stage of stages — that would have positively staggered a provocateur like Brecht. But although the net may be a virtual place, it’s built on some pretty actual stuff. Copper wire, fiber optic cable, trunks, routers, packets — “the vast network of pipes.” The pipes are owned by the phone and cable companies — the broadband providers — and these guys expect a big return (far bigger than they’re getting now) on the billions they’ve invested in laying down the plumbing. Searls:

The choke points are in the pipes, the permission is coming from the lawmakers and regulators, and the choking will be done….The carriers are going to lobby for the laws and regulations they need, and they’re going to do the deals they need to do. The new system will be theirs, not ours….The new carrier-based Net will work in the same asymmetrical few-to-many, top-down pyramidal way made familiar by TV, radio, newspapers, books, magazines and other Industrial Age media now being sucked into Information Age pipes. Movement still will go from producers to consumers, just like it always did.

If Brecht were around today I’m sure he would have already written (or blogged) to this effect, no doubt reciting the sad fate of radio as a cautionary tale. Watch the pipes, he would say. If companies talk about “broad” as in “broadband,” make sure they’re talking about both ends of the pipe. The way broadband works today, the pipe running into your house dwarfs the one running out. That means more download and less upload, and it’s paving the way for a content delivery platform every bit as powerful as cable on an infinitely broader band. Data storage, domain hosting — anything you put up there — will be increasingly costly, though there will likely remain plenty of chat space and web mail provided for free, anything that allows consumers to fire their enthusiasm for commodities through the synapse chain.

If the net goes the way of radio, that will be the difference (allow me to indulge in a little dystopia). Imagine a classic Philco cathedral radio but with a few little funnel-ended hoses extending from the side that connect you to other listeners. “Tune into this frequency!” “You gotta hear this!” You whisper recommendations through the tube. It’s sending a link. Viral marketing. Yes, the net will remain two-way to the extent that it helps fuel the market. Web browsers, like the old Philco, would essentially be receivers, enabling participation only to the extent that it encouraged others to receive.

You might even get your blog hosted for free if you promote products — a sports shoe with gelatinous heels or a music video that allows you to undress the dancing girls with your mouse. Throw in some political rants in between to blow off some steam, no problem. That’s entrepreneurial consumerism. Make a living out of your appetites and your ability to make them infectious. Hip recommenders can build a cosy little livelihood out of their endorsements. But any non-consumer activity will be more like amateur short-wave radio: a mildly eccentric (and expensive) hobby (and they’ll even make a saccharine movie about a guy communing with his dead firefighter dad through a ghost blog).

Searls sees it as above all a war of language and metaphor. The phone and cable companies will dominate as long as the internet is understood fundamentally as a network of pipes, a kind of information transport system. This places the carriers at the top of the hierarchy — the highway authority setting the rules of the road and collecting the tolls. So far the carriers have managed, through various regulatory wrangling and court rulings, to ensure that the “transport metaphor” has prevailed.

But obviously the net is much more than the sum of its pipes. It’s a public square. It’s a community center. It’s a market. And it’s the biggest publishing system the world has ever known. Searls wants to promote “place metaphors” like these. Sure, unless you’re a lobbyist for Verizon or SBC, you probably already think of it this way. But in the end it’s the lobbyists who will make all the difference. Unless, that is, an enlightened citizens’ lobby begins making some noise. So a broad, broad as in broadband, public conversation should be in order. Far broader than what goes on in the usual progressive online feedback loops — the Linux and open source communities, the creative commies, and the techno-hip blogosphere, which I’m sure are already in agreement about this.

Google also seems to have an eye on the pipes, reportedly having bought thousands of miles of “dark fiber” — pipe that has been laid but is not yet in use. Some predict a nationwide “Googlenet.” But this can of worms is best saved for another post.

virtual libraries, real ones, empires

Last Tuesday, a Washington Post editorial written by Librarian of Congress James Billington outlined the possible benefits of a World Digital Library, a proposed LOC endeavor discussed last week in a post by Ben Vershbow. Billington seemed to imagine the library as a sort of United Nations of information: claiming that “deep conflict between cultures is fired up rather than cooled down by this revolution in communications,” he argued that a US-sponsored, globally inclusive digital library could serve to promote harmony over conflict:

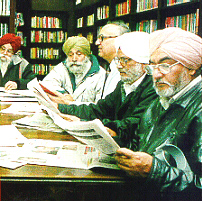

Libraries are inherently islands of freedom and antidotes to fanaticism. They are temples of pluralism where books that contradict one another stand peacefully side by side just as intellectual antagonists work peacefully next to each other in reading rooms. It is legitimate and in our nation’s interest that the new technology be used internationally, both by the private sector to promote economic enterprise and by the public sector to promote democratic institutions. But it is also necessary that America have a more inclusive foreign cultural policy — and not just to blunt charges that we are insensitive cultural imperialists. We have an opportunity and an obligation to form a private-public partnership to use this new technology to celebrate the cultural variety of the world.

What’s interesting about this quote (among other things) is that Billington seems to be suggesting that a World Digital Library would function in much the same manner as a real-world library, and yet he’s also arguing for the importance of actual physical proximity. He writes, after all, about books literally, not virtually, touching each other, and about researchers meeting up in a shared reading room. There seems to be a tension here, in other words, between Billington’s embrace of the idea of a world digital library, and a real anxiety about what a “library” becomes when it goes online.

I also feel like there’s some tension here — in Billington’s editorial and in the whole World Digital Library project — between “inclusiveness” and “imperialism.” Granted, if the United States provides Brazilians access to their own national literature online, this might be used by some as an argument against the idea that we are “insensitive cultural imperialists.” But there are many varieties of empire: indeed, as many have noted, the sun stopped setting on Google’s empire a while ago.

To be clear, I’m not attacking the idea of the World Digital Library. Having watched the Smithsonian invest in, and waffle on, some of its digital projects, I’m all for a sustained commitment to putting more material online. But there needs to be some careful consideration of the differences between online libraries and physical ones, as well as a bit more discussion of just what a privately funded digital library might eventually morph into.

gaming and the academy

So, what happens when you put together a drama professor and a computer science one?

You get an entertainment technology program. In an article in the NY Times, Seth Schiesel describes the blossoming of academic programs devoted entirely to the study and development of video games, offering courses that range from basic game programming to contemporary culture studies.

Video games first appeared about three decades ago, and they are now well on their way to becoming the dominant medium of the 21st century. They are played across the world by people of all ages and from all walks of life. And at a time when everything is measured by the bottom line, they have in fact surpassed the movie industry in sales. The academy, therefore, however conservative, cannot ignore this phenomenon for long. So from The New School (which includes Parsons) to Carnegie Mellon, prestigious colleges and universities are beginning to offer programs in interactive media. In the last five years the number of universities offering game-related programs has grown from a mere handful to more than 100. This hardly amounts to widescale penetration of higher education, but the trend is unmistakable.

The video game industry has a stake in advancing these programs, since it stands to benefit from a pool of smart, sophisticated young developers ready upon graduation to work on commercial games. Bing Gordon, chief creative officer of Electronic Arts, says that there is an overproduction of cinema studies professionals, but that the game industry still lacks the abundant inflow of talent that the film industry enjoys. Considering the state of public education in this country, it seems that video game programs will continue to flourish only with the help of private funds.

The academy offers the possibility for multidisciplinary study to enrich students’ technical and academic backgrounds, and to produce well-rounded talents for the professional world. In his article, Schiesel quotes Bing Gordon:

To create a video game project you need the art department and the computer science department and the design department and the literature or film department all contributing team members. And then there needs to be a leadership or faculty that can evaluate the work from the individual contributors but also evaluate the whole project.

These collaborations are possible now, in part, because technology has become an integral part of art production in the 21st century. It’s no longer just for geeks. The contributions of new media artists are too prominent and sophisticated to be ignored. Therefore it seems quite natural that, for instance, an art department might collaborate with faculty in computer science.

war on text?

Last week, there was a heated discussion on the 1600-member Yahoo Groups videoblogging list about the idea of videobloggers launching a “war on text” — not necessarily calling for book burning, but at least promoting the use of threaded video conversations as a way of replacing text-based communication online. It began with a post to the list by Steve Watkins and led to responses such as this enthusiastic embrace of the end of using text to communicate ideas:

Audio and video are a more natural medium than text for most humans. The only reason why net content is mainly text is that it’s easier for programs to work with — audio and video are opaque as far as programs are concerned. On top of that, it’s a lot easier to treat text as hypertext, and hypertext has a viral quality.

As a text-based attack on the printed word, the “war on text” debate had a Phaedrus aura about it, especially since the vloggers seemed to be gravitating towards the idea of secondary orality described by Walter Ong in Orality and Literacy: a form of communication that involves at least the representation of an oral exchange, but that also draws on a world defined by textual literacy. The vloggers’ debt to the written word was more explicitly acknowledged in some posts, such as one by Steve Garfield that declared his work to be a “marriage of text and video.”

Over several days, the discussion veered to cover topics such as film editing, the over-mediation of existence, and the transition from analog to digital. The sophistication and passion of the discussion gave a sense of the way at least some in the video blogging community are thinking, both about the relationship between their work and text-based blogging and about the larger relationship between the written word and other forms of digitally mediated communication.

Perhaps the most radical suggestion in the entire exchange was the prediction that video itself would soon seem to be an outmoded form of communication:

in my opinion, before video will replace text, something will replace video…new technologies have already been developed that are more likely to play a large role in communications over this century… how about the one that can directly interface to the brain (new scientist reports on electroencephalography with quadriplegics able to make a wheelchair move forward, left or right)… considering the full implications of devices like this, it’s not hard to see where the real revolutions will occur in communications.

This comment implies that debates such as the “war on text” are missing the point: other forms of mediation are on the horizon that will radically change our understanding of what “communication” entails, and make the distinction between orality and literacy seem relatively minuscule. It’s an apocalyptic idea (like the idea that the internet will explode), but perhaps one worth talking about.

alternative journalisms

Craigslist founder Craig Newmark has announced he will launch a major citizen journalism site within the next three months. As quoted in The Guardian:

The American public has lost a lot of trust in conventional newspaper mechanisms. Mechanisms are now being developed online to correct that.

…It was King Henry II who said: ‘Won’t someone rid me of that turbulent priest?’ We have seen a modern manifestation of that in the US with the instances of plausible deniability, the latest example of that has been the Valerie Plame case and that has caused damage.

Can a Craigslist approach work for Washington politics? It’s hard to imagine a million worker ants distributed across the nation cracking Plamegate. You’re more likely to get results from good old investigative reporting, but combined with a canny postmodern sense of spin (and we’re not just talking about the Bush administration’s spin, but Judith Miller’s spin, The New York Times’ spin) and the ability to make that part of the story. Combine the best of professional journalism with the best of the independent blogosphere. Can this be done?

Josh Marshall of Talking Points Memo fame wants to bridge the gap with a new breed of “reporter-blogger,” currently looking to fill two such positions — paid positions — for a new muckraking blog that will provide “wall-to-wall coverage of corruption, self-dealing, and betrayals of the public trust in today’s Washington” (NY Sun has details). While other high-profile bloggers sign deals with big media, Marshall clings fast to his independence, but recognizes the limitations of not being on the ground, in the muck, as it were. He’s banking that his new cyborgs might be able to shake up the stagnant Washington press corps from the inside, or at least offer readers a less compromised view (though perhaps down the road fledgling media empires like Marshall’s will become the new media establishment).

That’s not to say that the Craigslist approach will not be interesting, and possibly important. It was dazzling to witness the grassroots information network that sprang up on the web during Hurricane Katrina, including on the Craigslist New Orleans site, which became a clearinghouse for news on missing persons and a housing directory for the displaced. For sprawling catastrophes like this it’s impossible to have enough people on the ground. Unless the people on the ground start reporting themselves.

Citizen journalists also pick up on small stories that slip through the cracks. You could say the guy who taped the Rodney King beating was a “citizen journalist.” You could say this video (taken surreptitiously on a cellphone) of a teacher in a New Jersey high school flipping out at a student for refusing to stand for the national anthem is “citizen journalism.” Some clips speak for themselves, but more often you need context, you need to know how to frame it. The interesting thing is how grassroots journalism can work with a different model for contextualization. The New Jersey video made the rounds on the web and soon became a story in the press. One person slaps up some footage and everyone else comments, re-blogs and links out. The story is told collectively.

the next dictionary

I found this Hartford Courant article on Slashdot.

Martin Benjamin heads up an eleven-year-old project to create an online Swahili dictionary, the Kamusi Project. Although Swahili has 80 million speakers, the current Swahili dictionary is over 30 years old. What sets this project apart from other online dictionaries is that its entries are created not only by academics but also by volunteers ranging from former Peace Corps workers to hobbyists of African languages. The site also includes a discussion board for the community of users and developers.

It is also worth mentioning that, like Wikipedia, this collaborative project is supported by donations and volunteers. Unlike Wikipedia, however, it lacks a broad audience and publicity, which makes funding a continual issue.

multimedia vs intermedia

One of the odd things that strikes me about so much writing about technology is the seeming assumption that technology (and the ideas associated with it) arise from some sort of cultural vacuum. It’s an odd myopia, if not an intellectual arrogance, and it results in a widespread failure to notice many connections that should be obvious. One such connection is that between the work of many of the conceptual artists of the 1960s and continuing attempts to sort out what it means to read when books don’t need to be limited to text & illustrations. (This, of course, is a primary concern of the Institute.)

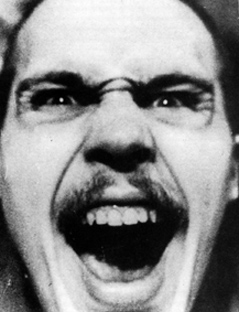

Lately I’ve been delving through the work of Dick Higgins (1938–1998), a self-described “polyartist” who might be most easily situated by declaring him to be a student of John Cage and part of the Fluxus group of artists. This doesn’t quite do him justice: Higgins’s work bounced back and forth between genres of the avant-garde, from music composition (in the rather frightening photo above, he’s performing his Danger Music No. 17, which instructs the performer to scream as loudly as possible for as long as possible) to visual poetry to street theatre. He supported himself by working as a printer: the first of several publishing ventures was the Something Else Press, which he ran from 1963 to 1974, publishing a variety of works by artists and poets as well as critical writing.

“Betweenness” might be taken as the defining quality of his work, and this betweenness is what interests me. Higgins recognized this – he was perhaps as strong a critic as an artist – and in 1964, he coined the term “intermedia” to describe what he & his fellow Fluxus artists were doing: going between media, taking aspects from established forms to create new ones. An example of this might be visual or concrete poetry, of which the work of Jackson Mac Low or Ian Hamilton Finlay – both of whom Higgins published – might be taken as representative. Visual poetry exists as an intermediate form between poetry and graphic design, taking on aspects of both. This is elaborated (in an apposite form) in his poster on poetry & its intermedia.

Higgins certainly did not imagine he was the first to use the idea of intermedia; he traced the word itself back to Samuel Taylor Coleridge, who had used it in the same sense in 1812. The concept has similarities to Richard Wagner’s idea of opera as Gesamtkunstwerk, the “total artwork”, combining theatre and music. But Higgins suggested that the roots of the idea could be found in the sixteenth century, in Giordano Bruno’s On the Composition of Signs and Images, which he translated into English and annotated. And though it might be an old idea, a quote from a text about intermedia that he wrote in 1965 (available online at the always reliable Ubuweb) suggests parallels between the avant-garde world Higgins was working in forty years ago and our concern at the Institute: the way the book seems to be changing in the electronic world.

Much of the best work being produced today seems to fall between media. This is no accident. The concept of the separation between media arose in the Renaissance. The idea that a painting is made of paint on canvas or that a sculpture should not be painted seems characteristic of the kind of social thought–categorizing and dividing society into nobility with its various subdivisions, untitled gentry, artisans, serfs and landless workers–which we call the feudal conception of the Great Chain of Being. This essentially mechanistic approach continued to be relevant throughout the first two industrial revolutions, just concluded, and into the present era of automation, which constitutes, in fact, a third industrial revolution.

Higgins doesn’t explicitly mention the print revolution started by Gutenberg, or the way reading is increasingly moving from the page to the screen, but that doesn’t seem like an enormous leap to make. A chart he made in 1995 diagrams the interactions among various sorts of intermedia:

Note the question marks – Higgins knew there was terrain yet to be mapped out, and an interview suggests that he imagined that the genre-mixing facilitated by the computer might be able to fill in those spaces.

“Multimedia” is something that comes up all the time when we’re talking about what computers do to reading. The concept is simple: you take a book & you add pictures and sound clips and movies. To me it’s always suggested an assemblage of different kinds of media with a rubber band (the computer program or the webpage) around them, an assemblage that usually doesn’t work as a whole because its elements are too disparate. Higgins’s intermedia is a more appealing idea: something that falls between forms is more likely to bear scrutiny as a unified object than a collection of objects is. The simple equation text + pictures (the simplest and most common way we think about multimedia) is less interesting to me than a unified whole that falls between text and pictures. When you have text + pictures, it’s all too easy to criticize the text and pictures separately: this picture, compared to all other possible pictures, invariably suffers, just as this text, compared to all other possible texts, must suffer. Put in other terms, it’s a design failure.

A note Higgins added in 1981 to the essay quoted above presents more explicitly the ways in which intermedia can be a useful tool for approaching new work:

It is today, as it was in 1965, a useful way to approach some new work; one asks oneself, “what that I know does this new work lie between?” But it is more useful at the outset of a critical process than at the later stages of it. Perhaps I did not see that at the time, but it is clear to me now. Perhaps, in all the excitement of what was, for me, a discovery, I overvalued it. I do not wish to compensate with a second error of judgment and to undervalue it now. But it would seem that to proceed further in the understanding of any given work, one must look elsewhere–to all the aspects of a work and not just to its formal origins, and at the horizons which the work implies, to find an appropriate hermeneutic process for seeing the whole of the work in my own relation to it.

The last sentence bears no small relevance to new media criticism: while a video game might be kind of like a film or kind of like a book, it’s not simply the sum of its parts. This might be seen as the difference between thinking in terms of multimedia and thinking in terms of intermedia. We might use it as a guideline for thinking about the book to come, which isn’t a simple replacement for the printed book but a new form entirely. While we can think about the future book as incorporating existing pieces (text, film, pictures, sound), we will only really be able to appreciate these objects when we find a way to look at them as a whole.

An addendum: “Intermedia” was also the name of a hypertext project started at Brown in 1985 that Ted Nelson, among others, was involved with. It’s hard to tell whether it was so named out of familiarity with Higgins’s work, but I suspect not: these two threads, the technological and the artistic, seem to run in parallel. But this isn’t necessarily, as is commonly supposed, because the artists weren’t interested in technical possibilities. Higgins’s Book of Love & Death & War, a book-length aleatory poem published in 1972, might merit a chapter in the history of books & computers: in his preface he notes that one of its cantos was composed with the help of a FORTRAN IV program he wrote to randomize its lines. (Canto One of the poem is online at Ubuweb; this is not, however, the computer-generated part of the work.)

And another addendum: something else to take from Higgins might be his resistance to commodification. Because his work fell between the crevices of recognized forms, it couldn’t easily be marketed: how would you go about selling his metadramas, for example? It does, however, seem perfectly suited to the web, & perhaps this resistance to commodification is apropos right now, amid furious debates about whether information wants to be free. “The word,” notes Higgins in one of his Aphorisms for a Rainy Day in the poster above, “is not dead, it is merely changing its skin.”

explosion

A Nov. 18 post on Adam Green’s Darwinian Web makes the claim that the web will “explode” (does he mean implode?) over the next year. According to Green, RSS feeds will render many websites obsolete:

The explosion I am talking about is the shifting of a website’s content from internal to external. Instead of a website being a “place” where data “is” and other sites “point” to, a website will be a source of data that is in many external databases, including Google. Why “go” to a website when all of its content has already been absorbed and remixed into the collective datastream.

Does anyone agree with Green? Will feeds bring about a restructuring of “the way content is distributed, valued and consumed”? More on this here.