Omnisio, a new Y Combinator startup, lets people grab clips from the Web and mash them up. Users can integrate video with slide presentations, and enable time-sensitive commenting in little popup bubbles layered on the video.

MediaCommons was founded partly to find a way of conducting media studies discussions at a pace more congruent with changes in the media landscape. It’s tempting to see this as part of that same narrative: crowdsourcing media commentary for the ADHD generation. For me, though, it evokes a question that Kate Pullinger raised during the research Chris and I conducted for the Arts Council. Namely: are we seeing an ineluctable decline of text on the Web? Are writers becoming multi-skilled media assemblers, masher-uppers, creators of Slideshares and videocasts and the rest? And if so, is this a bad thing?

I’ve been re-reading In The Beginning Was The Command Line, a 1999 meditation by Neal Stephenson on the paradigm shift from command line to GUI interactions in computer use. In a discussion on Disneyland, he draws a parallel between ‘Disneyfication’ and the shift from command line to GUI paradigm, and thence to an entire approach to culture:

Why are we rejecting explicit word-based interfaces, and embracing graphical or sensorial ones–a trend that accounts for the success of both Microsoft and Disney?

Part of it is simply that the world is very complicated now–much more complicated than the hunter-gatherer world that our brains evolved to cope with–and we simply can’t handle all of the details. We have to delegate. We have no choice but to trust some nameless artist at Disney or programmer at Apple or Microsoft to make a few choices for us, close off some options, and give us a conveniently packaged executive summary.

But more importantly, it comes out of the fact that, during this century, intellectualism failed, and everyone knows it. In places like Russia and Germany, the common people agreed to loosen their grip on traditional folkways, mores, and religion, and let the intellectuals run with the ball, and they screwed everything up and turned the century into an abbatoir. Those wordy intellectuals used to be merely tedious; now they seem kind of dangerous as well.

We Americans are the only ones who didn’t get creamed at some point during all of this. We are free and prosperous because we have inherited political and values systems fabricated by a particular set of eighteenth-century intellectuals who happened to get it right. But we have lost touch with those intellectuals, and with anything like intellectualism, even to the point of not reading books any more, though we are literate. We seem much more comfortable with propagating those values to future generations nonverbally, through a process of being steeped in media.

So this culture, steeped in media, emerges from intellectualism and arrives somewhere quite different. Stephenson goes on to discus the extent to which word processing programs complicate the assumed immutability of the written word, whether through system crashes, changing formats or other technical problems:

The ink stains the paper, the chisel cuts the stone, the stylus marks the clay, and something has irrevocably happened (my brother-in-law is a theologian who reads 3250-year-old cuneiform tablets–he can recognize the handwriting of particular scribes, and identify them by name). But word-processing software–particularly the sort that employs special, complex file formats–has the eldritch power to unwrite things. A small change in file formats, or a few twiddled bits, and months’ or years’ literary output can cease to exist.

For Stephenson, a skilled programmer as well as a writer, the solution is to dive into FLOSS tools, to become adept enough at the source code to escape reliance on GUIs. But what about those who do not? This is the deep anxiety that underpins the Flash-is-evil debate that pops up now and again in discussions of YouTube: when you can’t ‘View Source’ any more, how are you supposed to learn? Mashup applications like Microsoft’s Popfly give me the same nervous feeling of wielding tools that I don’t – and will never – understand.

And it’s central to the question confronting us, as the Web shifts steadily away from simple markup and largely textual interactions, toward multifaceted mashups and visual media that relegate the written word to a medium layered over the top – almost an afterthought. Stephenson is ambivalent about the pros and cons of ‘interface culture’: “perhaps the goal of all this is to make us feckless so we won’t nuke each other”, he says, but ten years on, deep in the War on Terror, it’s clear that hypermediation hasn’t erased the need for bombs so much as added webcams to their explosive noses so we can cheer along. And despite my own streak of techno-meritocracy (‘if they’ve voted it to the top then dammit, it’s the best’) I have to admit to wincing at the idea that intellectualism is so thoroughly a thing of the past.

This was meant to be a short post about how exciting it was to be able to blend video with commentary, and how promising this was for new kinds of literacy. But then I watched this anthology of Steve Ballmer videos, currently one of the most popular on the Omnisio site, and (once I stopped laughing) started thinking about the commentary over the top. What it’s for (mostly heckling), what it achieves, and how it relates to – say – the kind of skill that can produce an essay on the cultural ramifications of computer software paradigms. And it’s turned into a speculation about whether, as a writer, I’m on the verge of becoming obsolete, or at least in need of serious retraining. I don’t want this to lapse into the well-worn trope that conflates literacy with moral and civic value – but I’m unnerved by the notion of a fully post-literate world, and by the Flash applications and APIs that inhabit it.

To make a trifecta of film posts for the day, I’ll point out Jonathan Harris’s

To make a trifecta of film posts for the day, I’ll point out Jonathan Harris’s

A little while back I was musing on the possibility of a

A little while back I was musing on the possibility of a

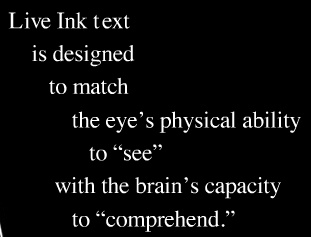

How can we ‘see’ a written text? Do you have a new way of visualizing writing on the screen? If so, then McKenzie Wark and the Institute for the Future of the Book have a challenge for you. We want you to visualize McKenzie’s new book, Gamer Theory.

How can we ‘see’ a written text? Do you have a new way of visualizing writing on the screen? If so, then McKenzie Wark and the Institute for the Future of the Book have a challenge for you. We want you to visualize McKenzie’s new book, Gamer Theory.