To mark the posting of the final chunk of chapter 1 of the Expressive Processing manuscript on Grand Text Auto, Noah has kicked off what will hopefully be a revealing meta-discussion to run alongside the blog-based peer review experiment. The first meta post includes a roundup of comments from the first week and invites readers to comment on the process as a whole. As you’ll see, there’s already been some incisive feedback and Noah is mulling over revisions. Chapter 2 starts tomorrow.

In case you missed it, here’s an intro to the project.


amazon reviewer no. 7 and the ambiguities of web 2.0

Slate takes a look at Grady Harp, Amazon’s no. 7-ranked book reviewer, and finds the amateur-driven literary culture there to be a much grayer area than expected:

Absent the institutional standards that govern (however notionally) professional journalists, Web 2.0 stakes its credibility on the transparency of users’ motives and their freedom from top-down interference. Amazon, for example, describes its Top Reviewers as “clear-eyed critics [who] provide their fellow shoppers with helpful, honest, tell-it-like-it-is product information.” But beneath the just-us-folks rhetoric lurks an unresolved tension between transparency and opacity; in this respect, Amazon exemplifies the ambiguities of Web 2.0. The Top 10 List promises interactivity – “How do I become a Top Reviewer?” – yet Amazon guards its rankings algorithms closely…. As in any numbers game (tax returns, elections) opacity abets manipulation.

expressive processing: an experiment in blog-based peer review

An exciting new experiment begins today, one which ties together many of the threads begun in our earlier “networked book” projects, from Without Gods to Gamer Theory to CommentPress. It involves a community, a manuscript, and an open peer review process – and, very significantly, the blessing of a leading academic press. (The Chronicle of Higher Education also reports.)

The community in question is Grand Text Auto, a popular multi-author blog about all things relating to digital narrative, games and new media, which, for many readers here, probably needs no further introduction. The author is Noah Wardrip-Fruin, a professor of communication at UC San Diego, a writer/maker of digital fictions, and, of course, a blogger at GTxA. His book, which starting today will be posted in small chunks, open to reader feedback, every weekday over a ten-week period, is called Expressive Processing: Digital Fictions, Computer Games, and Software Studies. It probes the fundamental nature of digital media, looking specifically at the technical aspects of creation – the machines and software we use, the systems and processes we must learn and employ in order to make media – and how this changes how and what we create. It’s an appropriate guinea pig, when you think about it, for an open review experiment that implicitly asks: how does this new technology (and the new social arrangements it makes possible) change how a book is made?


The press that has given the green light to all of this is none other than MIT, with whom Noah has published several important, vibrantly interdisciplinary anthologies of new media writing. Expressive Processing, his first solo-authored work with the press, will come out some time next year, but now is the time when the manuscript gets sent out for review by a small group of handpicked academic peers. Doug Sery, the editor at MIT, asked Noah who would be the ideal readers for this book. To Noah, the answer was obvious: the Grand Text Auto community, which encompasses not only many of Noah’s leading peers in the new media field, but also a slew of non-academic experts – writers, digital media makers, artists, gamers, game designers, etc. – who provide crucial alternative perspectives and valuable hands-on knowledge that can’t be gotten through more formal channels. Noah:

Blogging has already changed how I work as a scholar and creator of digital media. Reading blogs started out as a way to keep up with the field between conferences — and I soon realized that blogs also contain raw research, early results, and other useful information that never gets presented at conferences. But, of course, that’s just the beginning. We founded Grand Text Auto, in 2003, for an even more important reason: blogs can create community. And the communities around blogs can be much more open and welcoming than those at conferences and festivals, drawing in people from industry, universities, the arts, and the general public. Interdisciplinary conversations happen on blogs that are more diverse and sustained than any I’ve seen in person.

Given that ours is a field in which major expertise is located outside the academy (like many other fields, from 1950s cinema to Civil War history) the Grand Text Auto community has been invaluable for my work. In fact, while writing the manuscript for Expressive Processing I found myself regularly citing blog posts and comments, both from Grand Text Auto and elsewhere…. I immediately realized that the peer review I most wanted was from the community around Grand Text Auto.

Sery was enthusiastic about the idea (although he insisted that the traditional blind review process proceed alongside it) and so Noah contacted me about working together to adapt CommentPress to the task at hand.

The technical challenge was to integrate CommentPress into an existing blog template, applying its functionality selectively – in other words, to make it work for a specific group of posts rather than for all content in the site. We could have made a standalone web site dedicated to the book, but the idea was to literally weave sections of the manuscript into the daily traffic of the blog. From the beginning, Noah was very clear that this was the way it needed to work, insisting that the social and technical sides of the review process were inseparable. I’ve since come to appreciate how crucial this choice was for making a larger point about the value of blog-based communities in scholarly production, and moreover how elegantly it chimes with the central notions of Noah’s book: that form and content, process and output, can never truly be separated.


Up to this point, CommentPress has been an all-or-nothing deal. You can either have a whole site working with paragraph-level commenting, or not at all. In the technical terms of WordPress, its platform, CommentPress is a theme: a template for restructuring an entire blog to work with the CommentPress interface. What we’ve done – with the help of a talented WordPress developer named Mark Edwards, and invaluable guidance and insight from Jeremy Douglass of the Software Studies project at UC San Diego (and the Writer Response Theory blog) – is make CommentPress into a plugin: a program that enables a specific function on demand within a larger program or site. This is an important step for CommentPress, giving it a new flexibility that it has sorely lacked and acknowledging that it is not a one-size-fits-all solution.

Just to be clear, these changes are not yet packaged into the general CommentPress codebase, although they will be before too long. A good test run is still needed to refine the new model, and important decisions have to be made about the overall direction of CommentPress: whether from here it definitively becomes a plugin, or perhaps forks into two paths (theme and plugin), or somehow combines both options within a single package. If you have opinions on this matter, we’re all ears…

But the potential impact of this project goes well beyond the technical.

It represents a bold step by a scholarly press – one of the most distinguished and most innovative in the world – toward developing new procedures for vetting material and assuring excellence, and more specifically, toward meaningful collaboration with existing online scholarly communities to develop and promote new scholarship.

It seems to me that the presses that will survive the present upheaval will be those that learn to productively interact with grassroots publishing communities in the wild of the Web and to adopt the forms and methods they generate. I don’t think this will be a simple story of the blogosphere and other emerging media ecologies overthrowing the old order. Some of the older order will die off to be sure, but other parts of it will adapt and combine with the new in interesting ways. What’s particularly compelling about this present experiment is that it has the potential to be (perhaps now or perhaps only in retrospect, further down the line) one of these important hybrid moments – a genuine, if slightly tentative, interface between two publishing cultures.

Whether the MIT folks realize it or not (their attitude at the outset seems to be respectful but skeptical), this small experiment may contain the seeds of larger shifts that will redefine their trade. The most obvious changes wrought on publishing by the Internet, and the ones that get by far the most attention, are in the area of distribution and economic models. The net flattens distribution, making everyone a publisher, and radically undercuts the heretofore profitable construct of copyright and the whole system of information commodities. The effects are less clear, however, in those hardest to pin down yet most essential areas of publishing – the territory of editorial instinct, reputation, identity, trust, taste, community… These are things that the best print publishers still do quite well, even as their accounting departments and managing directors descend into panic about the great digital undoing. And these are things that bloggers and bookmarkers and other web curators, archivists and filterers are also learning to do well – to sift through the information deluge, to chart a path of quality and relevance through the incredible, unprecedented din.

This is the part of publishing that is most important, that transcends technological upheaval – you might say the human part. And there is great potential for productive alliances between print publishers and editors and the digital upstarts. By delegating half of the review process to an existing blog-based peer community, effectively plugging a node of his press into the Web-based communications circuit, Doug Sery is trying out a new kind of editorial relationship and exercising a new kind of editorial choice. Over time, we may see MIT evolve to take on some of the functions that blog communities currently serve, to start providing technical and social infrastructure for authors and scholarly collectives, and to play the valuable (and time-consuming) roles of facilitator, moderator and curator within these vast overlapping conversations. Fostering, organizing, designing those conversations may well become the main work of publishing and of editors.

I could go on, but better to hold off on further speculation and to just watch how it unfolds. The Expressive Processing peer review experiment begins today (the first actual manuscript section is here) and will run for approximately ten weeks and 100 thousand words on Grand Text Auto, with a new post every weekday during that period. At the end, comments will be sorted, selected and incorporated and the whole thing bundled together into some sort of package for MIT. We’re still figuring out how that part will work. Please go over and take a look and if a thought is provoked, join the discussion.

read this

An interesting experiment on Vimeo. See what’s going on?

Via IT IN place.

reading between the lines?

The NEA claims it wishes to “initiate a serious discussion” over the findings of its latest report, but the public statements from representatives of the Endowment have had a terse or caustic tone, such as in Sunil Iyengar’s reply to Nancy Kaplan. Another example is Mark Bauerlein’s letter to the editor in response to my December 7, 2007 Chronicle Review piece, “How Reading is Being Reimagined,” a letter in which Bauerlein seems unable or unwilling to elevate the discourse beyond branding me a “votary” of screen reading and suggesting that I “do some homework before passing opinions on matters out of [my] depth.”

One suspects that, stung by critical responses to the earlier Reading at Risk report (2004), the decision this time around was that the best defense is a good offense. Bauerlein chastises me for not matching data with data, that is, for failing to provide any quantitative documentation in support of various observations about screen reading and new media (not able to resist the opportunity for insult, he also suggests such indolence is only to be expected of a digital partisan). Yet data wrangling was not the focus of my piece, and I said as much in print: rather, I wanted to raise questions about the NEA’s report in the context of the history of reading, questions which have also been asked by Harvard scholar Leah Price in a recent essay in the New York Times Book Review.

If my work is lacking in statistical heavy mettle, the NEA’s description of reading proceeds as though the last three decades of scholarship by figures like Elizabeth Eisenstein, Harvey Graff, Anthony Grafton, Lisa Jardine, Bill Sherman, Adrian Johns, Roger Chartier, Peter Stallybrass, Patricia Crain, Lisa Gitelman, and many others simply does not exist. But this body of work has demolished the idea that reading is a stable or historically homogeneous activity, thereby ripping the support out from under the quaint notion that the codex book is the simple, self-consistent artifact it is presented as in the reports, while also documenting the numerous varieties of cultural anxiety that have attended the act of reading and questions over whether we’re reading not enough or too much.

It’s worth underscoring that the academic response to the NEA’s two reports has been largely skeptical. Why is this? After all, in the ivied circles I move in, everyone loves books, cherishes reading, and wants people to read more, in whatever venue or medium. I also know that’s true of the people at if:book (and thanks to Ben Vershbow, by the way, for giving me the opportunity to respond here). And yet we bristle at the data as presented by the NEA. Is it because, as academics, eggheads, and other varieties of bookwormish nerds and geeks we’re all hopelessly ensorcelled by the pleasures of problematizing and complicating rather than accepting hard evidence at face value? Herein lies the curious anti-intellectualism to which I think at least some of us are reacting, an anti-intellectualism that manifests superficially in the rancorous and dismissive tone that Bauerlein and Iyengar have brought to the very conversation they claim they sought to initiate, but anti-intellectualism which, at its root, is – just possibly – about a frustration that the professors won’t stop indulging their fancy theories and footnotes and ditzy digital rhetoric. (Too much book larnin’ going on up at the college? Is that what I’m reading between the lines?)

Or maybe I’m wrong about that last bit. I hope so. Because as I said in my Chronicle Review piece, there’s no doubt it’s time for a serious conversation about reading. Perhaps we can have a portion of it here on if:book.

Matthew Kirschenbaum

University of Maryland

Related: “the NEA’s misreading of reading”

NEA reading debate round 2: an exchange between sunil iyengar and nancy kaplan

Last week I received an email from Sunil Iyengar of the National Endowment for the Arts responding to Nancy Kaplan’s critique (published here on if:book) of the NEA’s handling of literacy data in its report “To Read or Not to Read.” I’m reproducing the letter followed by Nancy’s response.

Sunil Iyengar:

The National Endowment for the Arts welcomes a “careful and responsible” reading of the report, To Read or Not To Read, and the data used to generate it. Unfortunately, Nancy Kaplan’s critique (11/30/07) misconstrues the NEA’s presentation of Department of Education test data as a “distortion,” although all of the report’s charts are clearly and accurately labeled.

For example, in Charts 5A to 5D of the full report, the reader is invited to view long-term trends in the average reading score of students at ages 9, 13, and 17. The charts show test scores from 1984 through 2004. Why did we choose that interval? Simply because most of the trend data in the preceding chapters–starting with the NEA’s own study data featured in Chapter One–cover the same 20-year period. For the sake of consistency, Charts 5A to 5D refer to those years.

Dr. Kaplan notes that the Department of Education’s database contains reading score trends from 1971 onward. The NEA report also emphasizes this fact, in several places. In 2004, the report observes, the average reading score for 17-year-olds dipped back to where it was in 1971. “For more than 30 years…17-year-olds have not sustained improvements in reading scores,” the report states on p. 57. Nine-year-olds, by contrast, scored significantly higher in 2004 than in 1971.

Further, unlike the chart in Dr. Kaplan’s critique, the NEA’s Charts 5A to 5D explain that the “test years occurred at irregular intervals,” and each test year from 1984 to 2004 is provided. Also omitted from the critique’s reproduction are labels for the charts’ vertical axes, which provide 5-point rather than the 10-point intervals used by the Department of Education chart. Again, there is no mystery here. Five-point intervals were chosen to make the trends easier to read.

Dr. Kaplan makes another mistake in her analysis. She suggests that the NEA report is wrong to draw attention to declines in the average reading score of adult Americans of virtually every education level, and an overall decline in the percentage of adult readers who are proficient. But the Department of Education itself records these declines. In their separate reports, the NEA and the Department of Education each acknowledge that the average reading score of adults has remained unchanged. That’s because from 1992 to 2003, the percentage of adults with postsecondary education increased and the percentage who did not finish high school decreased. “After all,” the NEA report notes, “compared with adults who do not complete high school, adults with postsecondary education tend to attain higher prose scores.” Yet this fact in no way invalidates the finding that average reading scores and proficiency levels are declining even at the highest education levels.

“There is little evidence of an actual decline in literacy rates or proficiency,” Dr. Kaplan concludes. We respectfully disagree.

Sunil Iyengar

Director, Research & Analysis

National Endowment for the Arts

Nancy Kaplan:

I appreciate Mr. Iyengar’s engagement with issues at the level of data and am happy to acknowledge that the NEA’s report includes a single sentence on pages 55-56 with the crucial concession that over the entire period for which we have data, the average scale scores of 17-year-olds have not changed: “By 2004, the average scale score had retreated to 285, virtually the same score as in 1971, though not shown in the chart.” I will even concede the accuracy of the following sentence: “For more than 30 years, in other words, 17-year-olds have not sustained improvements in reading scores” [emphasis in the original]. What the report fails to note or account for, however, is that there actually was a period of statistically significant improvement in scores for 17-year-olds from 1971 to 1984. Although I did not mention it in my original critique, the report handles data from 13-year-olds in the same way: “the scores for 13-year-olds have remained largely flat from 1984-2004, with no significant change between the 2004 average score and the scores from the preceding seven test years. Although not apparent from the chart, the 2004 score does represent a significant improvement over the 1971 average – a four-point increase” (p. 56).

In other words, a completely accurate and honest assessment of the data shows that reading proficiency among 17-year-olds has fluctuated over the past 30 years, but has not declined over that entire period. At the same time, reading proficiency among 9-year-olds and 13-year-olds has improved significantly. Why does the NEA not state the case in the simple, accurate and complete way I have just written? The answer Mr. Iyengar proffers is consistency, but that response may be a bit disingenuous.

Plenty of graphs in the NEA report show a variety of time periods, so there is at best a weak rationale for choosing 1984 as the starting point for the graphs in question. Consistency, in this case, is surely less important than accuracy and completeness. Given the inferences the report draws from the data, then, it is more likely that the sample of data the NEA used in its representations was chosen precisely because, as Mr. Iyengar admits, that sample would make “the trends easier to read.” My point is that the “trends” the report wants to foreground are not the only trends in the data: truncating the data set makes other, equally important trends literally invisible. A single sentence in the middle of a paragraph cannot excuse the act of erasure here. As both Edward Tufte (The Visual Display of Quantitative Information) and Jacques Bertin (Semiology of Graphics), the two most prominent authorities on graphical representations of data, demonstrate in their seminal works on the subject, selective representation of data constitutes distortion of that data.

Similarly, labels attached to a graph, even when they state that the tests occurred at irregular intervals, do not substitute for representing the irregularity of the intervals in the graph itself (again, see Tufte and Bertin). To do otherwise is to turn disinterested analysis into polemic. “Regularizing” the intervals in the graphic representation distorts the data.

The NEA report wants us to focus on a possible correlation between choosing to read books in one’s leisure time, reading proficiency, and a host of worthy social and civic activities. Fine. But if the reading scores of 17-year-olds improved from 1971 to 1984 but there is no evidence that during the period of improvement these youngsters were reading more, the case the NEA is trying to build becomes shaky at best. Similarly, the reading scores of 13-year-olds improved from 1971 to 1984 but “have remained largely flat from 1984-2004 ….” Yet during that same period, the NEA report claims, leisure reading among 13-year-olds was declining. So what exactly is the hypothesis here – that sometimes declines in leisure reading correlate with declines in reading proficiency but sometimes such a decline is not accompanied by a decline in reading proficiency? I’m skeptical.

My critique is aimed at the management of data (rather than the ahistorical definition of reading the NEA employs, a somewhat richer and more potent issue joined by Matthew Kirschenbaum and others) because I believe that a crucial component of contemporary literacy, in its most capacious sense, includes the ability to understand the relationships between claims, evidence and the warrants for that evidence. The NEA’s data need to be read with great care and its argument held to a high scientific standard lest we promulgate worthless or wasteful public policy based on weak research.

I am a humanist by training and so have come to my appreciation of quantitative studies rather late in my intellectual life. I cannot claim to have a deep understanding of statistics, yet I know what “confounding factors” are. When the NEA report chooses to claim that the reading proficiency of adults is declining while at the same time ignoring the NCES explanation of the statistical paradox that explains the data, it is difficult to avoid the conclusion that the report’s authors are not engaging in a disinterested (that is, dispassionate) exploration of what we can know about the state of literacy in America today but are instead cherry-picking the elements that best suit the case they want to make.

Nancy Kaplan, Executive Director

School of Information Arts and Technologies

University of Baltimore
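Both letters turn on the same statistical point: adult reading scores can fall within every education level while the overall average stays flat, because the adult population shifted toward education levels that score higher (a composition effect, often discussed as Simpson’s paradox). The minimal Python sketch below illustrates only that mechanism. The population shares and mean scores in it are hypothetical, invented for illustration, and are not the NCES or NEA figures.

```python
# Illustration of a composition effect (Simpson's paradox).
# All shares and scores are HYPOTHETICAL, invented for this sketch;
# they are not taken from the NCES or NEA reports.

# education level -> (share of adult population, mean prose score)
survey_1992 = {
    "no_high_school": (0.25, 220.0),
    "high_school": (0.35, 270.0),
    "postsecondary": (0.40, 310.0),
}
survey_2003 = {
    "no_high_school": (0.20, 215.0),  # smaller share, lower score
    "high_school": (0.35, 265.0),     # same share, lower score
    "postsecondary": (0.45, 305.0),   # larger share, lower score
}


def overall_mean(survey):
    """Population-weighted mean: sum of share * group mean, normalized by total share."""
    total_share = sum(share for share, _ in survey.values())
    weighted = sum(share * mean for share, mean in survey.values())
    return weighted / total_share


def group_changes(before, after):
    """Change in mean score for each education level between two surveys."""
    return {level: after[level][1] - before[level][1] for level in before}


if __name__ == "__main__":
    for level, change in group_changes(survey_1992, survey_2003).items():
        print(f"{level}: {change:+.1f} points")
    print(f"overall 1992: {overall_mean(survey_1992):.1f}")
    print(f"overall 2003: {overall_mean(survey_2003):.1f}")
```

With these made-up numbers every education level drops five points, yet the overall mean moves by only about half a point. The sketch shows only that flat overall averages and within-group declines are arithmetically compatible.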

anatomy of a debate

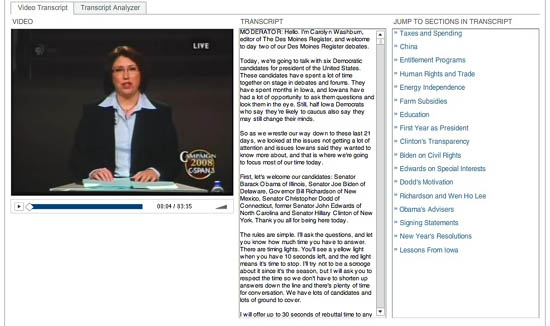

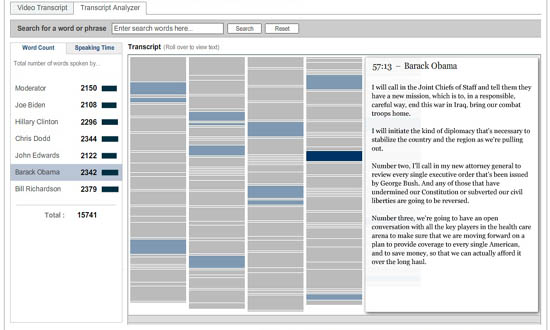

The New York Times continues to do quality interactive work online. Take a look at this recent feature that allows you to delve through video and transcript from the final Democratic presidential candidate debate in Iowa (Dec. 13, ’07). It begins with a lovely navigation tool that allows you to jump through the video topic by topic. Clicking text in the transcript (center column) or a topic from the list (right column) jumps you directly to the corresponding place in the video.

The second part is a “transcript analyzer,” which gives a visual overview of the debate. The text is laid out in miniature in a simple, clean schematic, navigable by speaker. Click a name in the left column and the speaker’s remarks are highlighted on the schematic. Hover over any block of text and that detail of the transcript pops up for you to read. You can also search the debate by keyword and see word counts and speaking times for each candidate.

These are fantastic tools – if only they were more widely available. These would be amazing extensions to CommentPress.

bothered about blogging etc

I’ve never liked seeing movies in groups. After two hours immersed in a fictional world I dread that moment when you emerge blinking into the light and instantly have to give your verdict.

Personally I want time to mull, and for me a pleasure of private reading is not needing to share my opinion – or know what it is – until I’m good and ready.

I have similar problems with blogging. With such brilliant and articulate colleagues knocking out posts for if:book on a regular basis, I must admit I get nervous about adding speedy contributions. And when I do write words I think might be worth sharing, I wonder if I really want to cast them adrift in such fast flowing waters.

bloggers together – ben vershbow of if:book and cory doctorow of boing boing

I bring this up because the networked book involves active readers happily sharing their thoughts online with others happy to read them.

I dread finding the margins of my e-reader crammed with the scrawlings of cleverclogs, even if I’m free to jump in on their arguments. Having said that, I’m convinced there’s immense potential in building communities of debate around interesting texts. There’s plenty of intelligence and generosity of spirit on the web to counteract the bilge and the bile.

Doris Lessing asks in her Nobel Prize acceptance speech,

“How will our lives, our way of thinking, be changed by the internet, which has seduced a whole generation with its inanities so that even quite reasonable people will confess that, once they are hooked, it is hard to cut free, and they may find a whole day has passed in blogging etc?”

Lessing’s dismay at addiction to the screen amongst the even quite reasonable is a reminder of the importance of working to heal the cultural divide between bookworms and book geeks. This will involve patience on either side as familiar arguments are replayed to wider audiences. We need to talk in plain English about issues that are so much more interesting and culturally fruitful than many even quite reasonable lovers of literature seem to think they are.

cinematic reading

Random House Canada underwrote a series of short videos, produced by the slick Toronto studio Crush Inc., riffing on Douglas Coupland’s new novel The Gum Thief. These were forwarded to me by Alex Itin, who described watching them as a kind of “cinematic reading.” Watch, you’ll see what he means. There are three basic storylines, each consisting of three clips. This one, from the “Glove Pond” sequence, is particularly clever in its use of old magazines:

All the videos are available here at Crush Inc. Or on Coupland’s YouTube page.

kindle maths 101

Chatting with someone from Random House’s digital division on the day of the Kindle release, I suggested that dramatic price cuts on e-editions – in other words, finally acknowledging that digital copies aren’t worth as much (especially when they come corseted in DRM) as physical hard copies – might be the crucial adjustment needed to at last blow open the digital book market. It seemed like a no-brainer to me that Amazon was charging way too much for its e-books (not to mention the Kindle itself). But upon closer inspection, it clearly doesn’t add up that way. Tim O’Reilly explains why:

…the idea that there’s sufficient unmet demand to justify radical price cuts is totally wrongheaded. Unlike music, which is quickly consumed (a song takes 3 to 4 minutes to listen to, and price elasticity does have an impact on whether you try a new song or listen to an old one again), many types of books require a substantial time commitment, and having more books available more cheaply doesn’t mean any more books read. Regular readers already often have huge piles of unread books, as we end up buying more than we have time for. Time, not price, is the limiting factor.

Even assuming the rosiest of scenarios, Kindle readers are going to be a subset of an already limited audience for books. Unless some hitherto untapped reader demographic comes out of the woodwork, gets excited about e-books, buys Kindles, and then significantly surpasses the average human capacity for book consumption, I fail to see how enough books could be sold to recoup costs and still keep prices low. And without lower prices, I don’t see a huge number of people going the Kindle route in the first place. And there’s the rub.

Even if you were to go as far as selling books like songs on iTunes at 99 cents a pop, it seems highly unlikely that people would be induced to buy a significantly greater number of books than they already are. There’s only so much a person can read. The iPod solved a problem for music listeners: carrying around all that music to play on your Discman or Walkman was a major pain. So a hard drive with earphones made a great deal of sense. It shouldn’t be assumed that readers have the same problem (spine-crushing textbook-stuffed backpacks notwithstanding). Do we really need an iPod for books?

UPDATE: Through subsequent discussion both here and off the blog, I’ve since come around 360 back to my original hunch. See comment.

We might, maybe (putting aside for the moment objections to the ultra-proprietary nature of the Kindle), if Amazon were to abandon the per copy idea altogether and go for a subscription model. (I’m just thinking out loud here – tell me how you’d adjust this.) Let’s say 40 bucks a month for full online access to the entire Amazon digital library, along with every major newspaper, magazine and blog. You’d have the basic cable option: all books accessible and searchable in full, as well as popular feedback functions like reviews and Listmania. If you want to mark a book up, share notes with other readers, clip quotes, save an offline copy, you could go “premium” for a buck or two per title (not unlike the current Upgrade option, although cheaper). Certain blockbuster titles or fancy multimedia pieces (once the Kindle’s screen improves) might be premium access only – like HBO or Showtime. Amazon could market other services such as book groups, networked classroom editions, book disaggregation for custom assembled print-on-demand editions or course packs.

This approach reconceives books as services, or channels, rather than as objects. The Kindle would be a gateway into a vast library that you can roam about freely, with access not only to books but to all the useful contextual material contributed by readers. Piracy isn’t a problem since the system is totally locked down and you can only access it on a Kindle through Amazon’s Whispernet. Revenues could be shared with publishers proportionately to traffic on individual titles. DRM and all the other insults that go hand in hand with trying to manage digital media like physical objects simply melt away.
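The revenue split floated in the paragraph above, with publishers paid in proportion to traffic on their titles, can be sketched in a few lines of Python. This is a minimal sketch under loudly stated assumptions: the subscriber count, the 50 percent publisher share, the title names, the publisher names and the traffic figures are all hypothetical, and nothing here reflects how Amazon actually pays publishers. Only the 40-dollar monthly fee comes from the post.

```python
from collections import defaultdict

MONTHLY_FEE = 40.0     # the "40 bucks a month" subscription floated in the post
PUBLISHER_SHARE = 0.5  # HYPOTHETICAL fraction of subscription revenue passed to publishers


def publisher_payouts(subscribers, title_traffic, title_publisher):
    """Split one month's publisher pool across publishers in proportion to
    the traffic (e.g. page views) on each of their titles.

    Returns an empty dict when there is no traffic to apportion.
    """
    total_traffic = sum(title_traffic.values())
    if total_traffic == 0:
        return {}
    pool = subscribers * MONTHLY_FEE * PUBLISHER_SHARE
    payouts = defaultdict(float)
    for title, hits in title_traffic.items():
        # Each title earns its traffic fraction of the pool; credit its publisher.
        payouts[title_publisher[title]] += pool * hits / total_traffic
    return dict(payouts)


if __name__ == "__main__":
    traffic = {"Title A": 600, "Title B": 300, "Title C": 100}  # hypothetical
    owners = {"Title A": "Press X", "Title B": "Press Y", "Title C": "Press X"}
    print(publisher_payouts(500, traffic, owners))
```

Run as a script, this prints `{'Press X': 7000.0, 'Press Y': 3000.0}`. Paying by traffic rather than per copy is what lets the per-copy pricing question drop out; whether the pool is big enough to satisfy publishers is left entirely to the hypothetical parameters.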

* * * * *

On a related note, Nick Carr talks about how the Kindle, despite its many flaws, suggests a post-Web2.0 paradigm for hardware:

If the Kindle is flawed as a window onto literature, it offers a pretty clear view onto the future of appliances. It shows that we’re rapidly approaching the time when centrally stored and managed software and data are seamlessly integrated into consumer appliances – all sorts of appliances.

The problem with “Web 2.0,” as a concept, is that it constrains innovation by perpetuating the assumption that the web is accessed through computing devices, whether PCs or smartphones or game consoles. As broadband, storage, and computing get ever cheaper, that assumption will be rendered obsolete. The internet won’t be so much a destination as a feature, incorporated into all sorts of different goods in all sorts of different ways. The next great wave in internet innovation, in other words, won’t be about creating sites on the World Wide Web; it will be about figuring out creative ways to deploy the capabilities of the World Wide Computer through both traditional and new physical products, with, from the user’s point of view, “no computer or special software required.”

That the Kindle even suggests these ideas signals a major advance over its competitors – the doomed Sony Reader and the parade of failed devices that came before. What Amazon ought to be shooting for, however, (and almost is) is not an iPod for reading – a digital knapsack stuffed with individual e-books – but rather an interface to a networked library.