Last night I attended a fascinating panel discussion at the American Bar Association on the legality of Google Book Search. In many ways, this was the debate made flesh. Making the case against Google were high-level representatives from the two entities that have brought suit, the Authors’ Guild (Executive Director Paul Aiken) and the Association of American Publishers (VP for legal counsel Allan Adler). It would have been exciting if Google, in turn, had sent representatives to make their case, but instead we had two independent commentators, law professor and blogger Susan Crawford and Cameron Stracher, also a law professor and writer. The discussion was vigorous, at times heated — in many ways a preview of arguments that could eventually be aired (albeit under a much stricter clock) in front of federal judges.

The lawsuits in question center on whether Google’s scanning of books and presenting tiny snippet quotations online for keyword searches is, as Google claims, fair use. As I understand it, the use in question is the initial scanning of full texts of copyrighted books held in the collections of partner libraries. The fair use defense hinges on this initial full scan being the necessary first step before the “transformative” use of the texts, namely unbundling the book into snippets generated on the fly in response to user search queries.

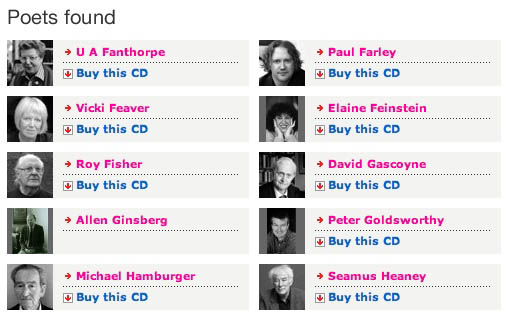

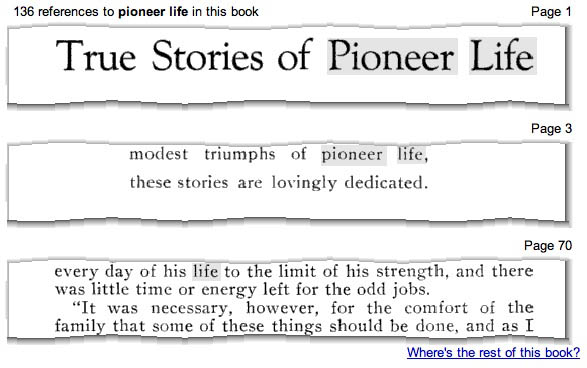

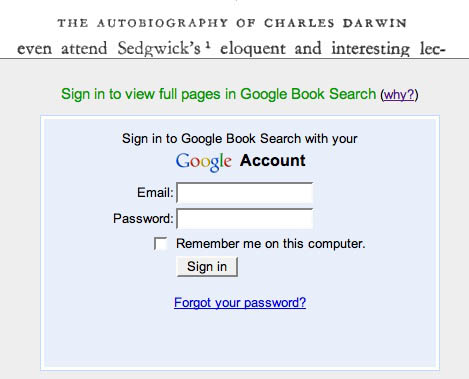

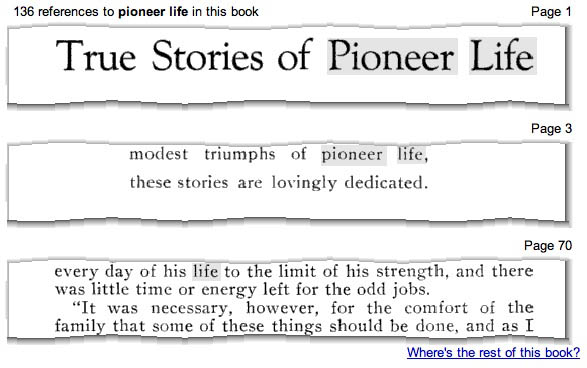

…in case you were wondering what snippets look like

At first, the conversation remained focused on this question, and during that time it seemed that Google was winning the debate. The plaintiffs’ arguments seemed weak and a little desperate. Aiken used carefully scripted language about not being against online book search, just wanting it to be licensed, quipping “we’re just throwing a little gravel in the gearbox of progress.” Adler was a little more strident, calling Google “the master of misdirection,” using the promise of technological dazzlement to turn public opinion against the legitimate grievances of publishers (of course, this will be settled by judges, not by public opinion). He did score one good point, though, saying Google has betrayed the weakness of its fair use claim in the way it has continually revised its description of the program.

Almost exactly one year ago, Google unveiled its “library initiative” only to re-brand it several months later as a “publisher program” following a wave of negative press. This, however, did little to ease tensions and eventually Google decided to halt all book scanning (until this past November) while they tried to smooth things over with the publishers. Even so, lawsuits were filed, despite Google’s offer of an “opt-out” option for publishers, allowing them to request that certain titles not be included in the search index. This more or less created an analog to the “implied consent” principle that legitimates search engines caching web pages with “spider” programs that crawl the net looking for new material.

In that case, there is a machine-to-machine communication taking place: web page owners are free to insert instructions (a robots.txt file, for example) that tell spiders not to crawl or cache their pages, or can simply place certain content behind a firewall. By offering an “opt-out” option to publishers, Google enables essentially the same sort of communication. Adler’s point (and this was echoed more succinctly by a smart question from the audience) was that if Google’s fair use claim is so air-tight, then why offer this middle ground? Why all these efforts to mollify publishers without actually negotiating a license? (I am definitely concerned that Google’s efforts to quell a negative reaction from the publishing industry, one it probably should have anticipated, will end up undercutting its legal position.)
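For the curious, the web-crawler version of “opt-out” can be sketched in a few lines. This is only an illustration, assuming a site publishes a robots.txt file: the file contents, the bot name `ExampleBot`, and the example.com URLs are all made up, and `may_crawl` is a hypothetical helper built on Python’s standard `urllib.robotparser` module, not anything Google is known to run.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that a site owner might publish (an assumption
# for illustration): every crawler is asked to stay out of /private/.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def may_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() takes the file's lines, so no network request is needed here.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in (
        "https://example.com/public/index.html",
        "https://example.com/private/book.html",
    ):
        print(url, may_crawl(ROBOTS_TXT, "ExampleBot", url))
```

The point of the sketch is that the default is permission: a well-behaved spider crawls anything it has not been told to avoid, which is the “implied consent” logic that Google’s publisher opt-out mirrors.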

Crawford came back with some nice points, most significantly that the publishers were trying to make a pretty egregious “double dip” into the value of their books. Google, by creating a searchable digital index of book texts — “a card catalogue on steroids,” as she put it — and even generating revenue by placing ads alongside search results, is making a transformative use of the published material and should not have to seek permission. Google had a good idea. And it is an eminently fair use.

And it’s not Google’s idea alone. They just had it first and are using it to gain a competitive advantage over their search engine rivals, who, in their turn, have tried to get in on the game with the Open Content Alliance (which, incidentally, has decided not to make a stand on fair use as Google has, and is doing all its scanning and indexing in the context of license agreements). Publishers, too, are welcome to build their own databases and to make them crawlable by search engines. Earlier this week, HarperCollins announced it would be doing exactly that with about 20,000 of its titles. Aiken and Adler say that if anyone can scan books and make a search engine, then all hell will break loose and millions of digital copies will be leaked onto the web. Crawford shot back that this lawsuit is not about net security issues; it is about fair use.

But once the security cat was let out of the bag, the room turned noticeably against Google (perhaps due to a preponderance of publishing lawyers in the audience). Aiken and Adler worked hard to stir up anxiety about rampant ebook piracy, even as Crawford repeatedly tried to keep the discussion on course. It was very interesting to hear, right from the horse’s mouth, that the Authors’ Guild and AAP are both convinced that the ebook market, tiny as it currently is, is within a few years of exploding, pending the release of some sort of iPod-like gadget for text. At that point, they say, Google will have gained a huge strategic advantage off the back of appropriated content.

Their argument hinges on the fourth determining factor in the fair use exception, which evaluates “the effect of the use upon the potential market for or value of the copyrighted work.” So the publishers are suing because Google might be cornering a potential market!!! (Crawford goes further into this in her wrap-up.) Of course, if Google wanted to go into the ebook business using the material in their database, there would have to be a licensing agreement; otherwise they really would be pirating. But the suits are not about a future market, they are about creating a search service, which should be ruled fair use. If publishers are so worried about the future ebook market, then they should start planning for that business.

To echo Crawford, I sincerely hope these cases reach the court and are not settled beforehand. Larger concerns about Google’s expansionist program aside, I think they have made a very brave stand on the principle of fair use, the essential breathing space carved out within our over-extended copyright laws. Crawford reminded the room that intellectual property is NOT like physical property, over which the owner has nearly unlimited rights. Copyright is a “temporary statutory monopoly” originally granted (“with hesitation,” Crawford adds) in order to incentivize creative expression and the production of ideas. The internet scares the old-guard publishing industry because it poses so many threats to the security of their product. These threats are certainly significant, but they are not the subject of these lawsuits, nor are they Google’s, or any search engine’s, fault. The rise of the net should not become a pretext for limiting or abolishing fair use.

Thus far, LibriVox, which has only been up for a few months, has recorded about 30 titles, relying on dozens of volunteers. The website promotes the project as the “acoustical liberation of the public domain” and claims that the ultimate goal is to liberate all public domain works of literature. For now, titles cataloged on the website include L. Frank Baum’s The Wizard of Oz, Joseph Conrad’s The Secret Agent and the U.S. Constitution.


The archive naturally focuses on British poets, but offers a significant selection of English-language writers from the U.S. and the British Commonwealth countries. Seamus Heaney is serving as president of the archive.
