A federal judge said Tuesday he intends to require Google Inc. to turn over some information to the Department of Justice . . .

progressive people are likely to defend Google against the encroachment of the govt. however, while i am in complete agreement with the sentiment that Google shouldn’t be giving information to the government about what people search for, i think the debate needs to be shifted in a dramatically different direction. the really important question (for the long term health of society) isn’t “should Google have to surrender information to this or any other government” but “why should Google have such sensitive information in the first place?”

if Google’s goal were simply, as they say, “to organize the world’s information and make it universally accessible and useful,” then there really wouldn’t be a rationale for collecting information on what individuals search for. in reality, of course, Google’s “reason for being” is to deliver people to advertisers, hence the need to collect all that data about us.

try this for a thought experiment. if Google continues to collect “all the world’s information” how long will it be before Google is indistinguishable from “God.” do we really want to give this much power to a private corporation whose first allegiance is to shareholders rather than the body politic?

what i can’t figure out is: why isn’t there a movement to develop a nonprofit, open source search engine? we have mozilla, we have wikipedia, we have linux. where is the people’s search engine? isn’t it time?

cultural environmentalism symposium at stanford

Ten years ago, with the web just a screaming infant in its cradle, Duke law scholar James Boyle proposed “cultural environmentalism” as an overarching metaphor, modeled on the successes of the green movement, that might raise awareness of the need for a balanced and just intellectual property regime for the information age. A decade on, I think it’s safe to say that a movement did emerge (at least on the digital front), drawing on prior efforts like the General Public License for software and the Electronic Frontier Foundation, and giving birth to newer public interest groups like Creative Commons. More recently, new threats to cultural freedom and innovation have been identified in the lobbying by internet service providers for greater control of network infrastructure. Where do we go from here? Last month, writing in the Financial Times, Boyle looked back at the genesis of his idea:

We were writing the ground rules of the information age, rules that had dramatic effects on speech, innovation, science and culture, and no one – except the affected industries – was paying attention.

My analogy was to the environmental movement which had quite brilliantly made visible the effects of social decisions on ecology, bringing democratic and scholarly scrutiny to a set of issues that until then had been handled by a few insiders with little oversight or evidence. We needed an environmentalism of the mind, a politics of the information age.

Might the idea of conservation — of water, air, forests and wild spaces — be applied to culture? To the public domain? To the millions of “orphan” works that are in copyright but out of print, or with no contactable creator? Might the internet itself be considered a kind of reserve (one that must be kept neutral) — a place where cultural wildlife are free to live, toil, fight and ride upon the backs of one another? What are the dangers and fallacies contained in this metaphor?

Ray and I have just set up shop at a fascinating two-day symposium — Cultural Environmentalism at 10 — hosted at Stanford Law School by Boyle and Lawrence Lessig where leading intellectual property thinkers have converged to celebrate Boyle’s contributions and to collectively assess the opportunities and potential pitfalls of his metaphor. Impressions and notes soon to follow.

sophie is coming!

Though we haven’t talked much about it on the blog, the Institute is dedicated to practice as well as the theory we regularly spout here. In July, the Institute will release Sophie, our first piece of software. Sophie is an open-source platform for creating and reading electronic books for the networked environment. It will facilitate the construction of documents that use multimedia and time in ways that are difficult, if not impossible, with today’s software. We spend a fair amount of time on this blog talking about what electronic books and documents should do. Hopefully, many of these ideas will be realized in Sophie.

A beta release for Sophie will be upon us before we know it, and readers of this blog will be hearing (and seeing) more about it in the future. We’re excited about what we’ve seen Sophie do so far; soon you’ll be able to see too. Until then, we can offer you this 13-page PDF that attempts to explain exactly what Sophie is, the problems that it was created to solve, and what it will do. An HTML version of this will be arriving shortly, and there will soon be a Sophie version. There’s also, should you be especially curious, a second 5-page PDF that explains Sophie’s pedigree: a quick history of some of the ideas and software that informed Sophie’s design.

presidents’ day

Few would disagree that Presidents’ Day, though in theory a celebration of the nation’s highest office, is actually one of our blandest holidays — not so much about history as the resuscitation of commerce from the post-holiday slump. Yesterday, however, brought a refreshing change.

Spending the afternoon at the institute was Holly Shulman, a historian from the University of Virginia well known among digital scholarship circles as the force behind the Dolley Madison Project — a comprehensive online portal to the life, letters and times of one of the great figures of the early American republic. So, for once we actually talked about presidential history on Presidents’ Day — only, in this case from the fascinating and chronically under-studied spousal perspective.

Shulman came to discuss possible collaboration on a web-based history project that would piece together the world of America’s founding period, specifically as experienced and influenced by its leading women. The question, in terms of form, was how to break out of the mold of traditional web archives, which tend to be static and exceedingly hierarchical, and tap more fully into the energies of the network. We’re talking about something you might call open source scholarship: new collaborative methods that take cues from popular social software experiments like Wikipedia, Flickr and del.icio.us yet add new layers and structures that would better ensure high standards of scholarship. In other words, the best of both worlds.

Shulman lamented that the current generation of historians are highly resistant to the idea of electronic publication as anything more than supplemental to print. Even harder to swallow is the open ethos of Wikipedia, commonly regarded as a threat to the hierarchical authority and medieval insularity of academia.

Again, we’re reminded of how fatally behind the times the academy is in terms of communication — both communication among scholars and with the larger world. Shulman’s eyes lit up as we described the recent surge on the web of social software and bottom-up organizational systems like tagging that could potentially create new and unexpected avenues into history.

A small example that recurred in our discussion: Dolley Madison wrote eloquently on grief, mourning and widowhood, yet few would know to seek out her perspective on these matters. Think of how something like tagging, still in an infant stage of development, could begin to solve such a problem, helping scholars, students and general readers unlock the multiple facets of complex historical figures like Madison, and deepening our collective knowledge of subjects, like death and war, that have historically been dominated by men’s accounts. Modest as it is, the example points toward something grand.

can there be a compromise on copyright?

The following is a response to a comment made by Karen Schneider on my Monday post on libraries and DRM. I originally wrote this as just another comment, but as you can see, it’s kind of taken on a life of its own. At any rate, it seemed to make sense to give it its own space, if for no other reason than that it temporarily sidelined something else I was writing for today. It also has a few good quotes that might be of interest. So, Karen said:

I would turn back to you and ask how authors and publishers can continue to be compensated for their work if a library that would buy ten copies of a book could now buy one. I’m not being reactive, just asking the question–as a librarian, and as a writer.

This is a big question, perhaps the biggest, since economics will define the parameters of much that is being discussed here. How do we move from an old economy of knowledge based on the trafficking of intellectual commodities to a new economy where value is placed not on individual copies of things that, as a result of new technologies, are effortlessly copiable, but rather on access to networks of content and the quality of those networks? The question is brought into particularly stark relief when we talk about libraries, which (correct me if I’m wrong) have always been more concerned with the pure pursuit and dissemination of knowledge than with the economics of publishing.

Consider, as an example, the photocopier — in many ways a predecessor of the world wide web in that it is designed to deconstruct and multiply documents. Photocopiers have been unbundling books in libraries long before there was any such thing as Google Book Search, helping users break through the commodified shell to get at the fruit within.

I know there are some countries in Europe that funnel a share of proceeds from library photocopiers back to the publishers, and this seems to be a reasonably fair compromise. But the role of the photocopier in most libraries of the world is more subversive, gently repudiating, with its low hum, sweeping light, and clackety trays, the idea that there can really be such a thing as intellectual property.

That being said, few would dispute the right of an author to benefit economically from his or her intellectual labor; we just have to ask whether the current system is really serving the authors’ interest, let alone the public interest. New technologies have released intellectual works from the restraints of tangible property, making them easily accessible, eminently exchangeable and never out of print. This should, in principle, elicit a hallelujah from authors, or at least from the many who have written works that, while possessed of intrinsic value, have not succeeded in their role as commodities.

But utopian visions of an intellectual gift economy will ultimately fail to nourish writers who must survive in the here and now of a commercial market. Though peer-to-peer gift economies might turn out in the long run to be financially lucrative, and in unexpected ways, we can’t realistically expect everyone to hold their breath and wait for that to happen. So we find ourselves at a crossroads where we must soon choose as a society either to clamp down (to preserve existing business models), liberalize (to clear the field for new ones), or compromise.

In her essay “Books in Time,” Berkeley historian Carla Hesse gives a wonderful overview of a similar debate over intellectual property that took place in 18th-century France, when liberal-minded philosophes, most notably Condorcet, railed against the state-sanctioned Paris printing monopolies, demanding universal access to knowledge for all humanity. To Condorcet, freedom of the press meant not only freedom from censorship but freedom from commerce, since ideas arise not from men but through men from nature (how can you sell something that is universally owned?). Things finally settled down in France after the revolution, and the country (and the West) embarked on a historic compromise that laid the foundations for what Hesse calls “the modern literary system”:

The modern “civilization of the book” that emerged from the democratic revolutions of the eighteenth century was in effect a regulatory compromise among competing social ideals: the notion of the right-bearing and accountable individual author, the value of democratic access to useful knowledge, and faith in free market competition as the most effective mechanism of public exchange.

Barriers to knowledge were lowered. A system of limited intellectual property rights was put in place that incentivized production and elevated the status of writers. And by and large, the world of ideas flourished within a commercial market. But the question remains: can we reach an equivalent compromise today? And if so, what would it look like?  Creative Commons has begun to nibble around the edges of the problem, but love it as we may, it does not fundamentally alter the status quo, focusing as it does primarily on giving creators more options within the existing copyright system.

Which is why free software guru Richard Stallman announced in an interview the other day his unqualified opposition to the Creative Commons movement, explaining that while some of its licenses meet the standards of open source, others are overly conservative, rendering the project bunk as a whole. For Stallman, ever the iconoclast, it’s all or nothing.

But returning to our theme of compromise, I’m struck again by this idea of a tax on photocopiers, which suggests a kind of micro-economy where payments are made automatically and seamlessly in proportion to a work’s use. Someone who has done a great deal of thinking about such a solution (though on a much more ambitious scale than library photocopiers) is Terry Fisher, an intellectual property scholar at Harvard who has written extensively on practicable alternative copyright models for the music and film industries (Ray and I first encountered Fisher’s work when we heard him speak at the Economics of Open Content Symposium at MIT last month).

The following is an excerpt from Fisher’s 2004 book, “Promises to Keep: Technology, Law, and the Future of Entertainment”, that paints a relatively detailed picture of what one alternative copyright scheme might look like. It’s a bit long, and as I mentioned, deals specifically with the recording and movie industries, but it’s worth reading in light of this discussion since it seems it could just as easily apply to electronic books:

….we should consider a fundamental change in approach…. replace major portions of the copyright and encryption-reinforcement models with a variant of….a governmentally administered reward system. In brief, here’s how such a system would work. A creator who wished to collect revenue when his or her song or film was heard or watched would register it with the Copyright Office. With registration would come a unique file name, which would be used to track transmissions of digital copies of the work. The government would raise, through taxes, sufficient money to compensate registrants for making their works available to the public. Using techniques pioneered by American and European performing rights organizations and television rating services, a government agency would estimate the frequency with which each song and film was heard or watched by consumers. Each registrant would then periodically be paid by the agency a share of the tax revenues proportional to the relative popularity of his or her creation. Once this system were in place, we would modify copyright law to eliminate most of the current prohibitions on unauthorized reproduction, distribution, adaptation, and performance of audio and video recordings. Music and films would thus be readily available, legally, for free.

Painting with a very broad brush…., here would be the advantages of such a system. Consumers would pay less for more entertainment. Artists would be fairly compensated. The set of artists who made their creations available to the world at large–and consequently the range of entertainment products available to consumers–would increase. Musicians would be less dependent on record companies, and filmmakers would be less dependent on studios, for the distribution of their creations. Both consumers and artists would enjoy greater freedom to modify and redistribute audio and video recordings. Although the prices of consumer electronic equipment and broadband access would increase somewhat, demand for them would rise, thus benefiting the suppliers of those goods and services. Finally, society at large would benefit from a sharp reduction in litigation and other transaction costs.

While I’m uncomfortable with the idea of any top-down, governmental solution, this certainly provides food for thought.
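Stripped of its politics, the payout rule in Fisher’s excerpt is a proportional split: each registrant’s share of the tax pool equals that work’s estimated use divided by the estimated use of all registered works. Below is a minimal Python sketch of just that arithmetic. The function name `allocate_rewards`, the work IDs and the figures are all hypothetical, and the sketch ignores everything genuinely hard about such a scheme (measuring use, gaming, administrative costs).

```python
def allocate_rewards(pool: float, usage: dict[str, int]) -> dict[str, float]:
    """Split a tax-funded pool among registered works in proportion to estimated use.

    Hypothetical sketch: `usage` maps each registered work's ID to an
    agency's estimate of how often that work was heard or watched.
    """
    total = sum(usage.values())
    if total == 0:
        # No recorded use at all, so no registrant is owed anything.
        return {work: 0.0 for work in usage}
    # Each work's payment = pool * (its use / use of all registered works).
    return {work: pool * count / total for work, count in usage.items()}


# Hypothetical figures: a pool of 1,000 units and three registered works.
payouts = allocate_rewards(1000.0, {"song-a": 600, "film-b": 300, "song-c": 100})
print(payouts)  # {'song-a': 600.0, 'film-b': 300.0, 'song-c': 100.0}
```

The same shape would fit the library photocopier levy, with pages copied standing in for plays or viewings.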

wikipedia, lifelines, and the packaging of authority

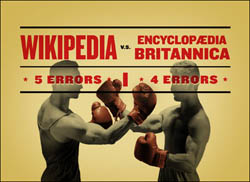

In a nice comment in yesterday’s Times, “The Nitpicking of the Masses vs. the Authority of the Experts,” George Johnson revisits last month’s Seigenthaler smear episode and the Nature magazine Wikipedia-Britannica comparison, and decides to place his long-term bets on the open-source encyclopedia:

It seems natural that over time, thousands, then millions of inexpert Wikipedians – even with an occasional saboteur in their midst – can produce a better product than a far smaller number of isolated experts ever could.

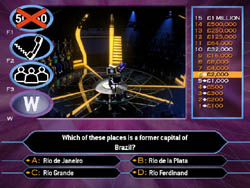

Reading it, a strange analogy popped into my mind: “Who Wants to Be a Millionaire.” Yes, the game show. What does it have to do with encyclopedias, the internet and the re-mapping of intellectual authority? I’ll try to explain. “Who Wants to Be a Millionaire” is a simple quiz show, very straightforward, like “Jeopardy” or “The $64,000 Question.” A single contestant answers a series of multiple choice questions, and with each question the money stakes rise toward a million-dollar jackpot. The higher the stakes, the harder the questions (and some seriously overdone lighting and music are added for maximum stress). There is a recurring moment in the game when the contestant’s knowledge fails and they have the option of using one of three “lifelines” that have been allotted to them for the show.

The first lifeline (and these can be used in any order) is the 50:50, which simply reduces the number of possible answers from four to two, thereby doubling your chances of selecting the correct one: a simple jiggering of probabilities. The other two are more interesting. The second lifeline is a telephone call to a friend or relative at home who is given 30 seconds to come up with the answer to the stumper question. This is a subtler kind of probability, since it involves a personal relationship. It deals with who you trust, who you feel you can rely on. Last, and my favorite, is the “ask the audience” lifeline, in which the crowd in the studio is surveyed and hopefully musters a clear majority behind one of the four answers. Here, the probability issue gets even more intriguing. Your potential fortune is riding on the knowledge of a room full of strangers.

In most respects, “Who Wants to Be a Millionaire” is just another riff on the classic quiz show genre, but the lifeline option pegs it in time, providing a clue about its place in cultural history. The perceptive game show anthropologist would surely recognize that the lifeline is all about the network. It’s what gives “Millionaire” away as a show from around the time of the tech bubble in the late 90s — manifestly a network-era program. Had it been produced in the 50s, the lifeline option would have been more along the lines of “ask the professor!” Lights rise on a glass booth containing a mustached man in a tweed jacket sucking on a pipe. Our cliché of authority. But “Millionaire” turns not to the tweedy professor in the glass booth (substitute ivory tower) but rather to the swarming mound of ants in the crowd.

And that’s precisely what we do when we consult Wikipedia. It isn’t an authoritative source in the professor-in-the-booth sense. It’s more lifeline number 3: hive mind, emergent intelligence, smart mobs (there is no shortage of colorful buzzwords to describe it). We’ve always had lifeline number 2. It’s who you know. The friend or relative on the other end of the phone line. Or think of the whispered exchange between students in the college library reading room, or late-night study in the dorm. Suddenly you need a quick answer, an informal gloss on a subject. You turn to your friend across the table, or sprawled on the couch eating Twizzlers: When was the Glorious Revolution again? Remind me, what’s the Uncertainty Principle?

With Wikipedia, this friend factor is multiplied by millions: the live studio audience of the web. This is the lifeline number 3, or network, model of knowledge. Individual transactions may be less authoritative, pound for pound, paragraph for paragraph, than individual transactions with the professors. But as an overall system to get you through a bit of reading, iron out a wrinkle in a conversation, or patch over a minor factual uncertainty, it works quite well. And being free and informal, it’s what we’re more inclined to turn to first, much more about the process of inquiry than the polished result. As Danah Boyd puts it in an excellently measured defense of Wikipedia, it “should be the first source of information, not the last. It should be a site for information exploration, not the definitive source of facts.” Wikipedia advocates and critics alike ought to acknowledge this distinction.

So, having acknowledged it, can we then broker a truce between Wikipedia and Britannica? Can we just relax and have the best of both worlds? I’d like that, but in the long run it seems that only one can win, and if I were a betting man, I’d have to bet with Johnson. Britannica is bound for obsolescence. A couple of generations hence (or less), who will want it? How will it keep up with this larger, far more dynamic competitor that is already roughly equal in quality in certain crucial areas?

Just as the printing press eventually drove the monastic scriptoria out of business, Wikipedia’s free market of knowledge, with all its abuses and irregularities, its palaces and slums, will outperform Britannica’s centralized command economy, with its neat, cookie-cutter housing slabs, its fair, dependable, but ultimately less dynamic, system. But, to stretch the economic metaphor just a little further before it breaks, it’s doubtful that the free market model will remain unregulated for long. At present, the world is beginning to take notice of Wikipedia. A growing number are championing it, but for most, it is more a grudging acknowledgment, a recognition that, for better or for worse, what’s going on with Wikipedia is significant and shouldn’t be ignored.

Eventually we’ll pass from the current phase into widespread adoption. We’ll realize that Wikipedia, being an open-source work, can be repackaged in any conceivable way, for profit even, with no legal strings attached (it already has been on sites like about.com and thousands — probably millions — of spam and link farms). As Lisa intimated in a recent post, Wikipedia will eventually come in many flavors. There will be commercial editions, vetted academic editions, handicap-accessible editions. Darwinist editions, creationist editions. Google, Yahoo and Amazon editions. Or, in the ultimate irony, Britannica editions! (If you can’t beat ’em…)

All the while, the original Wikipedia site will carry on as the sprawling community garden that it is. The place where a dedicated minority take up their clippers and spades and tend the plots. Where material is cultivated for packaging. Right now Wikipedia serves best as an informal lifeline, but soon enough, people will begin to demand something more “authoritative,” and so more will join in the effort to improve it. Some will even make fortunes repackaging it in clever ways for which people or institutions are willing to pay. In time, we’ll likely all come to view Wikipedia, or its various spin-offs, as a resource every bit as authoritative as Britannica. But when this happens, it will no longer be Wikipedia.

Authority, after all, is a double-edged sword, essential in the pursuit of truth, but dangerous when it demands that we stop asking questions. What I find so thrilling about the Wikipedia enterprise is that it is so process-oriented, that its work is never done. The minute you stop questioning it, stop striving to improve it, it becomes a museum piece that tells the dangerous lie of authority. Even those of us who do not take part in the editorial gardening, who rely on it solely as lifeline number 3, feel the crowd rise up to answer our query; we take the knowledge it gives us, but not (unless we are lazy) without a grain of salt. The work is never done. Crowds can be wrong. But we were not asking for all doubts to be resolved; we wanted simply to keep moving, to keep working. Sometimes authority is just a matter of packaging, and the packaging bonanza will soon commence. But I hope we don’t lose the original Wikipedia, the rowdy community garden, lifeline number 3: a place that keeps you on your toes, that resists tidy packages.

Wikipedia to consider advertising

The London Times just published an interview with Wikipedia founder Jimmy Wales in which he entertains the idea of carrying ads. This mention is likely to generate an avalanche of discussion about the commercialization of open-source resources. While i would love to see Wikipedia stay out of the commercial realm, it’s just not likely. Yahoo, Google and other big companies are going to commercialize Wikipedia anyway, so taking ads is likely to end up a no-brainer. As i mentioned in my comment on Lisa’s earlier post, this is going to happen as long as the overall context is defined by capitalist relations. Presuming that the web can be developed in a cooperative, non-capitalist way without fierce competition and push-back from the corporations who control the web’s infrastructure seems naive to me.

google print on deck at radio open source

Open Source, the excellent public radio program (not to be confused with “Open Source Media”) that taps into the blogosphere to generate its shows, has been chatting with me about putting together an hour on the Google library project. Open Source is a unique hybrid, drawing on the best qualities of the blogosphere — community, transparency, collective wisdom — to produce an otherwise traditional program of smart talk radio. As host Christopher Lydon puts it, the show is “fused at the brain stem with the world wide web.” Or better, it “uses the internet to be a show about the world.”

The Google show is set to air live this evening at 7pm (ET) (they also podcast). It’s been fun working with them behind the scenes, trying to figure out the right guests and questions for the ideal discussion on Google and its bookish ambitions. My exchange has been with Brendan Greeley, the Radio Open Source “blogger-in-chief” (he’s kindly linked to us today on their site). We agreed that the show should avoid getting mired in the usual copyright-focused news peg — publishers vs. Google etc. — and focus instead on the bigger questions. At my suggestion, they’ve invited Siva Vaidhyanathan, who wrote the wonderful piece in the Chronicle of Higher Ed. that I talked about yesterday (see bigger questions). I’ve also recommended our favorite blogger-librarian, Karen Schneider (who has appeared on the show before), science historian George Dyson, who recently wrote a fascinating essay on Google and artificial intelligence, and a bunch of cybertext studies people: Matthew G. Kirschenbaum, N. Katherine Hayles, Jerome McGann and Johanna Drucker. If all goes well, this could end up being a very interesting hour of discussion. Stay tuned.

UPDATE: Open Source just got a hold of Nicholas Kristof to do an hour this evening on Genocide in Sudan, so the Google piece will be pushed to next week.

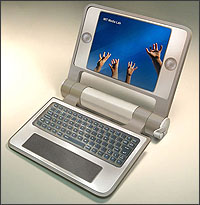

hundred dollar laptops may make good table lamps

“Demo or die.” That was the creed of the MIT Media Lab in the glory days — days of ferment that produced important, foundational work in interactive media. Well, yesterday at the World Summit on the Information Society in Tunisia, where Nicholas Negroponte and Kofi Annan were unveiling the prototype of the 100 dollar laptop, the demo died. Or rather, the demo just didn’t happen.

As it turns out, Negroponte wasn’t able to get past the screen lock on the slick lime-green device, so the mob of assembled journalists and technophiles had to accept the 100 dollar gospel on faith, making do with touching anecdotes about destitute families huddled in wonder around their child’s new laptop, the brightest source of light in their tiny hovel. All told, an inauspicious beginning for the One Laptop Per Child initiative, which aims to put millions of cheap, robust, free-software-chugging computers into the hands of the world’s poorest children.

Sorry to be so snide, but we were watching the live webcast from Tunis yesterday… it’s hard not to laugh at the leaders of the free world bumbling over this day-glo gadget, this glorified Trapper Keeper cum jack-in-the-box (Annan ended up breaking the hand crank), with barely a word devoted to what educational content will actually go inside, or to how teachers plan to construct lessons around these new toys. In the end, it’s going to come down to the teachers. Good teachers, who know computers, may be able to put the laptops to good use. But somehow I’m getting visions of stacks of unused or busted laptops, cast aside like so many neon bricks.

A sunnier future for the 100 dollar laptop? A commercial company obtains the rights and starts selling them in the West for $250 a pop. They’re a huge hit. Everyone just has to have one to satisfy their poor inner child.

no laptop left behind

MIT has re-dubbed its $100 Laptop Project “One Laptop Per Child.” It’s probably a good sign that they’ve gotten children into the picture, but like many a program with sunny-sounding names and lofty goals, it may actually contain something less sweet. The hundred-dollar laptop is about bringing affordable computer technology to the developing world. But the focus so far has been almost entirely on the hardware, the packaging. Presumably what will fit into this fancy packaging is educational software, electronic textbooks and the like. But we aren’t hearing a whole lot about this. Nor are we hearing much about how teachers with little or no experience with computers will be able to make use of this powerful new tool.

The headlines tell of a revolution in the making: “Crank It Up: Design of $100 Laptop for the World’s Children Unveiled” or “Argentina Joins MIT’s Low-Cost Laptop Plan: Ministry of Education is ordering between 500,000 to 1 million.” Conspicuously absent are headlines like “Web-Based Curriculum in Development For Hundred Dollar Laptops” or “Argentine Teachers Go On Tech Tutorial Retreats, Discuss Pros and Cons of Technology in the Classroom.”

Help! Help! We’re sinking!

This emphasis on the package, on the shell, makes me think of the Container Store. Anyone who has ever shopped at the Container Store knows that it is devoted entirely to empty things. Shelves, bins, baskets, boxes, jars, tubs, and crates. Empty vessels to organize and contain all the bric-a-brac, the creeping piles of crap that we accumulate in our lives. Shopping there is a weirdly existential affair. Passing through aisles of hollow objects, your mind filling them with uses, needs, pressing abundances. The store’s slogan “contain yourself” speaks volumes about a culture in the advanced stages of consumption-induced distress. The whole store is a cry for help! Or maybe a sedative. There’s no question that the Container Store sells useful things, providing solutions to a problem we undoubtedly have. But that’s just the point. We had to create the problem first.

I worry that One Laptop Per Child is providing a solution where there isn’t a problem. Open up the Container Store in Malawi and people there would scratch their heads. Who has so much crap that they need an entire superstore devoted to selling containers? Of course, there is no shortage of problems in these parts of the world. One need not bother listing them. But the hundred-dollar laptop won’t seek to solve these problems directly. It’s focused instead on a much grander (and vaguer) challenge: to bridge the “digital divide.” The digital divide — that catch-all bogey, the defeat of which would solve every problem in its wake. But beware of cure-all tonics. Beware of hucksters pulling into the dusty frontier town with a shiny new box promising to end all woe.

A more devastating analogy was recently drawn between MIT’s hundred dollar laptops and pharmaceutical companies peddling baby formula to the developing world, a practice that has made those companies billions while spreading malnutrition and starvation.

Breastfeeding not only provides nutrition, but also provides immunity to the babies. Of course, for a baby whose mother cannot produce milk, formula is better than starvation. But often the mothers stop producing milk only after getting started on formula. The initial amount is given free to the mothers in the poor parts of the world and they are told that formula is much much better than breast milk. So when the free amount is over and the mother is no longer lactating, the formula has to be bought. Since it is expensive, soon the formula is severely diluted until the infant is receiving practically no nutrition and is slowly starving to death.

…Babies are important when it comes to profits for the peddlers of formula. But there are only so many babies in the developed world. For real profit, they have to tap into the babies of the under-developed world. All with the best of intentions, of course: to help the babies of the poor parts of the world because there is a “formula divide.” Why should only the rich “gain” from the wonderful benefits of baby formula?

Which brings us back to laptops:

Hundreds of millions of dollars which could have been more useful in providing primary education would instead end up in the pockets of hardware manufacturers and software giants. Sure a few children will become computer-savvy, but the cost of this will be borne by the millions of children who will suffer from a lack of education.

Ethan Zuckerman, a passionate advocate for bringing technology to the margins, was recently able to corner hundred-dollar laptop project director Nicholas Negroponte for a couple of hours and got some details on what is going on. He talks at great length here about the design of the laptop itself, from the monitor to the hand crank to the rubber gasket rim, and further down he touches briefly on some of the software being developed for it, including Alan Kay’s Squeak environment, which allows children to build their own electronic toys and games.

The open source movement is behind One Laptop Per Child in a big way, and with them comes the belief that if you give the kids tools, they will teach themselves and grope their way to success. It’s a lovely thought, and may prove true in some instances. But nothing can substitute for a good teacher. Or a good text. It’s easy to think dreamy thoughts about technology emptied of content — ready, like those aisles of containers, drawers and crates, to be filled with our hopes and anxieties, to be filled with little brown hands reaching for the stars. But that’s too easy. And more than a little dangerous.

Dropping cheap, well-designed laptops into disadvantaged classrooms around the world may make a lot of money for the manufacturers and earn brownie points for governments. And it’s a great feel-good story for everyone in the thousand-dollar laptop West. But it could make a mess on the ground.