The NY Times reports on new web-based services at university libraries that are incorporating features such as personalized recommendations, browsing histories, and email alerts, the sort of thing developed by online retailers like Amazon and Netflix to recreate some of the experience of browsing a physical store. Remember Ranganathan’s fourth law of library science: “save the time of the reader.” The reader and the customer are perhaps becoming one and the same.

It would be interesting if a social software system were emerging for libraries that allowed students and researchers to work alongside librarians in organizing the stacks. Automated recommendations are just the beginning. I’m talking more about value added by the readers themselves (Amazon does this with reader reviews, Listmania, and So You’d Like To…). Imagine a social card catalogue with a tagging system and other reader-supplied metadata, where readers could leave comments and bread crumb trails between books, each catalogue entry with its own blog and wiki to create a context for the book. Books are not just surrounded by other volumes on the shelves; they are surrounded by people, other points of view, affinities: the kinds of things that up to this point were too vaporous to collect. This goes back to David Weinberger’s comment on metadata and Google Book Search.


google print is no more

Not the program, of course, just the name. From now on it is to be known as Google Book Search. “Print” obviously struck a little too close to home with publishers and authors. On the company blog, they explain the shift in emphasis:

No, we don’t think that this new name will change what some folks think about this program. But we do believe it will help a lot of people understand better what we’re doing. We want to make all the world’s books discoverable and searchable online, and we hope this new name will help keep everyone focused on that important goal.

the book in the network – masses of metadata

In this weekend’s Boston Globe, David Weinberger delivers the metadata angle on Google Print:

…despite the present focus on who owns the digitized content of books, the more critical battle for readers will be over how we manage the information about that content, information that’s known technically as metadata.

…we’re going to need massive collections of metadata about each book. Some of this metadata will come from the publishers. But much of it will come from users who write reviews, add comments and annotations to the digital text, and draw connections between, for example, chapters in two different books.

As the digital revolution continues, and as we generate more and more ways of organizing and linking books (integrating information from publishers, libraries and, most radically, other readers), all this metadata will not only let us find books, it will provide the context within which we read them.

The book in the network is a barnacled spirit, carrying with it the sum of its various accretions. Each book is also its own library by virtue not only of what it links to itself, but of what its readers are linking to, of what its readers are reading. Each book is also a milk crate of earlier drafts. It carries its versions with it. A lot of weight for something physically weightless.

having browsed google print a bit more…

…I realize I was over-hasty in dismissing the recent additions made since book scanning resumed earlier this month. True, many of the fine wines in the cellar are there only for the tasting, but the vintage stuff can be drunk freely, and there are already some wonderful 19th century titles, at this point mostly from Harvard. The surest way to find them is to search by date, or by title and date. Specify a date range in advanced search or simply enter, for example, “date: 1890” and a wealth of fully accessible texts comes up, any of which can be linked to from a syllabus. An astonishing resource for teachers and students.

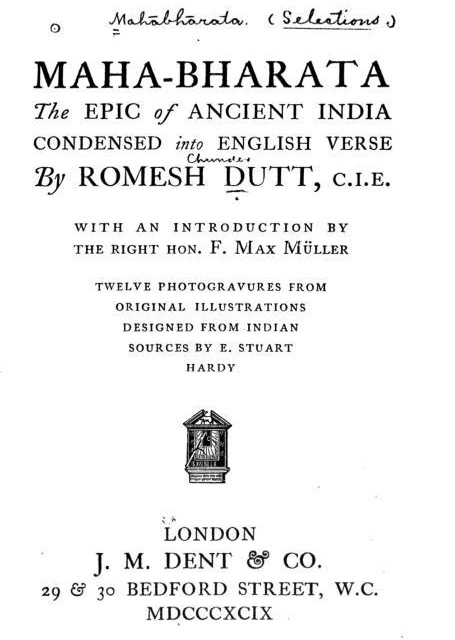

The conclusion: Google Print really is shaping up to be a library, but a library of the world before 1923, the current line of demarcation between copyright and the public domain. It’s a stark reminder of how over-extended copyright is. Here’s an 1899 English printing of The Mahabharata:

A charming detail found on the following page is this old Harvard library stamp that got scanned along with the rest:

google print’s not-so-public domain

Google’s first batch of public domain book scans is now online, representing a smattering of classics and curiosities from the collections of libraries participating in Google Print. Essentially snapshots of books, they’re not particularly comfortable to read, but they are keyword-searchable and, since no copyright applies, fully accessible.

The problem is, there really isn’t all that much there. Google’s gotten a lot of bad press for its supposedly cavalier attitude toward copyright, but spend a few minutes browsing Google Print and you’ll see just how publisher-centric the whole affair is. The idea of a text being in the public domain doesn’t amount to much if you’re only talking about antique editions, and these are the only books Google has made fully accessible. Daisy Miller’s copyright expired long ago, but with the exception of Harvard’s illustrated 1892 copy, all the available scanned editions are owned by modern publishers and are therefore only snippeted. This is not an online library; it’s a marketing program. Google Print will undeniably have its uses, but we shouldn’t confuse it with a library.

(An interesting offering from the stacks of the New York Public Library is this mid-19th century biographic registry of the wealthy burghers of New York: “Capitalists whose wealth is estimated at one hundred thousand dollars and upwards…”)

a better wikipedia will require a better conversation

There’s an interesting discussion going on right now under Kim’s Wikibooks post about how an open source model might be made to work for the creation of authoritative knowledge: textbooks, encyclopedias, etc. A couple of weeks ago there was some discussion here about an article that, among other things, took some rather cheap shots at Wikipedia, quoting (very selectively) a couple of shoddy passages. Clearly, the wide-open model of Wikipedia presents some problems, but given the advantages it offers (at least in potential), namely that it is never out of date, interconnected, universally accessible, and open to voices from the margins, critics are wrong to dismiss it out of hand. Holding up specific passages for critique is like shooting fish in a barrel. Even Wikipedia’s directors admit that most of the content right now is of middling quality, some of it downright awful. It doesn’t follow that the whole project is bunk. That’s a bit like expelling an entire kindergarten for poor spelling. Wikipedia is at an early stage of development. Things take time.

Instead we should be talking about possible directions in which it might go, and how it might be improved. Dan, for one, is concerned about the market (excerpted from comments):

What I worry about…is that we’re tearing down the old hierarchies and leaving a vacuum in their wake…. The problem with this sort of vacuum, I think, is that capitalism tends to swoop in, simply because there are more resources on that side….

…I’m not entirely sure if the world of knowledge functions analogously, but Wikipedia does presume the same sort of tabula rasa. The world’s not flat: it tilts precariously if you’ve got the cash. There’s something in the back of my mind that suspects that Wikipedia’s not protected against this – it’s kind of in the state right now that the Web as a whole was in 1995 before the corporate world had discovered it. If Wikipedia follows the model of the web, capitalism will be sweeping in shortly.

Unless… the experts swoop in first. Wikipedia is part of a foundation, so it’s not exactly just bobbing in the open seas waiting to be swept away. If enough academics and librarians started knocking on the door saying, hey, we’d like to participate, then perhaps Wikipedia (and Wikibooks) would kick up to the next level. Inevitably, these newcomers would insist on setting up some new vetting mechanisms and a few useful hierarchies that would help ensure quality. What would these be? That’s exactly the kind of thing we should be discussing.

The Guardian ran a nice piece earlier this week in which they asked several “experts” to evaluate a Wikipedia article on their particular subject. They all more or less agreed that, while what’s up there is not insubstantial, there’s still a long way to go. The biggest challenge then, it seems to me, is to get these sorts of folks to give Wikipedia more than just a passing glance. To actually get them involved.

For this to really work, however, another group needs to get involved: the users. That might sound strange, since millions of people write, edit and use Wikipedia, but I would venture that most are not willing to rely on it as a bedrock source. No doubt, it’s incredibly useful for getting a basic sense of a subject. Bloggers (including this one) link to it all the time; it’s like the conversational equivalent of a reference work. And for certain subjects, like computer technology and pop culture, it’s actually pretty solid. But that hits on the problem right there. Wikipedia, even at its best, has not gained the confidence of the general reader. And though the Wikimaniacs would be loath to admit it, this probably has something to do with its core philosophy.

Karen G. Schneider, a librarian who has done a lot of thinking about these questions, puts it nicely:

Wikipedia has a tagline on its main page: “the free-content encyclopedia that anyone can edit.” That’s an intriguing revelation. What are the selling points of Wikipedia? It’s free (free is good, whether you mean no-cost or freely-accessible). That’s an idea librarians can connect with; in this country alone we’ve spent over a century connecting people with ideas.

However, the rest of the tagline demonstrates a problem with Wikipedia. Marketing this tool as a resource “anyone can edit” is a pitch oriented at its creators and maintainers, not the broader world of users. It’s the opposite of Ranganathan’s First Law, “books are for use.” Ranganathan wasn’t writing in the abstract; he was referring to a tendency in some people to fetishize the information source itself and lose sight that ultimately, information does not exist to please and amuse its creators or curators; as a common good, information can only be assessed in context of the needs of its users.

I think we are all in need of a good Wikipedia, since in the long run it might be all we’ve got. And I’m in no way opposed to its spirit of openness and transparency (I think the preservation of version histories is a fascinating element, and one which should be explored further; perhaps the encyclopedia of the future can encompass multiple versions of “the truth”). But that exhilarating throwing open of the doors should be tempered with caution and with an embrace of the parts of the old system that work. Not everything need be thrown away in our rush to explore the new. Some people know more than other people. Some editors have better judgement than others. There is such a thing as a good kind of gatekeeping.

If these two impulses could be brought into constructive dialogue then we might get somewhere. This is exactly the kind of conversation the Wikimedia Foundation should be trying to foster.

microsoft joins open content alliance

Microsoft’s forthcoming “MSN Book Search” is the latest entity to join the Open Content Alliance, the non-controversial rival to Google Print. ZDNet says: “Microsoft has committed to paying for the digitization of 150,000 books in the first year, which will be about $5 million, assuming costs of about 10 cents a page and 300 pages, on average, per book…”
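
A quick back-of-the-envelope check of the ZDNet estimate, using only the per-page cost and average book length quoted above:

$$150{,}000 \text{ books} \times 300 \,\tfrac{\text{pages}}{\text{book}} \times \$0.10 \,\tfrac{\text{}}{\text{page}} = \$4{,}500{,}000$$

So the quoted assumptions put the first year at roughly 4.5 million dollars, which ZDNet rounds to “about $5 million.”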

Apparently having learned from Google’s mistakes, OCA operates under a strict “opt-in” policy for publishers vis-a-vis copyrighted works (whereas with Google, publishers have until November 1 to opt out). Judging by the growing roster of participants, including Yahoo, the National Archives of Britain, the University of California, Columbia University, and Rice University, not to mention the Internet Archive, it would seem that less hubris equals more results, or at least lower legal fees. Supposedly there is some communication between Google and OCA about potential cooperation.

There is also a story in the NY Times.

to some writers, google print sounds like a sweet deal

Wired has a piece today about authors who are in favor of Google’s plans to digitize millions of books and make them searchable online. Most seem to agree that obscurity is a writer’s greatest enemy, and that the exposure afforded by Google’s program far outweighs any intellectual property concerns. Sometimes to get more you have to give a little.

The article also mentions the institute.

debating google print

The Washington Post has run a pair of op-eds, one from each side of the Google Print dispute. Neither says anything particularly new. Moreover, they reinforce the perception that there can be only two positions on the subject, an endemic problem in newspaper opinion pages with their addiction to binaries, where two cardboard boxers are each allotted space to throw a persuasive punch. So you’re either for Google or against it? That’s awfully close to saying you’re either for technology (for progress) or against it. Unfortunately, like technology’s impact, the Google book-scanning project is trickier to figure out than that, and a more nuanced conversation is probably in order.

The first piece, “Riches We Must Share…”, is submitted in support of Google by University of Michigan President Mary Sue Coleman (Michigan is a partner in the Google library project). She argues that opening up the elitist vaults of the world’s great (English) research libraries will constitute a democratic revolution: “We believe the result can be a widening of human conversation comparable to the emergence of mass literacy itself.” She goes on to deliver some boilerplate about the “Net Generation,” too impatient to look for books unless they’re online, etc. (great to see a major university president being led by the students instead of leading herself).

Coleman then devotes a couple of paragraphs to the copyright question, failing to tackle any of its controversial elements:

Universities are no strangers to the responsible management of complex copyright, permission and security issues; we deal with them every day in our classrooms, libraries, laboratories and performance halls. We will continue to work within the current criteria for fair use as we move ahead with digitization.

The problem is, Google is stretching the current criteria of fair use, possibly to the breaking point. Coleman does not acknowledge or address this. She does, however, remind the plaintiffs that copyright is not only about the owners:

The protections of copyright are designed to balance the rights of the creator with the rights of the public. At its core is the most important principle of all: to facilitate the sharing of knowledge, not to stifle such exchange.

All in all a rather bland statement in support of open access. It fails to weigh in on the fair use question — something about which the academy should have a few things to say — and does not indicate any larger concern about what Google might do with its books database down the road.

The opposing view, “…But Not at Writers’ Expense”, comes from Nick Taylor, writer and president of the Authors Guild (which sued Google last month). Taylor asserts that mega-rich Google is trampling on the dignity of working writers. But a couple of paragraphs in, he gets a little mixed up about contemporary publishing:

Except for a few big-name authors, publishers roll the dice and hope that a book’s sales will return their investment. Because of this, readers have a wealth of wonderful books to choose from.

A dubious assessment, since publishing conglomerates are not exactly enthusiastic dice rollers. I would counter that risk-averse corporate publishing has steadily shrunk the number of available titles, counting on a handful of blockbusters to drive the market. Taylor goes on to defend not just the publishing status quo, but the legal one:

Now that the Authors Guild has objected, in the form of a lawsuit, to Google’s appropriation of our books, we’re getting heat for standing in the way of progress, again for thoughtlessly wanting to be paid. It’s been tradition in this country to believe in property rights. When did we decide that socialism was the way to run the Internet?

First of all, it’s funny to think of the huge corporations that dominate the web as socialist. Second, this talk about being paid for the appropriation of books into a search database reveals the two totally different worldviews at odds in this struggle. The authors say that any use of their books requires payment. Google sees inclusion in the database as a kind of payment in itself. No one with a web page expects Google to pay them for indexing their site. They are grateful that Google does! Otherwise, they would be totally invisible. This is the unspoken compact that underpins web search. Google assumed the same would apply to books. Taylor says not so fast.

Here’s Taylor on fair use:

Google contends that the portions of books it will make available to searchers amount to “fair use,” the provision under copyright that allows limited use of protected works without seeking permission. That makes a private company, which is profiting from the access it provides, the arbiter of a legal concept it has no right to interpret. And they’re scanning the entire books, with who knows what result in the future.

Actually, Google is not doing all the interpreting. There is a legal precedent for Google’s reading of fair use established in the 2003 9th Circuit Court decision Kelly v. Arriba Soft. In the case, Kelly, a photographer, sued Arriba Soft, an online image search system, for indexing several of his photographs in their database. Kelly believed that his intellectual property had been stolen, but the court ruled that Arriba’s indexing of thumbnail-sized copies of images (which always linked to their source sites) was fair use: “Arriba’s use of the images serves a different function than Kelly’s use – improving access to information on the internet versus artistic expression.” Still, Taylor’s “with who knows what result in the future” concern is valid.

So on the one hand we have many writers and most publishers trying to defend their architecture of revenue (or, as Taylor would have it, their dignity). But I can’t imagine how Google Print would really be damaging that architecture, at least not in the foreseeable future. Rather it leverages it by placing it within the frame of another architecture: web search. The irony for the authors is that the current architecture doesn’t seem to be serving them terribly well. With print-on-demand gaining in quality and legitimacy, online book search could totally re-define what is an acceptable risk to publishers, and maybe more non-blockbuster authors would get published.

On the other hand we have the universities and libraries participating in Google’s program, delivering the good news of accessibility. But they are not sufficiently questioning what Google might do with its database down the road, or the implications of a private technology company becoming the principal gatekeeper of the world’s corpus.

If only this debate could be framed in a subtler way, rather than the for-Google-or-against-it paradigm we have now. I’m cautiously optimistic about the effect of having books searchable on the web. And I tend to believe it will be beneficial to authors and publishers. But I have other, deep reservations about the direction in which Google is heading, and feel that a number of things could go wrong. We think the censorship of the marketplace is bad now, in the age of publishing conglomerates. What if one company has total control of everything? And is keeping track of every book, every page, that you read. And is reading you while you read, throwing ads into your peripheral vision. I’m curious to hear from readers what they feel could be the hazards of Google Print.

google is sued… again

This time by publishers: Penguin Group USA, McGraw-Hill, Pearson Education, Simon & Schuster and John Wiley & Sons. The gripe is the same as that of the Authors Guild, which filed suit last month alleging “massive copyright infringement.” Publishers fear that Google’s scanning of books to construct what amounts to a giant card catalogue on the web sets a dangerous precedent. Google claims “fair use” (see rationale), again pointing out that for copyrighted works only tiny “snippets” of text are displayed around keywords (though perhaps this is not yet fully in effect; I was searching around in this book and was able to look at quite a lot).

Google calls the publishers’ suit “near-sighted.” And it probably is. The benefit to readers and researchers will be tremendous, as will (Google is eager to point out) the exposure for authors and publishers. But Google Print is undoubtedly an earth-shaking program. Look at the reaction in Europe, where alarm bells rung by France warned of cultural imperialism and an English-drenched web. Heads of state and culture convened, and initial plans for a European digital library have been drawn up.

What the transatlantic flap makes clear is that Google’s book scanning touches a deep nerve, and the argument over intellectual property, significant though it is, distracts from a more profound human anxiety: an anxiety about the form of culture and the shape of thoughts. If we try to grope back through the millennia, we can find an analogy in the invention of writing.

The shift from oral to written language froze speech into stable strings that could be transmitted and stored over distance and time. This change not only affected the modes of communication, it dramatically refigured the cognitive makeup of human beings (as McLuhan, Ong and others have described). We are currently going through another such shift. The digital takes the freezing medium of text and throws it back into fluidity. Like the melting of polar ice caps, it unsettles equilibriums, changes weather patterns. It is a lot to adjust to, and we wonder if our great-great-grandchildren will literally think differently from us.

But in spite of this disorienting new fluidity, we still have print, we still have the book. And actually, Google Print in many ways affirms this since its search returns will point to print retailers and brick-and-mortar libraries. Yet the fact remains that the canon is being scanned, with implications we can’t fully perceive, and future uses we can’t fully predict, and so it is understandable that many are unnerved. The ice is really beginning to melt.

In Phaedrus, Plato expresses a similar anxiety about the invention of writing. He tells the tale of Theuth, an Egyptian deity who goes around spreading the new technology, and one day encounters a skeptic in King Thamus:

…you who are the father of letters, from a paternal love of your own children have been led to attribute to them a power opposite to that which they in fact possess. For this discovery of yours will create forgetfulness in the minds of those who learn to use it; they will not exercise their memories, but, trusting in external, foreign marks, they will not bring things to remembrance from within themselves. You have discovered a remedy not for memory, but for reminding. You offer your students the appearance of wisdom, not true wisdom. They will be hearers of many things and will have learned nothing; they will appear to be omniscient and will generally know nothing; they will be tiresome company, having the show of wisdom without the reality.

As I type, I’m exhibiting wisdom without the reality. I’ve read Plato, but nowhere near exhaustively. Yet I can slash and weave texts on the web in seconds, throw together a blog entry and send it screeching into the commons. And with Google Print I can get the quote I need and let the rest of the book rot behind the security fence. This fluidity is dangerous because it makes connections so easy. Do we know what we are connecting?