This is welcome. Several leading American research libraries including the Boston Public and the Smithsonian have said no thanks to Google and Microsoft book digitization deals, opting instead for the more costly but less restrictive Open Content Alliance/Internet Archive program. The NY Times reports, and explains how private foundations like Sloan are funding some of the OCA partnerships.

Category Archives: google

the googlization of everything: a public writing begins

We’re very excited to announce that Siva’s new Google book site, produced and hosted by the Institute, is now live! In addition to being the seed of what will likely be a very important book, I’ll bet that over time this will become one of the best Google-focused blogs on the Web.

The Googlization of Everything: How One Company is Disrupting Culture, Commerce, and Community… and Why We Should Worry.

The book:

…a critical interpretation of the actions and intentions behind the cultural behemoth that is Google, Inc. The book will answer three key questions: What does the world look like through the lens of Google?; How is Google’s ubiquity affecting the production and dissemination of knowledge?; and how has the corporation altered the rules and practices that govern other companies, institutions, and states?

I have never tried to write a book this way. Few have. Writing has been a lonely, selfish pursuit for my so far. I tend to wall myself off from the world (and my loved ones) for days at a time in fits and spurts when I get into a writing groove. I don’t shave. I order pizza. I grumble. I ignore emails from my mother.

I tend to comb through and revise every sentence five or six times (although I am not sure that actually shows up in the quality of my prose). Only when I am sure that I have not embarrassed myself (or when the editor calls to threaten me with a cancelled contract – whichever comes first) do I show anyone what I have written. Now, this is not an uncommon process. Closed composition is the default among writers. We go to great lengths to develop trusted networks of readers and other writers with whom we can workshop – or as I prefer to call it because it’s what the jazz musicians do, woodshed our work.

Well, I am going to do my best to woodshed in public. As I compose bits and pieces of work, I will post them here. They might be very brief bits. They might never make it into the manuscript. But they will be up here for you to rip up or smooth over.

That’s the thing. For a number of years now I have made my bones in the intellectual world trumpeting the virtues of openness and the values of connectivity. I was an early proponent of applying “open source” models to scholarship, journalism, and lots of other things.

And, more to the point: One of my key concerns with Google is that it is a black box. Something that means so much to us reveals so little of itself.

So I would be a hypocrite if I wrote this book any other way. This book will not be a black box.

siva podcast on the googlization of libraries

We’re just a couple of days away from launching what promises to be one of our most important projects to date, The Googlization of Everything, a weblog where Siva Vaidhyanathan (who’s a fellow here) will publicly develop his new book, a major critical examination of the Google behemoth. As an appetizer, check out this First Monday podcast conversation with Siva on the subject of Google’s book activities (mp3, transcript).

An excerpt:

Q: So what’s the alternative? Who are the major players, what are the major policy points?

SIVA: I think this is an important enough project where we need to have a nationwide effort. We have to have a publicly funded effort. Guided, perhaps led by the Library of Congress, certainly a consortium of public university libraries could do just as well to do it.

We’re willing to do these sorts of big projects in the sciences. Look at how individual states are rallying billions of dollars to fund stem cell research right now. Look at the ways the United States government, the French government, the Japanese government rallied billions of dollars for the Human Genome Project out of concern that all that essential information was going to be privatized and served in an inefficient and unwieldy way.

So those are the models that I would like to see us pursue. What saddens me about Google’s initiative, is that it’s let so many people off the hook. Essentially we’ve seen so many people say, “Great now we don’t have to do the digital library projects we were planning to do.” And many of these libraries involved in the Google project were in the process of producing their own digital libraries. We don’t have to do that any more because Google will do it for us. We don’t have to worry about things like quality because Google will take care of the quantity.

And so what I would like to see? I would like to see all the major public universities, public research universities, in the country gather together and raise the money or persuade Congress to deliver the money to do this sort of thing because it’s in the public interest, not because it’s in Google’s interest. If it really is this important we should be able to mount a public campaign, a set of arguments and convince the people with the purse strings that this should be done right.

e-book developments at amazon, google (and rambly thoughts thereon)

The NY Times reported yesterday that the Kindle, Amazon’s much speculated-about e-book reading device, is due out next month. No one’s seen it yet and Amazon has been tight-lipped about specs, but it presumably has an e-ink screen, a small keyboard and scroll wheel, and most significantly, wireless connectivity. This of course means that Amazon will have a direct pipeline between its store and its device, giving readers access an electronic library (and the Web) while on the go. If they’d just come down a bit on the price (the Times says it’ll run between four and five hundred bucks), I can actually see this gaining more traction than past e-book devices, though I’m still not convinced by the idea of a dedicated book reader, especially when smart phones are edging ever closer toward being a credible reading environment. A big part of the problem with e-readers to date has been the missing internet connection and the lack of a good store. The wireless capability of the Kindle, coupled with a greater range of digital titles (not to mention news and blog feeds and other Web content) and the sophisticated browsing mechanisms of the Amazon library could add up to the first more-than-abortive entry into the e-book business. But it still strikes me as transitional – ?a red herring in the larger plot.

A big minus is that the Kindle uses a proprietary file format (based on Mobipocket), meaning that readers get locked into the Amazon system, much as iPod users got shackled to iTunes (before they started moving away from DRM). Of course this means that folks who bought the cheaper (and from what I can tell, inferior) Sony Reader won’t be able to read Amazon e-books.

But blech… enough about ebook readers. The Times also reports (though does little to differentiate between the two rather dissimilar bits of news) on Google’s plans to begin selling full online access to certain titles in Book Search. Works scanned from library collections, still the bone of contention in two major lawsuits, won’t be included here. Only titles formally sanctioned through publisher deals. The implications here are rather different from the Amazon news since Google has no disclosed plans for developing its own reading hardware. The online access model seems to be geared more as a reference and research tool -? a powerful supplement to print reading.

But project forward a few years… this could develop into a huge money-maker for Google: paid access (licensed through publishers) not only on a per-title basis, but to the whole collection – ?all the world’s books. Royalties could be distributed from subscription revenues in proportion to access. Each time a book is opened, a penny could drop in the cup of that publisher or author. By then a good reading device will almost certainly exist (more likely a next generation iPhone than a Kindle) and people may actually be reading books through this system, directly on the network. Google and Amazon will then in effect be the digital infrastructure for the publishing industry, perhaps even taking on what remains of the print market through on-demand services purveyed through their digital stores. What will publishers then be? Disembodied imprints, free-floating editorial organs, publicity directors…?

Recent attempts to develop their identities online through their own websites seem hopelessly misguided. A publisher’s website is like their office building. Unless you have some direct stake in the industry, there’s little reason to bother know where it is. Readers are interested in books not publishers. They go to a bookseller, on foot or online, and they certainly don’t browse by publisher. Who really pays attention to who publishes the books they read anyway, especially in this corporatized era where the difference between imprints is increasingly cosmetic, like the range of brands, from dish soap to potato chips, under Proctor & Gamble’s aegis? The digital storefront model needs serious rethinking.

The future of distribution channels (Googlezon) is ultimately less interesting than this last question of identity. How will today’s publishers establish and maintain their authority as filterers and curators of the electronic word? Will they learn how to develop and nurture literate communities on the social Web? Will they be able to carry their distinguished imprints into a new terrain that operates under entirely different rules? So far, the legacy publishers have proved unable to grasp the way these things work in the new network culture and in the long run this could mean their downfall as nascent online communities (blog networks, webzines, political groups, activist networks, research portals, social media sites, list-servers, libraries, art collectives) emerge as the new imprints: publishing, filtering and linking in various forms and time signatures (books being only one) to highly activated, focused readerships.

The prospect of atomization here (a million publishing tribes and sub-tribes) is no doubt troubling, but the thought of renewed diversity in publishing after decades of shrinking horizons through corporate consolidation is just as, if not more, exciting. But the question of a mass audience does linger, and perhaps this is how certain of today’s publishers will survive, as the purveyors of mass market fare. But with digital distribution and print on demand, the economies of scale rationale for big publishers’ existence takes a big hit, and with self-publishing services like Amazon CreateSpace and Lulu.com, and the emergence of more accessible authoring tools like Sophie (still a ways away, but coming along), traditional publishers’ services (designing, packaging, distributing) are suddenly less special. What will really be important in a chaotic jumble of niche publishers are the critics, filterers and the context-generating communities that reliably draw attention to the things of value and link them meaningfully to the rest of the network. These can be big companies or light-weight garage operations that work on the back of third-party infrastructure like Google, Amazon, YouTube or whatever else. These will be the new publishers, or perhaps its more accurate to say, since publishing is now so trivial an act, the new editors.

Of course social filtering and tastemaking is what’s been happening on the Web for years, but over time it could actually supplant the publishing establishment as we currently know it, and not just the distribution channels, but the real heart of things: the imprimaturs, the filtering, the building of community. And I would guess that even as the digital business models sort themselves out (and it’s worth keeping an eye on interesting experiments like Content Syndicate, covered here yesterday, and on subscription and ad-based models), that there will be a great deal of free content flying around, publishers having finally come to realize (or having gone extinct with their old conceits) that controlling content is a lost cause and out of synch with the way info naturally circulates on the net. Increasingly it will be the filtering, curating, archiving, linking, commenting and community-building -? in other words, the network around the content -? that will be the thing of value. Expect Amazon and Google (Google, btw, having recently rolled out a bunch of impressive new social tools for Book Search, about which more soon) to move into this area in a big way.

jp google

In these first few generations of personal computing, we’ve operated with the “money in the mattress” model of data storage. Information assets are managed personally and locally – ?on your machine, disks or external drives. If the computer crashes, the drive breaks, it’s as though the mattress has burned. You’re pretty much up the creek. Today, though, we’re transitioning to a more abstracted system of remote data banking, and Google and its competitors are the new banks. Undoubtedly, there are great advantages to this (your stuff is more secure in multiply backed-up, networked data centers; you don’t need to be on your machine to access mail and personal media) but the cumulative impact on privacy ought to be considered.

The Economist takes up some of these questions today, examining Google’s emerging cloud of data services as the banking system of the information age:

Google is often compared to Microsoft…but its evolution is actually closer to that of the banking industry. Just as financial institutions grew to become repositories of people’s money, and thus guardians of private information about their finances, Google is now turning into a custodian of a far wider and more intimate range of information about individuals. Yes, this applies also to rivals such as Yahoo! and Microsoft. But Google, through the sheer speed with which it accumulates the treasure of information, will be the one to test the limits of what society can tolerate.

Google is swiftly becoming a new kind of monopoly: pervasively, subtly, intimately attached to your personal data flows. You – ?your data profile, your memory, your clickstreams – ?are the asset now. The banking analogy is a useful one for pondering the coming storm over privacy.

Also: expect excellent coverage and analysis of these and other Google-related issues very soon on Siva Vaidhyanathan’s new book blog, The Googlization of Everything, which is set to launch here in early September.

“the bookish character of books”: how google’s romanticism falls short

Check out, if you haven’t already, Paul Duguid’s witty and incisive exposé of the pitfalls of searching for Tristram Shandy in Google Book Search, an exercise which puts many of the inadequacies of the world’s leading digitization program into relief. By Duguid’s own admission, Lawrence Sterne’s legendary experimental novel is an idiosyncratic choice, but its many typographic and structural oddities make it a particularly useful lens through which to examine the challenges of migrating books successfully to the digital domain. This follows a similar examination Duguid carried out last year with the same text in Project Gutenberg, an experience which he said revealed the limitations of peer production in generating high quality digital editions (also see Dan’s own take on this in an older if:book post). This study focuses on the problems of inheritance as a mode of quality assurance, in this case the bequeathing of large authoritative collections by elite institutions to the Google digitization enterprise. Does simply digitizing these – ?books, imprimaturs and all – ?automatically result in an authoritative bibliographic resource?

Duguid’s suggests not. The process of migrating analog works to the digital environment in a way that respects the orginals but fully integrates them into the networked world is trickier than simply scanning and dumping into a database. The Shandy study shows in detail how Google’s ambition to organizing the world’s books and making them universally accessible and useful (to slightly adapt Google’s mission statement) is being carried out in a hasty, slipshod manner, leading to a serious deficit in quality in what could eventually become, for better or worse, the world’s library. Duguid is hardly the first to point this out, but the intense focus of his case study is valuable and serves as a useful counterpoint to the technoromantic visions of Google boosters such as Kevin Kelly, who predict a new electronic book culture liberated by search engines in which readers are free to find, remix and recombine texts in various ways. While this networked bibliotopia sounds attractive, it’s conceived primarily from the standpoint of technology and not well grounded in the particulars of books. What works as snappy Web2.0 buzz doesn’t necessarily hold up in practice.

As is so often the case, the devil is in the details, and it is precisely the details that Google seems to have overlooked, or rather sprinted past. Sloppy scanning and the blithe discarding of organizational and metadata schemes meticulously devised through centuries of librarianship, might indeed make the books “universally accessible” (or close to that) but the “and useful” part of the equation could go unrealized. As we build the future, it’s worth pondering what parts of the past we want to hold on to. It’s going to have to be a slower and more painstaking a process than Google (and, ironically, the partner libraries who have rushed headlong into these deals) might be prepared to undertake. Duguid:

The Google Books Project is no doubt an important, in many ways invaluable, project. It is also, on the brief evidence given here, a highly problematic one. Relying on the power of its search tools, Google has ignored elemental metadata, such as volume numbers. The quality of its scanning (and so we may presume its searching) is at times completely inadequate. The editions offered (by search or by sale) are, at best, regrettable. Curiously, this suggests to me that it may be Google’s technicians, and not librarians, who are the great romanticisers of the book. Google Books takes books as a storehouse of wisdom to be opened up with new tools. They fail to see what librarians know: books can be obtuse, obdurate, even obnoxious things. As a group, they don’t submit equally to a standard shelf, a standard scanner, or a standard ontology. Nor are their constraints overcome by scraping the text and developing search algorithms. Such strategies can undoubtedly be helpful, but in trying to do away with fairly simple constraints (like volumes), these strategies underestimate how a book’s rigidities are often simultaneously resources deeply implicated in the ways in which authors and publishers sought to create the content, meaning, and significance that Google now seeks to liberate. Even with some of the best search and scanning technology in the world behind you, it is unwise to ignore the bookish character of books. More generally, transferring any complex communicative artifacts between generations of technology is always likely to be more problematic than automatic.

Also take a look at Peter Brantley’s thoughts on Duguid:

Ultimately, whether or not Google Book Search is a useful tool will hinge in no small part on the ability of its engineers to provoke among themselves a more thorough, and less alchemic, appreciation for the materials they are attempting to transmute from paper to gold.

google news adds an interesting (and risky) editorial layer

Starting this week, Google News will publish comments alongside linked stories from “a special subset of readers: those people or organizations who were actual participants in the story in question.”

John Murrell and Steve Rubel have good analyses of why moving beyond pure aggregation is a risky move for Google, whose relationship with news content owners is already tense to say the least.

cornell joins google book search

…offering up to 500,000 items for digitization. From the Cornell library site:

Cornell is the 27th institution to join the Google Book Search Library Project, which digitizes books from major libraries and makes it possible for Internet users to search their collections online. Over the next six years, Cornell will provide Google with public domain and copyrighted holdings from its collections. If a work has no copyright restrictions, the full text will be available for online viewing. For books protected by copyright, users will just get the basic background (such as the book’s title and the author’s name), at most a few lines of text related to their search and information about where they can buy or borrow a book. Cornell University Library will work with Google to choose materials that complement the contributions of the project’s other partners. In addition to making the materials available through its online search service, Google will also provide Cornell with a digital copy of all the materials scanned, which will eventually be incorporated into the university’s own digital library.

the open library

A little while back I was musing on the possibility of a People’s Card Catalog, a public access clearinghouse of information on all the world’s books to rival Google’s gated preserve. Well thanks to the Internet Archive and its offshoot the Open Content Alliance, it looks like we might now have it – ?or at least the initial building blocks. On Monday they launched a demo version of the Open Library, a grand project that aims to build a universally accessible and publicly editable directory of all books: one wiki page per book, integrating publisher and library catalogs, metadata, reader reviews, links to retailers and relevant Web content, and a menu of editions in multiple formats, both digital and print.

A little while back I was musing on the possibility of a People’s Card Catalog, a public access clearinghouse of information on all the world’s books to rival Google’s gated preserve. Well thanks to the Internet Archive and its offshoot the Open Content Alliance, it looks like we might now have it – ?or at least the initial building blocks. On Monday they launched a demo version of the Open Library, a grand project that aims to build a universally accessible and publicly editable directory of all books: one wiki page per book, integrating publisher and library catalogs, metadata, reader reviews, links to retailers and relevant Web content, and a menu of editions in multiple formats, both digital and print.

Imagine a library that collected all the world’s information about all the world’s books and made it available for everyone to view and update. We’re building that library.

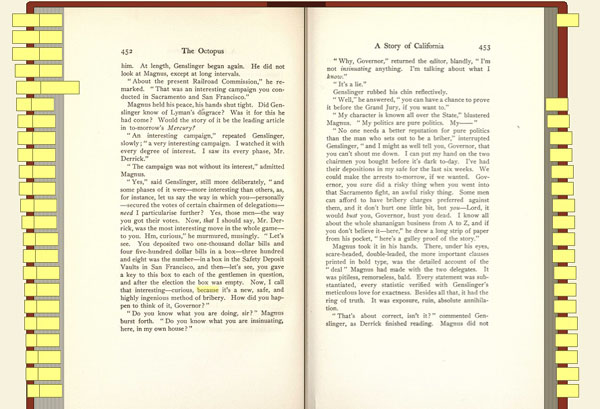

The official opening of Open Library isn’t scheduled till October, but they’ve put out the demo now to prove this is more than vaporware and to solicit feedback and rally support. If all goes well, it’s conceivable that this could become the main destination on the Web for people looking for information in and about books: a Wikipedia for libraries. On presentation of public domain texts, they already have Google beat, even with recent upgrades to the GBS system including a plain text viewing option. The Open Library provides TXT, PDF, DjVu (a high-res visual document browser), and its own custom-built Book Viewer tool, a digital page-flip interface that presents scanned public domain books in facing pages that the reader can leaf through, search and (eventually) magnify.

Page turning interfaces have been something of a fad recently, appearing first in the British Library’s Turning the Pages manuscript preservation program (specifically cited as inspiration for the OL Book Viewer) and later proliferating across all manner of digital magazines, comics and brochures (often through companies that you can pay to convert a PDF into a sexy virtual object complete with drag-able page corners that writhe when tickled with a mouse, and a paper-like rustling sound every time a page is turned).

This sort of reenactment of paper functionality is perhaps too literal, opting for imitation rather than innovation, but it does offer some advantages. Having a fixed frame for reading is a relief in the constantly scrolling space of the Web browser, and there are some decent navigation tools that gesture toward the ways we browse paper. To either side of the open area of a book are thin vertical lines denoting the edges of the surrounding pages. Dragging the mouse over the edges brings up scrolling page numbers in a small pop-up. Clicking on any of these takes you quickly and directly to that part of the book. Searching is also neat. Type a query and the book is suddenly interleaved with yellow tabs, with keywords highlighted on the page, like so:

But nice as this looks, functionality is sacrificed for the sake of fetishism. Sticky tabs are certainly a cool feature, but not when they’re at the expense of a straightforward list of search returns showing keywords in their sentence context. These sorts of references to the feel and functionality of the paper book are no doubt comforting to readers stepping tentatively into the digital library, but there’s something that feels disjointed about reading this way: that this is a representation of a book but not a book itself. It is a book avatar. I’ve never understood the appeal of those Second Life libraries where you must guide your virtual self to a virtual shelf, take hold of the virtual book, and then open it up on a virtual table. This strikes me as a failure of imagination, not to mention tedious. Each action is in a sense done twice: you operate a browser within which you operate a book; you move the hand that moves the hand that moves the page. Is this perhaps one too many layers of mediation to actually be able to process the book’s contents? Don’t get me wrong, the Book Viewer and everything the Open Library is doing is a laudable start (cause for celebration in fact), but in the long run we need interfaces that deal with texts as native digital objects while respecting the originals.

What may be more interesting than any of the technology previews is a longish development document outlining ambitious plans for building the Open Library user interface. This covers everything from metadata standards and wiki templates to tagging and OCR proofreading to search and browsing strategies, plus a well thought-out list of user scenarios. Clearly, they’re thinking very hard about every conceivable element of this project, including the sorts of things we frequently focus on here such as the networked aspects of texts. Acolytes of Ted Nelson will be excited to learn that a transclusion feature is in the works: a tool for embedding passages from texts into other texts that automatically track back to the source (hypertext copy-and-pasting). They’re also thinking about collaborative filtering tools like shared annotations, bookmarking and user-defined collections. All very very good, but it will take time.

Building an open source library catalog is a mammoth undertaking and will rely on millions of hours of volunteer labor, and like Wikipedia it has its fair share of built-in contradictions. Jessamyn West of librarian.net put it succinctly:

It’s a weird juxtaposition, the idea of authority and the idea of a collaborative project that anyone can work on and modify.

But the only realistic alternative may well be the library that Google is building, a proprietary database full of low-quality digital copies, a semi-accessible public domain prohibitively difficult to use or repurpose outside the Google reading room, a balkanized landscape of partner libraries and institutions left in its wake, each clutching their small slice of the digitized pie while the whole belongs only to Google, all of it geared ultimately not to readers, researchers and citizens but to consumers. Construed more broadly to include not just books but web pages, videos, images, maps etc., the Google library is a place built by us but not owned by us. We create and upload much of the content, we hand-make the links and run the search queries that program the Google brain. But all of this is captured and funneled into Google dollars and AdSense. If passive labor can build something so powerful, what might active, voluntary labor be able to achieve? Open Library aims to find out.

welcome siva vaidhyanathan, our first fellow

We are proud to announce that the brilliant media scholar and critic Siva Vaidhyanathan will be establishing a virtual residency here as the Institute’s first fellow. Siva is in the process of moving from NYU to the University of Virginia, where he’ll be teaching media studies and law. While we’re sad to be losing him in New York, we’re thrilled that this new relationship will bring our work into closer, more dynamic proximity. Precisely what “fellowship” entails will develop over time but for now it means that the Institute is the new digital home of SIVACRACY.NET, Siva’s popular weblog. It also means that next month we will be a launching a new website devoted to Siva’s latest book project, The Googlization of Everything, an examination of Google’s disruptive effects on culture, commerce and community.

We are proud to announce that the brilliant media scholar and critic Siva Vaidhyanathan will be establishing a virtual residency here as the Institute’s first fellow. Siva is in the process of moving from NYU to the University of Virginia, where he’ll be teaching media studies and law. While we’re sad to be losing him in New York, we’re thrilled that this new relationship will bring our work into closer, more dynamic proximity. Precisely what “fellowship” entails will develop over time but for now it means that the Institute is the new digital home of SIVACRACY.NET, Siva’s popular weblog. It also means that next month we will be a launching a new website devoted to Siva’s latest book project, The Googlization of Everything, an examination of Google’s disruptive effects on culture, commerce and community.

Siva is one of just a handful of writers to have leveled a consistent and coherent critique of Google’s expansionist policies, arguing not from the usual kneejerk copyright conservatism that has dominated the debate but from a broader cultural and historical perspective: what does it mean for one company to control so much of the world’s knowledge? Siva recently gave a keynote talk at the New Network Theory conference in Amsterdam where he explored some of these ideas, which you can read about here. Clearly Siva’s views on these issues are sympathetic to our own so we’re very glad to be involved in the development of this important book. Stay tuned for more details.

Welcome aboard, Siva.