Assignment Zero, an experimental news site that brings professional journalists together with volunteer researcher-reporters to collaboratively write stories, has kicked off its tenure at Wired News by doing an extended investigation of “crowdsourcing.” Crowdsourcing is the latest internet parlance used to describe work traditionally carried out by one or a few persons being distributed among many people. I’ve always found something objectionable about the term, which is more suggestive of a business model than a creative strategy and sidesteps the numerous ethical questions about peer production and corporate exploitation that are inevitably bound up in it. But it’s certainly a subject that could use a bit of scrutiny, and who better to do it than a journalistic team composed of the so-called crowd?

It is in this self-reflexive spirit that Jay Rosen, an exceedingly sharp thinker on the future of journalism and executive editor of Assignment Zero (and the related NewAssignment.net), presents an interesting series of features assembled by his “pro-am” team that look at a wide variety of online collaboration forms. This package has been in development for several months (many of the pieces contain links back to the original “assignments” and you can see how they evolved) and there’s a lot there: 80 Q&A’s, essays and stories (mostly Q&A’s) looking at innovative practices and practitioners across media types and cultural/commercial arenas. From an initial sifting, it’s less an analysis than just a big collection of perspectives, but this is valuable, I think, if for no other reason than as a jumping-off point for further research.

There are many of the usual suspects like Benkler, Lessig, Jarvis, Shirky, Surowiecki, Wales etc., but as many or more of the pieces venture off the beaten track. There’s a thought-provoking interview with Douglas Rushkoff on open source as a cultural paradigm, some stuff on the Wu Ming fiction collective (which is fascinating), a piece about Sydney Poore, a Wikipedia “super-contributor,” and some coverage of our own work: an interview with McKenzie Wark about Gamer Theory and collaborative writing. There’s also an essay by one of the Assignment Zero contributors, Kristin Gorski, synthesizing some of the material gathered on the latter subject: “Creative Crowdwriting: The Open Book.”

All in all this seems like a successful test drive for an experimental group that is still inventing its process. I’m interested to see how it develops with other less “wired” subjects.

google flirts with image tagging

Ars Technica reports that Google has begun outsourcing, or “crowdsourcing,” the task of tagging its image database by asking people to play a simple picture labeling game. The game pairs you with a randomly selected online partner, then, for 90 seconds, runs you through a sequence of thumbnail images, asking you to add as many labels as come to mind. Images advance whenever you and your partner hit upon a match (an agreed-upon tag), or when you agree to take a pass.

I played a few rounds but quickly grew tired of the bland consensus that the game encourages. Matches tend to be banal, basic descriptors, while anything tricky usually results in a pass. In other words, all the pleasure of folksonomies — splicing one’s own idiosyncratic sense of things with the usually staid task of classification — is removed here. I don’t see why they don’t open the database up to much broader tagging. Integrate it with the image search and harvest a bigger crop of metadata.

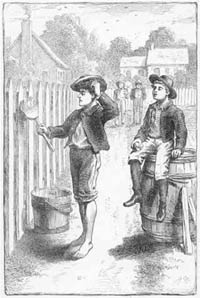

Right now, it’s more like Tom Sawyer tricking the other boys into whitewashing the fence. Only, I don’t think many will fall for this one because there’s no real incentive to participate beyond a halfhearted points system. For every matched tag, you and your partner score points, which accumulate in your Google account the more you play. As far as I can tell, though, points don’t actually earn you anything apart from a shot at ranking in the top five labelers, which Google lists at the end of each game. Whitewash, anyone?

In some ways, this reminded me of Amazon’s Mechanical Turk, an “artificial artificial intelligence” service where anyone can take a stab at various HIT’s (human intelligence tasks) that other users have posted. Tasks include anything from checking business hours on restaurant web sites against info in an online directory, to transcribing podcasts (there are a lot of these). “Typically these tasks are extraordinarily difficult for computers, but simple for humans to answer,” the site explains. In contrast to the Google image game, with the Mechanical Turk, you can actually get paid. Fees per HIT range from a single penny to several dollars.

I’m curious to see whether Google goes further with tagging. Flickr has fostered the creation of a sprawling user-generated taxonomy for its millions of images, but the incentives to tagging there are strong and inextricably tied to users’ personal investment in the production and sharing of images, and the building of community. Amazon, for its part, throws money into the mix, which (however modest the sums at stake) makes Mechanical Turk an intriguing, and possibly entertaining, business experiment, not to mention a place to make a few extra bucks. Google’s experiment offers neither, so it’s not clear to me why people should invest.