Last week got off to an exciting start when the Wall Street Journal ran a story about “networked books,” the Institute’s central meme and very own coinage. It turns out we were quoted in another WSJ item later that week, this time looking at the science journal Nature, which over the summer has been experimenting with opening up its peer review process to the scientific community (unfortunately, this article, like the networked books piece, is subscriber only).

I like this article because it smartly weaves in the story of Grigory (Grisha) Perelman, which I had meant to write about earlier. Perelman is a Russian topologist who last month shocked the world by turning down the Fields Medal, the highest honor in mathematics. He was awarded the prize for unraveling a famous geometry problem that had baffled mathematicians for a century.

There’s an interesting publishing angle to this, which is that Perelman never submitted his groundbreaking papers to any mathematics journals, but posted them directly to ArXiv.org, an open “pre-print” server hosted by Cornell. This, combined with a few emails notifying key people in the field, guaranteed serious consideration for his proof, and led to its eventual warranting by the mathematics community. The WSJ:

…the experiment highlights the pressure on elite science journals to broaden their discourse. So far, they have stood on the sidelines of certain fields as a growing number of academic databases and organizations have gained popularity.

One Web site, ArXiv.org, maintained by Cornell University in Ithaca, N.Y., has become a repository of papers in fields such as physics, mathematics and computer science. In 2002 and 2003, the reclusive Russian mathematician Grigory Perelman circumvented the academic-publishing industry when he chose ArXiv.org to post his groundbreaking work on the Poincaré conjecture, a mathematical problem that has stubbornly remained unsolved for nearly a century. Dr. Perelman won the Fields Medal, for mathematics, last month.

(Warning: obligatory horn toot.)

“Obviously, Nature’s editors have read the writing on the wall [and] grasped that the locus of scientific discourse is shifting from the pages of journals to a broader online conversation,” wrote Ben Vershbow, a blogger and researcher at the Institute for the Future of the Book, a small, Brooklyn, N.Y., nonprofit, in an online commentary. The institute is part of the University of Southern California’s Annenberg Center for Communication.

Also worth reading is this article by Sylvia Nasar and David Gruber in The New Yorker, which reveals Perelman as a true believer in the gift economy of ideas:

Perelman, by casually posting a proof on the Internet of one of the most famous problems in mathematics, was not just flouting academic convention but taking a considerable risk. If the proof was flawed, he would be publicly humiliated, and there would be no way to prevent another mathematician from fixing any errors and claiming victory. But Perelman said he was not particularly concerned. “My reasoning was: if I made an error and someone used my work to construct a correct proof I would be pleased,” he said. “I never set out to be the sole solver of the Poincaré.”

Perelman’s rejection of all conventional forms of recognition is difficult to fathom at a time when every particle of information is packaged and owned. He seems almost like a kind of mystic, a monk who abjures worldly attachment and dives headlong into numbers. But according to Nasar and Gruber, both Perelman’s flouting of academic publishing protocols and his refusal of the Fields Medal were conscious protests against what he saw as the petty ego politics of his peers. He claims now to have “retired” from mathematics, though presumably he’ll continue to work on his own terms, in between long rambles through the streets of St. Petersburg.

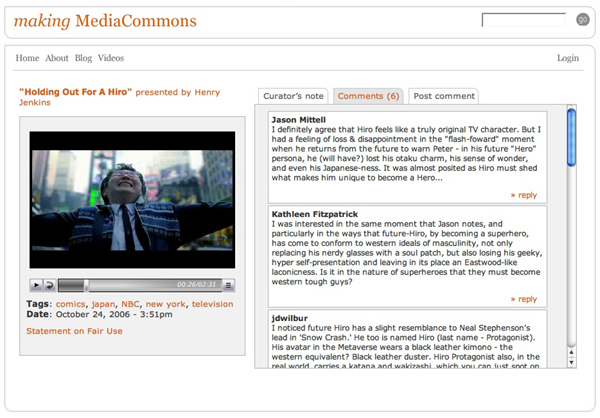

Regardless, Perelman’s case is noteworthy as an example of the kind of critical discussions that scholars can now orchestrate outside the gate. This sort of thing is generally more in evidence in the physical and social sciences, but it ought also to be of great interest to scholars in the humanities, who have only just begun to explore the possibilities. Indeed, these are among our chief inspirations for MediaCommons.

Academic presses and journals have long functioned as the gatekeepers of authoritative knowledge, determining which works see the light of day and which ones don’t. But open repositories like ArXiv have utterly changed the calculus, and Perelman’s insurrection only serves to underscore this fact. Given the abundance of material being published directly from author to public, the critical task for the editor now becomes that of determining how works already in the daylight ought to be received. Publishing isn’t an endpoint; it’s the beginning of a process. The networked press is a guide, a filter, and a discussion moderator.

Nature seems to grasp this and is trying with its experiment to reclaim some of the space that has opened up in front of its gates. Though I don’t think they go far enough to effect serious change, their efforts certainly point in the right direction.
