Looks like it’s hardware day at if:book. Just got a video iPod. Hmmm. this looks like another niche where Apple has handily beat Sony. the image is crisper and larger than i expected; the case is slimmer and lighter.

it’s remarkably easy to convert video files to MP4 and load them onto the iPod. the experience is intimate. i’m experimenting with different genres: poetry, animation, family home video, short films. everything works. the iPod got handed around from ben to dan to ray to jesse. we came up with a bunch of ideas for projects we want to try. stay tuned.

Monthly Archives: January 2006

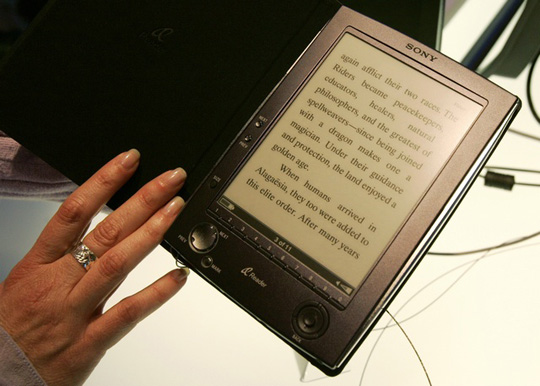

first sighting of sony ebook reader

this is a late addition to this post. i just realized that whatever the strengths and weaknesses of the Sony ebook reader, i think that most of the people writing about it, including me, have missed perhaps the most important aspect: the device has Sony’s name on it. correct me if i’m wrong, but this is the first time a major consumer electronics company has seen fit to put their name on an ebook reader in the US market. it’s been a long time coming.

Reuters posted this image by Rick Wilking. every post i’ve seen so far is pessimistic about sony’s chances. i’m doubtful myself, but will wait to see what kind of digital rights management they’ve installed. if it’s easy to take things off my desktop to read later, including pdfs and web pages, and if the MP3 player feature is any good, they might be able to carve out a niche which they can expand over time if they keep developing the concept. i do wish it were a bit more stylish . . .

here’s a link to ben’s excellent post ipod for text.

useful rss

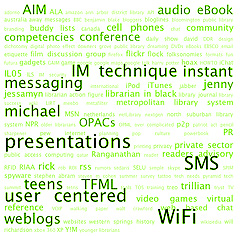

Hi. I’m Jesse, the latest member to join the staff here at the Institute. I’m interested in network effects, online communities, and emergent behavior. Right now I’m interested in the tools we have available to control and manipulate RSS feeds. My goal is to collect a wide variety of feeds and tease out the threads that are important to me. In my experience, mechanical aggregation gives you quantity and diversity, but not quality and focus. So I did a quick investigation of the tools that exist to manage and manipulate feeds.

Sites like MetaFilter and Technorati skim the most popular topics in the blogosphere. But what sort of tools exist to help us narrow our focus? There are two tools that we can use right now: tag searches/filtering, and keyword searching. Tag searches (on Technorati) and tag filtering (on MetaFilter) drill down to specific areas, like “books” or “books and publishing.” A casual search on MetaFilter was a complete failure, but Technorati, with its combination of tags and keyword search results, produced good material.

There is also the Google Blog search. As Google puts it, you can ‘find blogs on your favorite topics.’ PageRank works, so PageRank applied to blogs should work too. Unfortunately it results in too many pages that, while higher ranked in the whole set of the Internet, either fail to be on topic or exist outside of the desired sub-spheres of a topic. For example, I searched for “gourmet food” and found one of the premier food blogs on the fourth page, just below Carpundit. Google blog search fails here because it can’t get small enough to understand the relationships in the blogosphere, and relies more heavily on text retrieval algorithms that sabotage the results.

Finally, let’s talk about aggregators. There is more human involvement in selecting sites you’re interested in reading. This creates a personalized network of sites that are related, if only by your personal interest. The problem is, you get what they want to write about. Managing a large collection of feeds can be tiresome when you’re looking for specific information. Bloglines has a search function that allows you to find keywords inside your subscriptions, then treat that as a feed. This neatly combines hand-picked sources with keyword or tag harvesting. The result: a slice from your trusted collection of authors about a specific topic.
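To make the idea of a keyword slice over hand-picked subscriptions concrete, here is a minimal sketch in Python. It is not how Bloglines works internally (that isn't described here); it simply assumes each subscription is available as an RSS 2.0 document and scans item titles and descriptions for a keyword. The function name `keyword_slice` and the sample feeds are made up for illustration.

```python
import xml.etree.ElementTree as ET


def keyword_slice(feeds, keyword):
    """Return (feed title, item title, link) for every item mentioning keyword.

    `feeds` is a list of RSS 2.0 documents as strings. This is an assumed
    input format for the sketch, not any real aggregator's API.
    """
    needle = keyword.lower()
    matches = []
    for xml_text in feeds:
        channel = ET.fromstring(xml_text).find("channel")
        if channel is None:
            continue  # not an RSS 2.0 document; skip it
        source = channel.findtext("title", default="")
        for item in channel.findall("item"):
            title = item.findtext("title", default="")
            body = item.findtext("description", default="")
            # Case-insensitive substring match on title plus description.
            if needle in (title + " " + body).lower():
                link = item.findtext("link", default="")
                matches.append((source, title, link))
    return matches


# Two tiny, made-up feeds standing in for a hand-picked subscription list.
SAMPLE_FEEDS = [
    """<rss version="2.0"><channel><title>Blog A</title>
    <item><title>New ebook reader</title><link>http://example.com/a1</link>
    <description>Notes on e-ink.</description></item>
    <item><title>Lunch</title><link>http://example.com/a2</link>
    <description>Soup again.</description></item>
    </channel></rss>""",
    """<rss version="2.0"><channel><title>Blog B</title>
    <item><title>Weekend reading</title><link>http://example.com/b1</link>
    <description>An ebook I liked.</description></item>
    </channel></rss>""",
]

if __name__ == "__main__":
    for source, title, link in keyword_slice(SAMPLE_FEEDS, "ebook"):
        print(f"{source}: {title} ({link})")
```

Plain substring matching is the crudest possible filter; a real tool would likely match on tags as well, but the shape is the same: trusted sources in, one topical slice out.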

What can we envision for the future of RSS? Affinity mapping and personalized recommendation systems could augment the tag/keyword search functionality to automatically generate a slice from a small network of trusted blogs. Automatic harvesting of whole swaths of linked entries for offline reading in a bounded hypertext environment. Reposting and remixing feed content on the fly based on text-processing algorithms. And we’ll have to deal with the dissolving identity and trust relationships that are a natural consequence of these innovations.

two newspapers

I picked up The New York Times from outside my door this morning knowing that the lead headline was going to be wrong. I still read the print paper every morning – I do read the electronic version, but I find that my reading there tends to be more self-selecting than I’d like it to be – but lately I find myself checking the Web before settling down to the paper and a cup of coffee. On the Web, I’d already seen the predictable gloating and hand-wringing in evidence there. Because of some communication mixup, the papers went to press with the information that the trapped West Virginia coal miners were mostly alive; a few hours later it turned out that they were, in fact, mostly dead. A scrutiny of the front pages of the New York dailies at the bodega this morning revealed that just about all had the wrong news – only Hoy, a Spanish-language daily, didn’t have the story, presumably because it went to press a bit earlier. At right is the front page of today’s USA Today, the nation’s most popular newspaper; click on the thumbnail for a more legible version. See also the gallery at their “newseum”. (Note that this link won’t show today’s papers tomorrow – my apologies, readers of the future, there doesn’t seem to be anything that can be done for you, copyright and all that.)

At left is another front page of a newspaper, The New York Times from April 20, 1950 (again, click to see a legible version). I found it last night at the start of Marshall McLuhan’s The Mechanical Bride: Folklore of Industrial Man. Published in 1951, The Mechanical Bride is one of McLuhan’s earliest works; in it, he primarily looks at the then-current world of print advertising, starting with the front page shown here. To my jaundiced eye, most of the book hasn’t stood up that well; while it was undoubtedly very interesting at the time – being one of the first attempts to seriously deal with how people interact with advertisements from a critical perspective – fifty years, and billions and billions of advertisements later, it doesn’t stand up as well as, say, Judith Williamson’s Decoding Advertisements manages to. But bits of it are still interesting: McLuhan presents this front page to talk about how Stéphane Mallarmé and the Symbolists found the newspaper to be the modern symbol of their day, with the different stories all jostling each other for prominence on the page.

But you don’t – at least, I don’t – immediately see that when you look at the front page that McLuhan exhibits. This was presumably an extremely ordinary front page when he was exhibiting it, just as the USA Today up top might be representative today. Looked at today, though, it’s something else entirely, especially when you consider what newspapers look like now. You can notice this even in my thumbnails: when both papers are normalized to 200 pixels wide, you can’t read anything in the old one, besides that it says “The New York Times” at the top, whereas you can make out the headlines to four stories in the USA Today. Newspapers have changed, not just from black & white to color, but in the way they present text and images. In the old paper there are only two photos, headshots of white men in the news – one a politician who’s just given a speech, the other a doctor who’s had his license revoked. The USA Today has perhaps an analogue to that photo in Jack Abramoff’s perp walk; it also has five other photos: one of the miners’ deluded family members (along with Abramoff, the only news photos), two sports-related photos – one of which seems to be stock footage of the Rose Bowl sign – a photo advertising television coverage inside, and a photo of two students for a human interest story. This being the USA Today, there’s also a silly graph in the bottom left; the green strip across the bottom is an ad.

Photos and graphics take up more than a third of the front page of today’s paper.

What’s overwhelming to me about the old Times cover is how much text there is. This was not a newspaper that was meant to be read at a glance – as you can do with the thumbnail of the USA Today. If you look at the Times more closely it looks like everything on the front page is serious news. You could make an argument here about the decline of journalism, but I’m not that interested in that. More interesting is how visual print culture has become. Technology has enabled this – a reasonably intelligent high-schooler could, I think, create a layout like the USA Today. But having this possibility available would also seem to have had an impact on the content – and whether McLuhan would have predicted that, I can’t say.

wikipedia, lifelines, and the packaging of authority

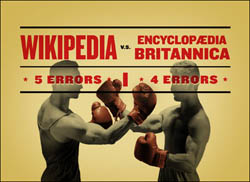

In a nice comment in yesterday’s Times, “The Nitpicking of the Masses vs. the Authority of the Experts,” George Johnson revisits last month’s Seigenthaler smear episode and Nature magazine’s Wikipedia-Britannica comparison, and decides to place his long-term bets on the open-source encyclopedia:

It seems natural that over time, thousands, then millions of inexpert Wikipedians – even with an occasional saboteur in their midst – can produce a better product than a far smaller number of isolated experts ever could.

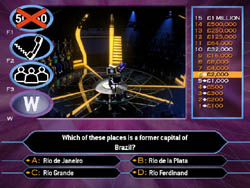

Reading it, a strange analogy popped into my mind: “Who Wants to Be a Millionaire.” Yes, the game show. What does it have to do with encyclopedias, the internet and the re-mapping of intellectual authority? I’ll try to explain. “Who Wants to Be a Millionaire” is a simple quiz show, very straightforward, like “Jeopardy” or “The $64,000 Question.” A single contestant answers a series of multiple choice questions, and with each question the money stakes rise toward a million-dollar jackpot. The higher the stakes the harder the questions (and some seriously overdone lighting and music is added for maximum stress). There is a recurring moment in the game when the contestant’s knowledge fails and they have the option of using one of three “lifelines” that have been allotted to them for the show.

The first lifeline (and these can be used in any order) is the 50:50, which simply reduces the number of possible answers from four to two, thereby doubling your chances of selecting the correct one: a simple jiggering of probabilities. The other two are more interesting. The second lifeline is a telephone call to a friend or relative at home who is given 30 seconds to come up with the answer to the stumper question. This is a more interesting kind of a probability, since it involves a personal relationship. It deals with who you trust, who you feel you can rely on. Last, and my favorite, is the “ask the audience” lifeline, in which the crowd in the studio is surveyed and hopefully musters a clear majority behind one of the four answers. Here, the probability issue gets even more intriguing. Your potential fortune is riding on the knowledge of a room full of strangers.

In most respects, “Who Wants to Be a Millionaire” is just another riff on the classic quiz show genre, but the lifeline option pegs it in time, providing a clue about its place in cultural history. The perceptive game show anthropologist would surely recognize that the lifeline is all about the network. It’s what gives “Millionaire” away as a show from around the time of the tech bubble in the late 90s — manifestly a network-era program. Had it been produced in the 50s, the lifeline option would have been more along the lines of “ask the professor!” Lights rise on a glass booth containing a mustached man in a tweed jacket sucking on a pipe. Our cliché of authority. But “Millionaire” turns not to the tweedy professor in the glass booth (substitute ivory tower) but rather to the swarming mound of ants in the crowd.

And that’s precisely what we do when we consult Wikipedia. It isn’t an authoritative source in the professor-in-the-booth sense. It’s more lifeline number 3 — hive mind, emergent intelligence, smart mobs, there is no shortage of colorful buzzwords to describe it. We’ve always had lifeline number 2. It’s who you know. The friend or relative on the other end of the phone line. Or think of the whispered exchange between students in the college library reading room, or late-night study in the dorm. Suddenly you need a quick answer, an informal gloss on a subject. You turn to your friend across the table, or sprawled on the couch eating Twizzlers: When was the Glorious Revolution again? Remind me, what’s the Uncertainty Principle?

With Wikipedia, this friend factor is multiplied by an order of millions — the live studio audience of the web. This is the lifeline number 3, or network, model of knowledge. Individual transactions may be less authoritative, pound for pound, paragraph for paragraph, than individual transactions with the professors. But as an overall system to get you through a bit of reading, iron out a wrinkle in a conversation, or patch over a minor factual uncertainty, it works quite well. And being free and informal it’s what we’re more inclined to turn to first, much more about the process of inquiry than the polished result. As Danah Boyd puts it in an excellently measured defense of Wikipedia, it “should be the first source of information, not the last. It should be a site for information exploration, not the definitive source of facts.” Wikipedia advocates and critics alike ought to acknowledge this distinction.

So, having acknowledged it, can we then broker a truce between Wikipedia and Britannica? Can we just relax and have the best of both worlds? I’d like that, but in the long run it seems that only one can win, and if I were a betting man, I’d have to bet with Johnson. Britannica is bound for obsolescence. A couple of generations hence (or less), who will want it? How will it keep up with this larger, far more dynamic competitor that is already roughly equal in quality in certain crucial areas?

Just as the printing press eventually drove the monastic scriptoria out of business, Wikipedia’s free market of knowledge, with all its abuses and irregularities, its palaces and slums, will outperform Britannica’s centralized command economy, with its neat, cookie-cutter housing slabs, its fair, dependable, but ultimately less dynamic, system. But, to stretch the economic metaphor just a little further before it breaks, it’s doubtful that the free market model will remain unregulated for long. At present, the world is beginning to take notice of Wikipedia. A growing number are championing it, but for most, it is more a grudging acknowledgment, a recognition that, for better or for worse, what’s going on with Wikipedia is significant and shouldn’t be ignored.

Eventually we’ll pass from the current phase into widespread adoption. We’ll realize that Wikipedia, being an open-source work, can be repackaged in any conceivable way, for profit even, with no legal strings attached (it already has been on sites like about.com and thousands — probably millions — of spam and link farms). As Lisa intimated in a recent post, Wikipedia will eventually come in many flavors. There will be commercial editions, vetted academic editions, handicap-accessible editions. Darwinist editions, creationist editions. Google, Yahoo and Amazon editions. Or, in the ultimate irony, Britannica editions! (If you can’t beat ’em…)

All the while, the original Wikipedia site will carry on as the sprawling community garden that it is. The place where a dedicated minority take up their clippers and spades and tend the plots. Where material is cultivated for packaging. Right now Wikipedia serves best as an informal lifeline, but soon enough, people will begin to demand something more “authoritative,” and so more will join in the effort to improve it. Some will even make fortunes repackaging it in clever ways for which people or institutions are willing to pay. In time, we’ll likely all come to view Wikipedia, or its various spin-offs, as a resource every bit as authoritative as Britannica. But when this happens, it will no longer be Wikipedia.

Authority, after all, is a double-edged sword, essential in the pursuit of truth, but dangerous when it demands that we stop asking questions. What I find so thrilling about the Wikipedia enterprise is that it is so process-oriented, that its work is never done. The minute you stop questioning it, stop striving to improve it, it becomes a museum piece that tells the dangerous lie of authority. Even those of us who do not take part in the editorial gardening, who rely on it solely as lifeline number 3, feel the crowd rise up to answer our query; we take the knowledge it gives us, but not (unless we are lazy) without a grain of salt. The work is never done. Crowds can be wrong. But we were not asking for all doubts to be resolved, we wanted simply to keep moving, to keep working. Sometimes authority is just a matter of packaging, and the packaging bonanza will soon commence. But I hope we don’t lose the original Wikipedia, the rowdy community garden, lifeline number 3. A place that keeps you on your toes, that resists tidy packages.

new mission statement

the institute is a bit over a year old now. our understanding of what we’re doing has deepened considerably during the year, so we thought it was time for a serious re-statement of our goals. here’s a draft for a new mission statement. we’re confident that your input can make it better, so please send your ideas and criticisms.

OVERVIEW

The Institute for the Future of the Book is a project of the Annenberg Center for Communication at USC. Starting with the assumption that the locus of intellectual discourse is shifting from printed page to networked screen, the primary goal of the Institute is to explore, understand and hopefully influence this evolution.

THE BOOK

We use the word “book” metaphorically. For the past several hundred years, humans have used print to move big ideas across time and space for the purpose of carrying on conversations about important subjects. Radio, movies, TV emerged in the last century and now with the advent of computers we are combining media to forge new forms of expression. For now, we use “book” to convey the past, the present transformation, and a number of possible futures.

THE WORK & THE NETWORK

One major consequence of the shift to digital is the addition of graphical, audio, and video elements to the written word. More profound, however, are the consequences of the relocation of the book within the network. We are transforming books from bounded objects to documents that evolve over time, bringing about fundamental changes in our concepts of reading and writing, as well as the role of author and reader.

SHORT TERM/LONG TERM

The Institute values theory and practice equally. Part of our work involves doing what we can with the tools at hand (short term). Examples include last year’s Gates Memory Project or the new author’s thinking-out-loud blogging effort. Part of our work involves trying to build new tools and effect industry-wide change (medium term): see the Sophie Project and NextText. And a significant part of our work involves blue-sky thinking about what might be possible someday, somehow (long term). Our blog, if:book, covers the full range of our interests.

CREATING TOOLS

As part of the Mellon Foundation’s project to develop an open-source digital infrastructure for higher education, the Institute is building Sophie, a set of high-end tools for writing and reading rich media electronic documents. Our goal is to enable anyone to assemble complex, elegant, and robust documents without the necessity of mastering overly complicated applications or the help of programmers.

NEW FORMS, NEW PROCESSES

Academic institutes arose in the age of print, which informed the structure and rhythm of their work. The Institute for the Future of the Book was born in the digital era, and we seek to conduct our work in ways appropriate to the emerging modes of communication and rhythms of the networked world. Freed from the traditional print publishing cycles and hierarchies of authority, the Institute seeks to conduct its activities as much as possible in the open and in real time.

HUMANISM & TECHNOLOGY

Although we are excited about the potential of digital technologies to amplify human potential in wondrous ways, we believe it is crucial to consciously consider the social impact of the long-term changes to society afforded by new technologies.

BEYOND BORDERS

Although the institute is based in the U.S., we take seriously the potential of the internet and digital media to transcend borders. We think it’s important to pay attention to developments all over the world, recognizing that the future of the book will likely be determined as much by Beijing, Buenos Aires, Cairo, Mumbai and Accra as by New York and Los Angeles.

the year in ideas

In developed nations, and in the US in particular, high-speed wireless access to the Internet is a given for the affluent and an achievable possibility for most. In the rest of the world, owning a computer is a dream for a community, and a fantasy for the individual. At this moment, away in the central mountains of Colombia, I am virtually disconnected from the world, though quite connected to the splendor of nature. I’m writing this relying on uncertain electricity that, if it fails, will be backed up by a gas generator that will keep food fresh and beer cold, the hell with the laptop. Reading one of last week’s Medellín newspapers, I was surprised to see news of the advent of the BlueBerry as a technological advance that will reach the city in early 2006. Medellín is a booming, sophisticated Third World city of more than 3.5 million people. This piece of news made clearer for me, more than ever, how in the US we take technology for granted when, in fact, it is the domain of only a small minority of the world. This doesn’t mean that the rest don’t need connectivity; it means that if they are being pushed to play in the global monopoly game, they must have it. From that perspective, I turn to the New York Times Magazine’s fifth edition of “The Year in Ideas” (12/11/2005). As always, it examines a number of trends and fads that, in one way or another, were markers of the year. Considering the year at the Institute and its pursuit of the meaningful among myriad innovations, I’ll review some of the ideas the Times chose that intersect with the ones the Institute brought to the fore throughout the year. Beyond the noteworthy technological inventions, it is the human contribution, the users’ innovative ways of dealing with what already exists on the Internet, that makes them worth reflecting upon.

The political power of the blogosphere is an accepted fact, but it is the media infrastructure that passes on what is said on blogs that has given the conservatives the upper hand. Even though Howard Dean’s campaign epitomized the power of the liberal blogosphere, the so-called “Net roots” continue to be regarded as the terrain of young people with time on their hands to participate in virtual dialogue. The liberals’ approach to blogs, as a forum to air ideas and to criticize not only their opponents but also each other, differs greatly from that of the conservatives. The latter are not particularly interested in introspection and use the Web to support their issues and to induce emotional responses from their base. But it is their connection to a network of local and national talk-radio and TV shows that has given exposure and credibility to the conservative blogs. Here, we have a sad, but true, example of how it is the coalescence of different media that matters, not their insular existence.

The news media increasingly have been using the Web both as an enhancer and as a way to achieve two-way communications with the public. An exciting example of the meeting of journalism and the blog is the New Orleans Times-Picayune. Before Katrina hit the city, they set up a page on their Web site called “Hurricane Katrina Weblog.” Its original function was supplemental. However, when the flood came and the printed edition was shut down, the blog became the newspaper. Even though the paper’s staff kept compiling a daily edition as a download, the blog was brimming with posts appearing throughout the day and readership grew exponentially, getting 20 to 30 million page views per day. The paper continued posting carefully edited stories interspersed with short dispatches phoned or e-mailed to the newspaper’s new headquarters in Baton Rouge. In the words of Paul Tough, “what resulted was exciting and engrossing and new, a stream-of-consciousness hybrid that combined the immediacy and scattershot quality of a blog with the authority and on-the-scene journalism of a major daily newspaper.”

Joshua M. Marshall, editor of the blog Talkingpointsmemo.com, decided to ask his readers to share their knowledge of the ever-spreading Washington scandals in an effort to keep abreast of news. He called his experiment “open-source investigative reporting.” Marshall’s blog has about 100,000 readers a day and he saw in them the potential to gather news on a nationwide basis. For instance, he relied on his readers’ expertise with the Congressional ethics code in order to determine if Jack Abramoff’s gifts were violations. What Marshall has come up with is a very large news-gathering and fact-checking network, a healthy alternative to traditional journalism.

Podcasting has become another alternative to broadcasting, one that provides the ability to access audio and video programs as soon as they’re delivered to your computer, or to pile them up as you do with written media. Now, through iTunes, we are experiencing the advent of homemade video podcasts. Some of them already have thousands of viewers. Potentially, this could become the next step of community access television.

The mash-up of data from different web sites has gained thousands of adherents. One of the first was Adrian Holovaty’s Chicagocrime.org, a street map of Chicago from Google overlaid with crime statistics from the Chicago Police online database. Following this, many people started to make annotated maps, organizing all sorts of information geographically, from real-estate listings to memory maps. The social possibilities of this personal cartography are enormous. The Times brings Matthew Haughey’s “My Childhood, Seen by Google Maps,” as an example of an elegant and evocative project. If we think of the illuminated maps that expanded the world and ignited the imagination of many explorers, this new form of cartography brings a similar human dimension to the perfect satellite maps.

Thomas Vander Wal has given the name “folksonomy” to tagging taken to the level of taxonomy. The labeling of people’s photos, on Flickr for instance, gets richer by the additions of others who tag the same photos for their own use. Daniel H. Pink claims, “The cumulative force of all the individual tags can produce a bottom-up, self organized system for classifying mountains of digital material.” In an interesting twist, several institutions that are part of the Art Museum Community Cataloging Project, including the San Francisco Museum of Modern Art and the Guggenheim, are taking a folksonomic approach to their online collections by allowing patrons to supplement the annotations done by curators, making them more accessible and useful to people.

The effort of Nicholas Negroponte, chairman of MIT’s Media Lab, to raise the funds to have a group of his colleagues design a no-frills, durable, and cheap computer for the children of the world is a terrific one. Having laptops equipped with a hand crank, for use in the absence of electricity, and using wireless peer-to-peer connections to create a local network will make it easier to access the Internet from economically challenged areas of the world, notwithstanding the difficulties this presents. The detractors of Negroponte’s effort claim that children in Africa, for instance, will not benefit from having access to the libraries of the world if they don’t understand foreign languages; that children with little exposure to modern civilization will suddenly have access to pornography and commercialism; and that wealthy donors should concentrate on malaria eradication before giving an e-mail address to every child. Negroponte, like Jeffrey Sachs, Bono, Kofi Annan, and many others, knows that education, along with connectivity, is key to bringing the next generation out of the poverty cycle to which they have been condemned by foreign powers interested in the resources of their countries, and by every corrupt local regime that has worked along the lines of those powers. The $100 laptop, accompanied by a sound and humane program for its use, will bring enormous benefits.

A. O. Scott’s review of the documentary as a genre that supplies satisfaction not from Hollywood formulas but from the real world, reminded me of Bob Stein’s quest for thrills beyond technologically enhanced reality. A factor of the postmodern condition is the unprecedented immediate accessibility to the application of scientific knowledge that technology brings, accessibility that has permeated our relationships with and towards everything. Knowledge has acquired an unsettling superficiality because it has become an economic product. Technology is used and abused, forced upon the consumer in all sorts of ways and Hollywood’s productions are the obvious example. 2005 was the year of the documentary, and I suspect this has to do with a yearning for the human, for the real, for the immediate, for the unmediated. A. O. Scott eloquently traces that line when he praises Luc Jacquet’s “March of the Penguins” as the documentary that hits it all; epic journey, humor, tenderness and suspense, as well as “an occasion for culture-war skirmishing. In short it provided everything you’d want from a night at the movies, without stars or special effects. It’s almost too good to be true.” With that I greet 2006.