I had an exchange about my previous post with an RDF expert, who explained to me that APIs are not like RDF and that it would be incorrect to equate them. She’s right: APIs do not replace the need for RDF, nor do they replicate its functionality. APIs do provide access to data, but that data can take many forms, including RDF serialized as XML. This is one of the pleasures and privileges of writing on this blog: the audience contributes at a very high level of discourse and brings extremely deep knowledge of the topics under discussion.

I want to reiterate my point with a new inflection. By suggesting that APIs were an alternative to RDF, I was trying to get at a point that had more to do with adoption than functionality. I admit I did not make the point well, so let me make a second attempt: APIs are about data access, and that, currently (and in my anecdotal experience), is where the value proposition lies for the new breed of web services. You have your data in someone’s database. That data is accessible to developers, who can manipulate it and present it back to you in new, innovative, and useful ways. Most of the attention in the webdev community is turning towards the development of new interfaces, not towards new tools to manage and enrich the data (again, anecdotal evidence only). Yes, there are people still interested in semantic data; we are indebted to them for continuing to improve the way our systems interact at a data level. But the focus of development has shifted to the interface. APIs make the gathering of data as simple as setting parameters, leaving only the work of designing the front-end experience.

Another note on RDF from my exchange: it was pointed out that practitioners of RDF prefer not to read it in XML, but instead use Notation 3 (N3), which is undeniably easier to read than XML. I don’t know enough about N3 to make a proper example, but I think you can get the idea if you look at the examples here and here.
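
For readers who want a concrete comparison, here is a minimal sketch in Python that writes the same single statement ("this book has the title Moby-Dick") once as RDF/XML and once as N3. The book URI, the title, and the helper names `to_rdfxml` and `to_n3` are assumptions made up for illustration; they are not part of any RDF library. Only the RDF and Dublin Core namespace URIs are real.

```python
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape, quoteattr

RDF_NS = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
DC_NS = "http://purl.org/dc/elements/1.1/"  # Dublin Core elements namespace


def to_rdfxml(subject: str, dc_term: str, value: str) -> str:
    """Write one (subject, dc:term, literal) statement as RDF/XML."""
    return (
        '<?xml version="1.0"?>\n'
        f'<rdf:RDF xmlns:rdf="{RDF_NS}"\n'
        f'         xmlns:dc="{DC_NS}">\n'
        f'  <rdf:Description rdf:about={quoteattr(subject)}>\n'
        f'    <dc:{dc_term}>{escape(value)}</dc:{dc_term}>\n'
        '  </rdf:Description>\n'
        '</rdf:RDF>\n'
    )


def to_n3(subject: str, dc_term: str, value: str) -> str:
    """Write the same statement as N3: one prefix line, one triple line."""
    literal = value.replace("\\", "\\\\").replace('"', '\\"')
    return (
        f'@prefix dc: <{DC_NS}> .\n'
        f'<{subject}> dc:{dc_term} "{literal}" .\n'
    )


# Hypothetical example data, not taken from any real dataset.
book = "http://example.org/book/1"
xml_doc = to_rdfxml(book, "title", "Moby-Dick")
n3_doc = to_n3(book, "title", "Moby-Dick")

# Parsing confirms the RDF/XML is at least well-formed XML.
root = ET.fromstring(xml_doc)

print(xml_doc)
print(n3_doc)
```

The N3 version states the subject, the property, and the value on one line, in that order, while the XML version spreads the same three pieces across nested elements and attributes. That difference is most of what people mean when they say N3 is easier to read.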

RDF = bigger piles

Last week, at a meeting of all the Mellon-funded projects, I heard a lot of discussion about RDF as a key technology for interoperability. RDF (Resource Description Framework) is a data model for machine-readable metadata and a necessary, but not sufficient, requirement for the semantic web. On top of this data model you need applications that can read RDF. On top of the applications you need the ability to understand the meaning in the RDF-structured data. This is the really hard part: matching the meaning of two pieces of data from two different contexts still requires human judgement. There are people working on the complex algorithmic gymnastics to make this easier, but so far it’s still in the realm of the experimental.
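
To make the first two layers concrete, here is a minimal sketch in Python of the RDF data model (statements as subject, predicate, object triples) and of the simplest thing an application can do with it, which is pattern matching. The `Triple` type, the `match` function, and the example.org data are hypothetical illustrations, not any real RDF toolkit; the Dublin Core property URIs are real.

```python
from typing import List, NamedTuple, Optional


class Triple(NamedTuple):
    """One RDF statement: subject, predicate, object."""
    subject: str
    predicate: str
    obj: str


DC_TITLE = "http://purl.org/dc/elements/1.1/title"
DC_CREATOR = "http://purl.org/dc/elements/1.1/creator"

# Hypothetical example graph.
GRAPH = [
    Triple("http://example.org/book/1", DC_TITLE, "Moby-Dick"),
    Triple("http://example.org/book/1", DC_CREATOR, "Herman Melville"),
    Triple("http://example.org/book/2", DC_CREATOR, "Jane Austen"),
]


def match(
    graph: List[Triple],
    subject: Optional[str] = None,
    predicate: Optional[str] = None,
    obj: Optional[str] = None,
) -> List[Triple]:
    """Return every triple that fits the pattern; None acts as a wildcard."""
    pattern = (subject, predicate, obj)
    return [
        t for t in graph
        if all(want is None or want == have for want, have in zip(pattern, t))
    ]


# "Who are the creators?" is a purely structural question the machine can answer.
creators = [t.obj for t in match(GRAPH, predicate=DC_CREATOR)]
print(creators)
```

Note what the sketch does not do: it compares predicate URIs as opaque strings. It has no idea what a "creator" is, which is exactly the third layer that still needs a person.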

So why pursue RDF? The goal is to make human knowledge, implicit and explicit, machine-readable, and not only machine-readable but automatically shareable and reusable by applications that understand RDF. Researchers pursuing the semantic web hope that by precipitating an integrated and interoperable data environment, application developers will be able to innovate in their business logic and provide better services across a range of data sets.

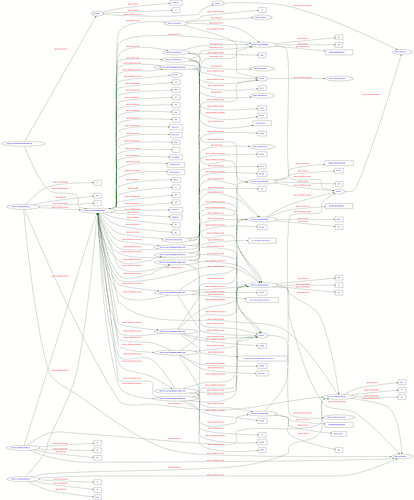

Why is this so hard? Well, partly because the world is so complex, and although RDF is theoretically able to model an entire world’s worth of data relationships, doing it seamlessly is just plain hard. You can spend time developing an RDF representation of all the data in your world, and then someone else will come along with their own world and their own set of data relationships. Being naturally friendly, you take in their data and realize that they have a completely different view of categories like “Author,” “Creator,” and “Keywords.” Now you have a big, beautiful dataset with a thousand similar, but not equivalent, pieces. The hard part is determining the relationships between the data.
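
The scenario above can be sketched in a few lines of Python. Everything here is an assumption for illustration: the two tiny datasets, the `their-vocab/author` property, and the `EQUIVALENT` mapping are invented, and the triples are plain tuples rather than a real RDF store. Treating a merge as a set union is also a simplification that only holds for graphs without blank nodes.

```python
DC_CREATOR = "http://purl.org/dc/elements/1.1/creator"
THEIR_AUTHOR = "http://example.org/their-vocab/author"  # hypothetical vocabulary

# Your world and their world, each as a set of (subject, predicate, object).
ours = {("http://example.org/book/1", DC_CREATOR, "Herman Melville")}
theirs = {("http://example.org/item/42", THEIR_AUTHOR, "Melville, Herman")}

# The easy part: merging is just piling the statements together.
merged = ours | theirs


def predicates(graph):
    """Collect the distinct predicates used in a graph."""
    return {p for _, p, _ in graph}


# The hard part: a person has to decide that these two properties mean the same thing.
EQUIVALENT = {THEIR_AUTHOR: DC_CREATOR}  # human-authored mapping, assumed here


def normalize(graph, mapping):
    """Rewrite predicates according to a human-supplied equivalence mapping."""
    return {(s, mapping.get(p, p), o) for s, p, o in graph}


aligned = normalize(merged, EQUIVALENT)

print(sorted(predicates(merged)))   # two similar but not equivalent properties
print(sorted(predicates(aligned)))  # one property, after human judgement
```

Even after the mapping is applied, nothing in the data says that “Herman Melville” and “Melville, Herman” are the same person, or that `book/1` and `item/42` might describe the same work. Each of those is another decision someone has to make.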

We immediately considered how RDF and Sophie would work together. RDF importing and exporting in Sophie could provide value by preparing Sophie for integration with other RDF-capable applications. But, as always, the real work is figuring out what it is that people could do with this data. Helping users derive meaning from a dataset prompts the question: what kind of meaning are we trying to help them discover? A universe of linguistic analysis? Literary theory? Historical accuracy? I think a dataset that enabled all of these would be 90% metadata and 10% data. This raises another huge issue: entering semantic metadata requires skill and time, and is therefore relatively rare.

In the end, RDF creates bigger, better piles of data, complete with provenance and other unique characteristics derived from the originating context. This metadata is important information that we’d rather hold on to than irrevocably discard, but it leaves us stuck in a labyrinth of data until we create the tools to guide us out. RDF is ten years old, yet it hasn’t achieved the acceptance of other solutions, like XML Schemas or DTDs. They have succeeded because they solve limited problems in restricted ways and require relatively little effort to implement. RDF’s promise is that it will solve much larger problems with solutions that have more richness and complexity, but ultimately the act of determining meaning or negotiating interoperability between two systems is still a human function. The undeniable fact remains: it’s easy to put everyone’s data into RDF, but that just leaves the hard part for last.