
January 10, 2006

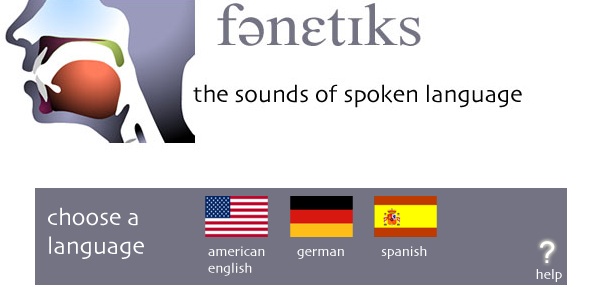

Learning Language Pronunciation

The University of Iowa hosts a great example of using digital technology to improve the teaching of language pronunciation. The Phonetics Flash Animation Project demonstrates how to pronounce sounds with Flash animations of anatomical diagrams, video clips of people making a particular sound, audio files of words with those sounds, and traditional written descriptions.

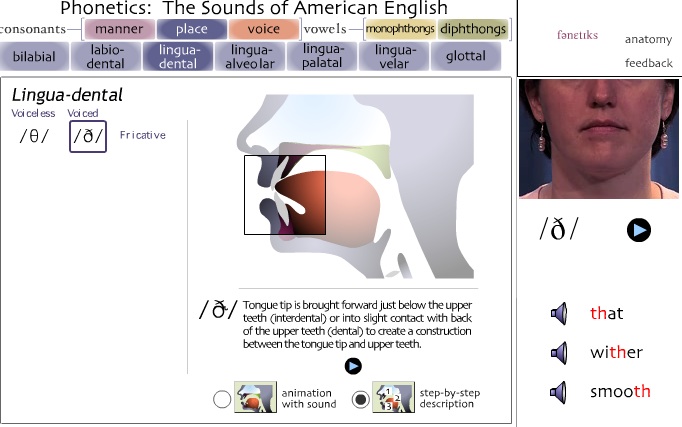

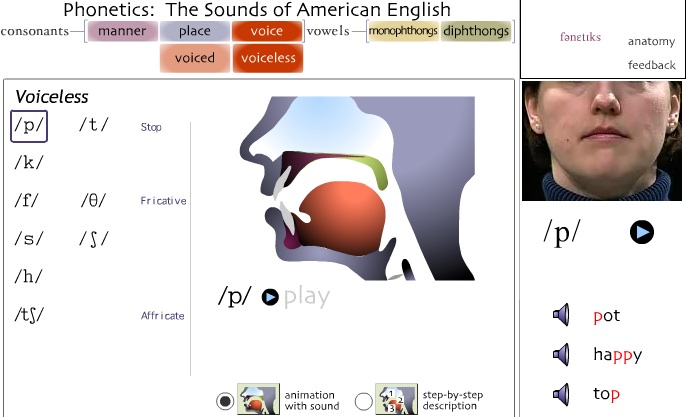

The clear visual and interface design requires few written directions and little documentation. A key to the project's success lies in the fact that it provides students with information in many different ways. There are step-by-step directions on which parts of the body are used. Animated drawings of a person's profile show the sound being produced, whereas the video clips show a real person (seen from the front) making the sound. Finally, by providing three examples of the sound in a word, the project gives the student an opportunity to contextualize its use in actual words. Another appreciated feature is that the project includes sounds used in German and Spanish that are not used in English, for example the rolled Rs found in Spanish.

Phonetics was a collaborative effort among the Department of Spanish and Portuguese, the Department of Speech Pathology and Audiology, and Academic Technologies. The end result clearly shows expert knowledge from all three participants. The clean interface also provides an enjoyable user experience, which comes from many wise and simple design decisions, such as using video and animation for different purposes. The front-on video shots are clearer than animation would have been, and the animations reveal a view that would obviously be impossible to show otherwise.

The site offers a drastically improved way of teaching how to produce sounds over traditional paper-based texts. This example hints that educators are only beginning to explore the vast possibilities of digital media in the classroom. It is difficult to imagine how any language class would not benefit from access to this kind of material for that particular language of study.

Posted by ray cha at 4:29 PM

January 6, 2006

Narrative Structure and Medium: "The Red Planet" and the Future of the Educational DVD-ROM

As we've noted before in Next/Text, a key question in considering the future of the digital textbook is whether the text should be delivered to the user via the web or on a disc. In the 1990s -- when Voyager pioneered the development of the educational CD-ROM -- the choice was clear: accessing video and audio online was far too difficult to make developing web content worthwhile.

Since the demise of Voyager, however, the production of disc-based educational material has gradually decreased, and it has become less clear that ROM discs are the medium of choice for delivering "thick" multimedia content. As some of the examples we've written about (especially the WebCT film course and the MIT Biology class) demonstrate, streaming video can be easily integrated into online instructional material: there's still much room for improvement, but it's clear that online video and audio delivery will only improve over time.

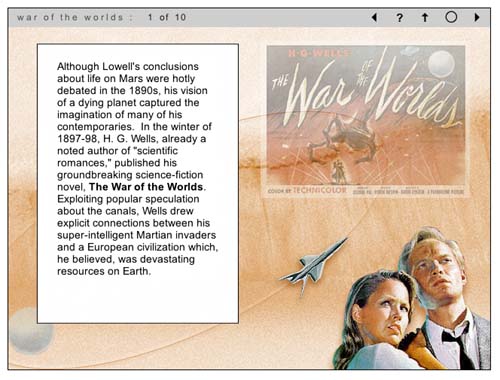

So what is the future of the disc-based educational text? The question seems especially relevant now that the recent industry debate over the formats of next-generation DVDs has provoked more than a few technology analysts to wonder aloud whether discs themselves are a doomed delivery medium. I'm not completely convinced by these arguments, as I feel that, as far as educational media are concerned, there may still be good reasons to put things on disc. In what follows, I'll be weighing the pros and cons of scholarly work on DVD-ROM through a discussion of The Red Planet, a DVD-ROM project published in 2001 by the University of Pennsylvania Press.

The Red Planet is the first publication in Penn's Mariner 10 Series, a publishing venture that represents the first attempt by a university press to publish scholarly work on DVD-ROM. The titles chosen for this Penn series are interdisciplinary studies that blend what C.P. Snow might call the "two cultures" of science and the humanities; each also includes a wide range of textual, visual and audio resources. Thus far, in addition to The Red Planet, Penn has published a volume on humanistic approaches to medicine; a physics text, "The Gravity Project," is forthcoming.

Interestingly, The Red Planet has its roots in an unsuccessful venture in online education: project co-author Robert Markley was first approached to do a series of video interviews to be embedded in a web course about science fiction. When that project fell through in 1997, Markley realized that such interviews would be an ideal first step towards creating a digital scholarly text that explored the roles Mars has played in 19th and 20th century astronomy, literature and speculative thought. Teaming up with multimedia designer/theorists Helen Burgess (also a co-author for Biofutures, a DVD-ROM-in-progress that I've previously discussed), Harrison Higgs and Michele Kendrick, Markley approached Penn with his idea for the project.

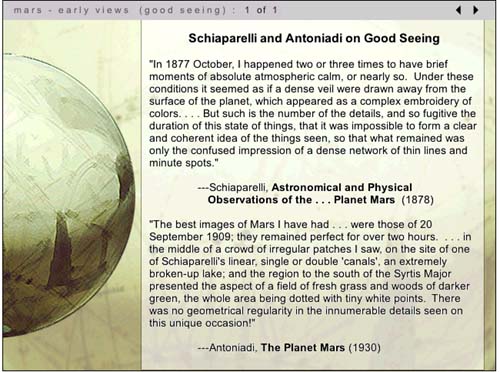

After getting the go-ahead from Penn -- and substantial funding from West Virginia University and Washington State University -- the group spent more than four years completing the project. Markley wrote a 200-page monograph on cultural and scientific approaches to Mars divided into nine chapters -- Early Views; The Canals of Mars; The War of the Worlds; Dying Planet; Red and Dead; Missions to Mars; Ancient Floods; Dreams of Terraforming; and Life On Mars. He and others in the group then interviewed science fiction writers including Kim Stanley Robinson, cultural critics such as N. Katherine Hayles and a diverse group of major scientific figures including Richard Zare, Carol Stoker, Christopher McKay and Henry Giclas (the Giclas interview is pictured below). They also assembled hundreds of current and archival photos, quotations from scientific and literary texts, dozens of clips from science fiction films, and an impressive array of diagrams explaining key concepts in astronomy. And to complement the DVD-ROM project, they authored a website with educational resources, a timeline, and updatable discussions of debates about Mars exploration.

The success of The Red Planet stems from the fact that all of this multimedia does not serve simply as a gloss on Markley's monograph: it changed Markley's own authoring process as well. As Markley points out in a chapter he wrote on the project for a book titled Eloquent Images, his main challenge was to create a work that was scholarly enough to be considered worthy by tenure committees, a requirement that has been a serious hurdle to the willingness of academics to invest time and effort in the creation of digital scholarly work. "The majority of commercial educational software treats content as a given," Markley wrote, "reified as information that has to be encoded within a programming language and designed in such a way as to enhance its usability." To Markley, moving beyond this "usable information" paradigm involved more than just foregrounding the text: it meant that the designers should make a significant contribution to the intellectual shape of the project, one that extended beyond usability issues and promoted them to the status of co-authors.

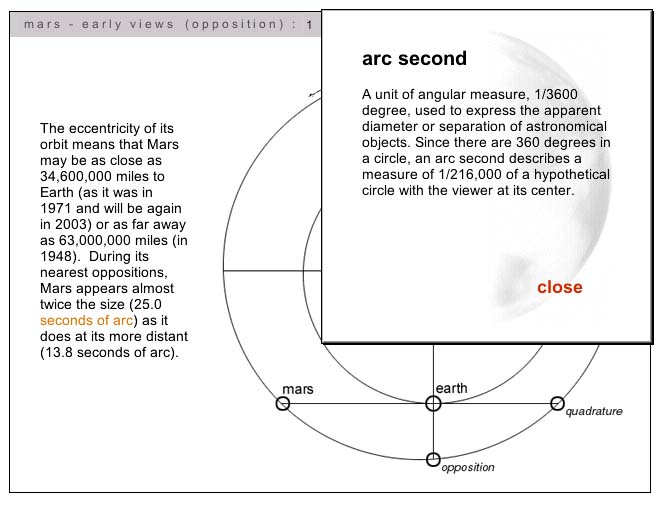

With this in mind, Markley and his co-authors worked collaboratively to develop a navigation structure for the project that reflected both scholarly and pedagogical concerns. The resulting interface eschews the hypertext structures of many web-based projects and relies instead on a chronological narrative structure that the authors felt best maintained the integrity of the scholarly text. In other words, hyperlinks don't move the user around inside the central text, but rather lead to film clips or selections of explanatory material (such as the diagram illustrating the term 'arc second,' pictured below).

The structure of The Red Planet thus resembles, in some ways, the structure of Columbia University's Gutenberg-e publications: if the reader chooses, they can read straight through the text and ignore the linked explanations and supporting material. For the most part, video interviews open on separate pages and launch automatically (the screenshot above actually shows an exception to this), but the reader can always use the forward navigation key to advance the narrative.

The Red Planet demonstrates that this text-centric approach to digital scholarly work need not come at the expense of the quality of multimedia integrated into the project. From the beginning, Markley and his collaborators wanted The Red Planet to be a standout in terms of both the amount and the quality of supporting media. This, in turn, determined their choice of medium: in writing grant proposals to fund the development of the project, Markley stressed the importance of using DVD-ROM instead of CD-ROM to construct the volume. At the time The Red Planet was produced, the disc was able to hold about six times more data than a traditional CD-ROM; it was also able to read this data about seven times faster, meaning that the video segments played much more smoothly and were of far higher quality. As Markley wrote in his chapter for Eloquent Images, "video on CD-ROM is a pixilated, impressionistic oddity: on DVD-ROM, video begins to achieve something of the semiotic verisimilitude we associate with film."

The DVD-ROM format also allows The Red Planet's designers to incorporate much longer video clips into their project: interviews are up to five minutes in length, and film clips are the maximum length allowable under fair use. The longer interviews allow for detailed explorations of the question at hand, while the expansiveness of the film selections gives the viewer a sense that they are experiencing a portion of the actual film rather than a snapshot.

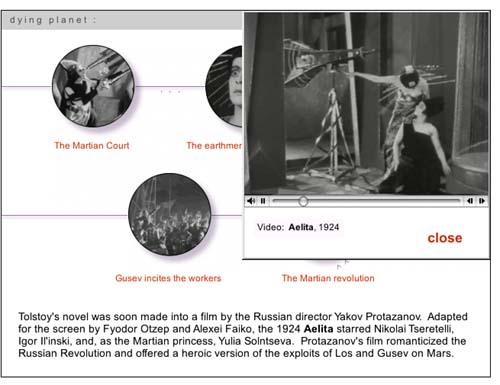

Still, though the videos are high quality, they are not full screen (as shown by the screenshot above, which shows a page that features a series of clips from the 1924 Russian film Aelita). The limited size of the clips is one of a series of tradeoffs Markley and his team found they had to make when confronted with their own limited budgets and realistic pricing expectations for their DVD-ROM. In his chapter for Eloquent Images, Markley writes:

In authoring The Red Planet, we were caught in an ongoing process of having to decide what we could afford in time, money and labor to live up to our grant application claims that DVD ROM could do what neither CD Rom nor the web could manage: integrate hours of high-quality video into a scholarly multimedia project. At the same time, we had to engineer downward the minimum requirements of RAM, operating systems, monitor resolutions, etc to avoid pricing our project out of the mainstream educational market.

Markley's point is an important one: DVD-ROMs have the potential to deliver amazing multimedia content, but that is simply the capacity of the medium. A successful DVD-ROM author not only has to find the time, money, and skill to produce the project; they also have to operate within the constraints of a market which is increasingly accustomed to getting educational content online for free. In the end, The Red Planet was probably more successful at the former than the latter: as an example of the genre, it is exceptional both in content and design, but the disc has yet to find the wide audience of both scholars and Mars enthusiasts that its authors had hoped for.

Looking at The Red Planet and reading Markley's theorization of the work done by his team, I'm impressed by their very self-reflective effort to author a major project over several years in the midst of a rapidly shifting new media landscape. I'm convinced by Markley's argument in Eloquent Images that the DVD-ROM format allows multimedia authors to create projects that are more narrative-centered, and thus also, perhaps, more scholarly and more acceptable to the legitimating bodies of academia. At the same time, I'm unsure what will happen to the market for such work: either we will see a critical mass of DVD-ROMs emerge that will create a critical mass of reader/users, or the format will atrophy as the web becomes more and more the preferred method of distribution. Markley himself is aware of the uncertain future of projects such as his own: while making the case for The Red Planet as the best fit between form, content and medium, he also notes that the project is less "a model to be emulated" than "a historical document, a means to think through the scholarly and professional legitimation of video and visual information."

So should scholars persist in authoring in the medium? I think so, as long as they take a clear-eyed view of its advantages and pitfalls, and as long as they realize -- as Markley and his team did all along -- that while the time and effort spent on a DVD-ROM project is greater than the time spent on a traditional academic monograph, the audience might ultimately be more limited. Not always: a project such as the Biofutures DVD (discussed in an earlier post), which has been developed with a very specific pedagogical aim, might find a more extensive academic audience even if it doesn't find a non-academic one. The same might be said for Medicine and Humanistic Understanding, the newest title in the Mariner 10 series. The key is finding a way to create a new media object that is stable enough to allow users to take advantage of its merits both now and in the foreseeable future.

Posted by lisa lynch at 2:37 PM

January 3, 2006

Reading the "Augmented" Digital Text: AR Volcano

When we talk about "digital textbooks" here at Next/Text, we're usually referring to textbooks that have moved from the printed page to the computer screen. But there are other types of digital texts -- and digital learning environments -- that move beyond the boundaries of the screen to rethink our assumptions of what books might be. One example is the use of augmented reality to create texts which resemble books in their basic physical form, but have features that dramatically alter the experience of reading and learning.

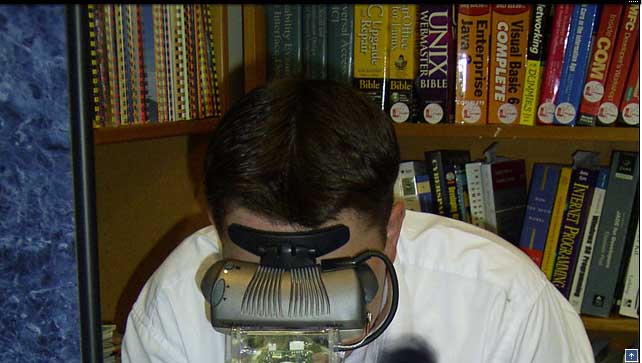

Over the past five years, HCI engineer Mark Billinghurst (formerly based at the University of Washington and now director of the Human Interface Technology Laboratory New Zealand at the University of Canterbury) has been working on a series of "magic books" which use AR technology to project three-dimensional animations off each page, allowing the user to learn about a topic by watching a series of scenarios and demonstrations at their own pace. AR Volcano, created with the assistance of Eric Woods and Graham Aldridge, uses AR to teach students about the science of volcanoes, including details on tectonic plates, subduction, rifts, 'the ring of fire,' volcano formation, and eruptions. I'm going to discuss this project here, with the caveat that I haven't used it: I've only read through articles and documentation and watched a few demonstration videos.

What is augmented reality? While most digitally literate folks might be familiar with virtual reality, augmented reality has generally gotten less attention. An article from the April 2002 issue of Scientific American neatly outlines the differences between the two media:

Augmented reality (AR) refers to computer displays that add virtual information to a user's sensory perceptions. Much AR research focuses on "see-through" devices, usually worn on the head, that overlay graphics and text on the user's view of his or her surroundings. (Virtual information can also be in other sensory forms, such as sound or touch, but this article will concentrate on visual enhancements.) AR systems track the position and orientation of the user's head so that the overlaid material can be aligned with the user's view of the world. Through this process, known as registration, graphics software can place a three-dimensional image of a teacup, for example, on top of a real saucer and keep the virtual cup fixed in that position as the user moves about the room. AR systems employ some of the same hardware technologies used in virtual-reality research, but there's a crucial difference: whereas virtual reality brashly aims to replace the real world, augmented reality respectfully supplements it.
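The registration step described in the quoted passage can be illustrated with a little geometry. What follows is only a minimal sketch, not code from any AR system: the function names, the yaw-only head rotation, and the focal length of 800 are all assumptions made for illustration. It shows how a virtual teacup fixed at one point in the room gets a new on-screen position each time the tracked head pose changes.

```python
import math

def world_to_view(point, head_pos, head_yaw):
    """Express a world-space point in the viewer's own coordinate frame.

    head_yaw is the head's rotation about the vertical (y) axis, in radians.
    Pitch and roll are ignored to keep the sketch short (an assumption).
    """
    # Shift the world so that the head sits at the origin.
    dx, dy, dz = (p - h for p, h in zip(point, head_pos))
    # Undo the head's rotation by rotating through the opposite angle.
    c, s = math.cos(-head_yaw), math.sin(-head_yaw)
    return (c * dx + s * dz, dy, -s * dx + c * dz)

def project(view_point, focal_length=800.0):
    """Pinhole projection onto the display; None if the point is behind the viewer."""
    x, y, z = view_point
    if z <= 0:
        return None
    return (focal_length * x / z, focal_length * y / z)

def register(point, head_pos, head_yaw):
    """Where on the display the virtual object must be drawn for this head pose."""
    return project(world_to_view(point, head_pos, head_yaw))

# A virtual teacup fixed two metres in front of the starting position.
teacup = (0.0, 0.0, 2.0)

print(register(teacup, (0.0, 0.0, 0.0), 0.0))                   # looking straight at it
print(register(teacup, (1.0, 0.0, 0.0), 0.0))                   # stepped one metre sideways
print(register(teacup, (1.0, 0.0, 0.0), math.atan2(-1.0, 2.0)))  # turned to face it again
```

The teacup's world coordinates never change; only the drawing position does, which is what keeps the virtual cup "fixed" on the saucer as the user moves about the room.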

The image above illustrates how Billinghurst's AR Volcano supplements the reality of the "book" that the user encounters. Without goggles, someone approaching AR Volcano would only see what looked like a conventional paper book (albeit one which is only six pages long) propped on a podium. With the goggles, the computer software recognizes special patterns embedded in the book and replaces them with photo-realistic 3D objects. In this photo, a volcanic eruption takes place over the course of several minutes. The image will appear no matter where the user positions themselves around the book, and a new image can be seen by simply turning the page.

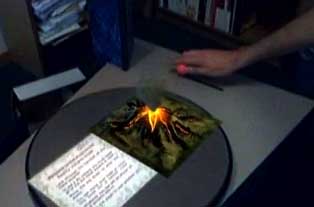

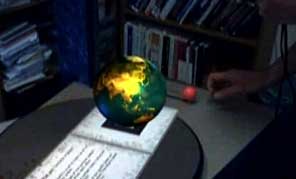

AR Volcano has a significant feature that sets it apart from Billinghurst's previous magic books and makes the technology much more suitable for creating learning environments. In earlier books -- such as the Black Magic Book, which tells the story of the America's Cup Race -- the user was positioned as a passive spectator in an enhanced "reading" environment. AR Volcano, however, provides an interactive slider that allows the user to control volcano formation and eruption as well as the movement of tectonic plates. In the image above, the slider (a physical slider attached to the book podium) is adjusted so that the volcano is erupting at a rapid pace; in the image below, the slider is adjusted downwards so that the movement of tectonic plates on the earth's surface occurs more gradually. The audio narrative also adjusts, so that it keeps pace with the user's movement through the pages of the book.

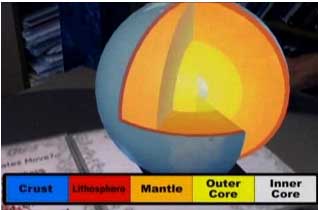
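One way to picture the slider is as a control that scales how quickly the animation clock runs. The sketch below is purely illustrative: I don't know how AR Volcano actually maps its slider to the animation, and the function names, the 0-to-1 slider range, and the speed limits are all assumptions.

```python
def playback_rate(slider, slowest=0.25, fastest=4.0):
    """Map a slider position in [0, 1] to an animation speed multiplier.

    The linear mapping and the two limits are hypothetical choices.
    """
    slider = min(1.0, max(0.0, slider))  # clamp readings outside the range
    return slowest + slider * (fastest - slowest)

def advance(animation_time, real_seconds, slider):
    """Move the animation clock forward by an amount scaled by the slider."""
    return animation_time + real_seconds * playback_rate(slider)

# Two seconds of real time with the slider fully up, then fully down.
print(advance(0.0, 2.0, 1.0))
print(advance(0.0, 2.0, 0.0))
```

With the slider up, the eruption races ahead; with it down, the same two seconds of viewing cover only a small slice of the animation.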

Another useful feature, common to all "magic books," is a zoom function connected to the user's experience of physical space -- simply put, as a user moves physically closer to the book the image grows larger, mirroring the experience of approaching an object in the real world. The photo below is taken from the vantage point of someone who has moved closer to the 3-D visualization of the earth's various layers in order to examine it more closely. Notice that the three-dimensional globe is overlaid with a two-dimensional text label which color-keys the layers indicated by the cross-section.
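The physical zoom follows from ordinary perspective: under a simple pinhole-camera model, the size of an object on the display is inversely proportional to its distance from the viewer. Here is a minimal sketch of that relationship; the function name and the default focal length are assumptions for illustration rather than anything taken from the magic book software.

```python
def apparent_height(object_height, distance, focal_length=1.0):
    """On-screen height of an object under a pinhole-camera model.

    Halving the distance doubles the apparent height, which is the
    "zoom" a user gets simply by leaning closer to the page.
    """
    if distance <= 0:
        raise ValueError("distance must be positive")
    return focal_length * object_height / distance

# A 20 cm virtual globe viewed from one metre, then from half a metre.
far = apparent_height(0.2, 1.0)
near = apparent_height(0.2, 0.5)
print(far, near, near / far)
```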

Since it reflects a user's bodily experience, the physical zoom enhances the reality effect of AR books; ideally, the technology will evolve to the point where the augmentations will become less cartoonish and more natural, even "transparent" to the user. The improvement of what AR designers call the "immersion effect" is the focus of another project at Billinghurst's HIT lab, AR Relight, which attempts to improve shadow casting in 3D models.

Making AR more believable is only one of the challenges faced by researchers like Billinghurst. Another is integrating interactive functions into a text in a way that makes it more portable and booklike than AR Volcano: while the Black Magic Book resembles a conventional spiral-bound volume, AR Volcano depends on its computerized podium. Since portability is one of the hallmark features of textbooks -- including digital ones, if one has a laptop -- the technology has a way to go before it can emulate the combination of interactivity and portability of the Young Lady's Illustrated Primer imagined by Neal Stephenson in his novel The Diamond Age, a book which uses the wonders of nanotechnology to generate three-dimensional, interactively narrated animations to guide the protagonist through the challenges of her girlhood.

AR researchers probably won't need to wait for functional nanotechnology to make viable AR books that could be used in teaching contexts: medical books, for example, in which 3D visualizations emerge from the pages. Alternatively, educational uses of AR might move away from the book metaphor as a means of navigation: for example, a project called MARIE at the University of Sussex uses AR technology to allow students taking online courses to view and interact with three-dimensional objects that the instructor is describing. But AR has made its impact as a way of imagining a form of digital textuality that is not physically wedded to a computer screen -- or, for that matter, to immersion in an entirely simulated world.

For those who are interested in experimenting with the technology themselves, Billinghurst and his group have released an AR toolkit, which they describe as "a collection of libraries, utilities applications, and documentation and sample code aimed at creators of augmented reality applications." The libraries allow users to capture images from video sources, process those images to optically track markers in the images, composite computer-generated content with the real-world images, and finally display the result using the graphics language OpenGL.
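The four stages listed above (capture, track, composite, display) can be mimicked in a few lines of plain Python. To be clear, this toy does not use the toolkit or OpenGL, and none of the function names below come from its libraries: the "video frame" is a small hypothetical grid of numbers, the "marker" is a 2x2 pattern, and the "display" is a text rendering. It is only meant to show how the stages hand off to one another.

```python
# Hypothetical 2x2 pattern standing in for a printed tracking marker.
MARKER = [[9, 9],
          [9, 0]]

def capture_frame():
    """Stand-in for grabbing a video frame: a 4x6 grid of brightness values."""
    return [
        [1, 1, 1, 1, 1, 1],
        [1, 1, 9, 9, 1, 1],
        [1, 1, 9, 0, 1, 1],
        [1, 1, 1, 1, 1, 1],
    ]

def find_marker(frame, marker):
    """Scan the frame for the marker; return its top-left (row, col) or None."""
    rows, cols = len(marker), len(marker[0])
    for r in range(len(frame) - rows + 1):
        for c in range(len(frame[0]) - cols + 1):
            if all(frame[r + i][c:c + cols] == marker[i] for i in range(rows)):
                return (r, c)
    return None

def composite(frame, overlay, position):
    """Return a copy of the frame with the overlay drawn at the marker position."""
    out = [row[:] for row in frame]
    r0, c0 = position
    for i, overlay_row in enumerate(overlay):
        for j, value in enumerate(overlay_row):
            out[r0 + i][c0 + j] = value
    return out

def render(frame):
    """Stand-in for the display step: turn the grid into printable text."""
    return "\n".join("".join(str(v) for v in row) for row in frame)

frame = capture_frame()                   # 1. capture an image
position = find_marker(frame, MARKER)     # 2. track the marker in it
overlay = [[7, 7], [7, 7]]                # the "computer-generated content"
if position is not None:
    frame = composite(frame, overlay, position)  # 3. composite
print(render(frame))                      # 4. display
```

A real tracker also has to recover the marker's orientation and distance so that the 3D content can be drawn in perspective; this sketch only finds its position in a flat grid.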

Posted by lisa lynch at 2:35 PM